Human Body Multiple Parts Parsing for Person Reidentification Based on Xception

Sibo Qiao, Shanchen Pang1, *, Xue Zhai1, Min Wang2, Shihang Yu3, Tong Ding4, Xiaochun Cheng5, *

- DOI

- 10.2991/ijcis.d.201222.001

- Keywords

- Person reidentification; Semantic parsing; Global representations; Local representations

- Abstract

Information grows explosively in socially networked industries, where vast numbers of sensors capture extensive data such as images and text. Pedestrians are the main initiators of activities in these settings, so it is important to quickly obtain relevant information about pedestrians from a large number of images. Person reidentification is an image retrieval technology that retrieves a target person from a large set of images. However, changeful poses, occlusion and background clutter make it difficult to extract robust and discriminative person representations that distinguish different people. In this paper, to obtain more discriminative representations, we propose a human body multiple parts parsing (BMPP) architecture, which simultaneously captures local pixel-level representations from body parts and global representations from the whole body. Additionally, a straightforward preprocessing method is adopted to improve the resolution of images in person reidentification benchmarks. To mitigate the negative effects of changeful poses, a simple yet effective representation fusion strategy is applied to the original and horizontally flipped images to obtain the final representations. Experimental results indicate that the proposed method attains superior performance to most state-of-the-art methods on CUHK03 and Market-1501.

- Copyright

- © 2021 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

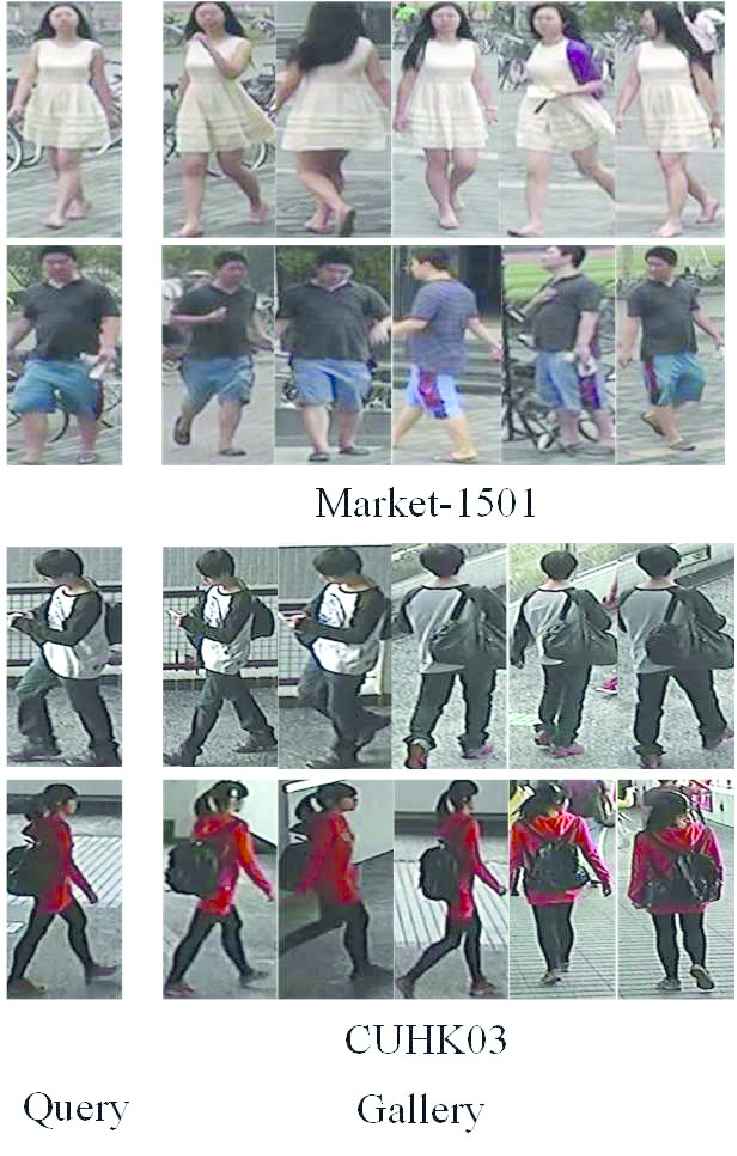

Nowadays, to ensure the safety of industrial production, surveillance cameras can be seen almost everywhere in industrial settings. With the popularity of surveillance cameras, a huge social industrial network has gradually formed around people. The videos and pictures provided by this network can be used to promptly locate a person who causes a dangerous event. However, without an effective person retrieval method, a large amount of time is spent filtering useless information, at considerable cost in human and material resources. Person reidentification refers to retrieving all images of the same person from a gallery, given a query image of that person. The images in benchmarks (e.g., Market-1501 [1] and CUHK03 [2]) are captured by various surveillance cameras with different backgrounds, body poses and angles in socially networked industries. These images always have overlapping fields of view, hence person reidentification can be viewed as a cross-camera image retrieval task. Two person benchmarks, Market-1501 [1] and CUHK03 [2], are used in this paper. Several person images from the two benchmarks are shown in Figure 1: the query contains four different person images, and the gallery consists of the corresponding retrieved images, which are taken from different online cameras.

Figure 1. Several person images in the two benchmarks. The first column is four query person images. The second to fifth columns correspond to their retrieved images, respectively.

Currently, online security cameras are used in public places such as roads, schools and industrial sites, making our society more transparent and safe. When a criminal case occurs, the police can use person reidentification to quickly retrieve a suspect from a large quantity of images. However, many factors make person reidentification an extremely challenging task. Firstly, even when a single camera captures a person, the images taken at different times vary greatly due to lighting conditions, occlusion, background clutter, human posture and other factors. Secondly, the effect of these factors is more pronounced when a person is captured by different cameras. Two kinds of errors can therefore occur: images of one person captured by two or more surveillance cameras may be identified as different individuals, and images of two or more different individuals may be identified as one person. Last but not least, person images taken by surveillance cameras are usually of poor quality, making it considerably difficult to learn effective representations that distinguish identities. Consequently, we argue that an outstanding person reidentification model must obtain excellent representations to identify different individuals.

Several traditional models distinguish one identity from another by learning low-level representations such as color, shape or texture, but the performance of these models is poor [3,4]. With the rapid development of deep learning, high-level image representations learned by a deep convolutional neural network (CNN) have been shown to be more robust. Several methods based on purpose-designed CNN architectures have made commendable improvements on person reidentification as well as on medical and transportation problems [5–14]. Most existing deep learning methods use a global-level representation of the human image [15–17], on the assumption that representations learned by a deep CNN capture the most significant cues to the identities of different individuals. However, none of these methods performs fully satisfactorily, because the extracted global-level representations cover the human body parts and the background regions simultaneously. The background contains a lot of clutter, which may add noise to the final global-level representation.

To address these problems, some recent methods leverage local-level representations extracted from human body parts [5,8,18–20]. These methods locate the human body parts more precisely, which captures discriminative features and reduces the negative effects of clutter to some extent. Their performance shows that local-level representations are more robust. By studying these methods, we find that almost all of them adopt bounding boxes to locate human body parts automatically. However, the bounding boxes are coarse: they include background clutter or cover the person incompletely, so they cannot capture fine-grained representations of human body parts. In addition, the person reidentification systems proposed in these works are very complex CNN architectures. As described in [21], these systems perform well because of sub-models that involve many complicated training stages.

Through a set of experiments, we observe that the poor resolution of person images is a key factor affecting model accuracy. In this work, we first apply a simple preprocessing step to improve the resolution of the person images. Then, we introduce the human body multiple parts parsing (BMPP) architecture, which merges a human classification model and a human parsing model. The human classification model exploits global-level representations of the human body, and the human parsing model exploits local-level cues. Human parsing is used instead of bounding boxes to extract local body information, mainly because human parsing is a pixel-level method that can accurately locate body parts in variable environments. Inspired by [21], we separate the person body into 5 regions: head, upper-body, lower-body, shoes and foreground.

To limit the complexity of the proposed model, we use the popular deep convolutional model Xception [22], with minor modifications, as the backbone. We also demonstrate that this model performs well with no bells and whistles when it operates on full images in benchmarks such as Market-1501 [1] and CUHK03 [2].

The main contributions of this paper are as follows:

To improve the resolution of images in person benchmarks, we adopt a straightforward super-resolution method to preprocess the person images. A group of experiments demonstrates that this preprocessing strategy makes the model perform considerably better than most existing methods.

We propose a human BMPP model, which simultaneously explores local-level representations from multiple human body parts and global-level representations from the whole human body. Human semantic parsing provides representations complementary to the global features.

To improve the discrimination of person representations, we propose a representation fusion strategy. An original person image and the same image flipped horizontally both pass through the BMPP architecture, and the strategy combines the two outputs into the final representation. Our experiments demonstrate that BMPP with the image-flipped technique outperforms BMPP without it.

The remainder of this paper is organized as follows. Several related works on person reidentification are introduced in Section 2. In Section 3, we present the BMPP architecture for person reidentification. In Section 4, we give experimental results to show the performance of the BMPP architecture. In Section 5, we conclude the paper and discuss future work.

2. RELATED WORK

Deep learning has penetrated many areas of computing, person reidentification in particular, and plays a significant role in their development. Person reidentification has made substantial progress thanks to the rapid evolution of deep CNNs. Next, we provide an overview of the literature on person reidentification.

Recently, a growing number of works have focused on capturing human body parts to obtain robust representations. The works [18–20,23] adopt predefined horizontal stripes to slice the feature maps of the input human image and thereby extract human body parts. Specifically, Li et al. [18] exploit a spatial transform network [24] to learn three human body parts: head-shoulder, upper-body and lower-body. The spatial transform network [24] can adaptively transform and align body-part data in space, improving the accuracy of the model. Such methods can only learn human body representations roughly. As an improvement, several works [5,16,25,26] explore human body-part cues for person reidentification. Multiple patches of a human image are extracted in [5,25] to capture local cues. Yao et al. [26] use a region proposal network to detect body-part regions and generate local cues. Xu and Srivastava [27] design an automatic recognition algorithm for sign images, which accurately extracts image regions of interest and recognizes them automatically. Generally speaking, these models are very complex and have a multi-stage training process.

To address the inaccuracy of bounding boxes, several works use attention mechanisms for person reidentification [28–32]. Li et al. [28] propose an integrated attention model that combines soft and hard attention mechanisms. Liu et al. [29] propose a multidirectional attention model, which captures attentive representations by masking representations at various levels with attention maps. To better extract global-level and local-level features simultaneously, a multi-scale body-part mask guided attention architecture is proposed in [32], in which body-part masks guide the training of the corresponding attention.

To handle the background clutter introduced by bounding boxes, several works introduce semantic segmentation, which performs excellently on the person reidentification task [21,33]. So far, only a few works pay close attention to semantic segmentation in person reidentification. Thanks to its pixel-level accuracy, semantic segmentation is naturally more suitable than bounding boxes for locating human body parts, and it is more robust to changes in body posture. A human semantic parsing model is proposed in [21] to extract local feature masks from the human body, which yields state-of-the-art performance.

Inspired by [21], we study the person reidentification problem using semantic segmentation. We present an integrated architecture named BMPP, which includes a human parsing model and a human classification model. The human parsing model extracts local-level representations from multiple human body parts, and the human classification model extracts global-level representations from the whole human body. Additionally, to improve the resolution of images in the benchmarks, we adopt a simple super-resolution method to preprocess the images. To mitigate the negative effects of changeful poses, a simple yet effective representation fusion strategy is applied to the original and horizontally flipped images to obtain the final representations. The next section provides the details of our methods.

3. OUR METHODS

In this work, Xception [22] is adopted as the backbone architecture for both the human parsing and human classification models. Hence, we first briefly introduce the Xception architecture. Subsequently, we describe our human classification and human parsing models in detail. Finally, following [21], we explain how the human parsing model is combined with the human classification model to produce the final person reidentification architecture.

3.1. Human Classification Model

The backbone architecture of the human classification model is Xception [22], so we first describe it. Xception [22] is a CNN architecture with 36 layers, based entirely on depthwise separable convolution layers. A depthwise separable convolution performs similarly to a regular convolution but has fewer parameters. Therefore, Xception [22] has a considerably lower computational cost than popular network architectures such as Inception-V3 [34], ResNet-50 [35] and ResNet-152 [35]. These architectures are deeper than Xception [22]: Inception-V3 [34] has 48 layers, ResNet-50 [35] has 50 layers and ResNet-152 [35] has 152 layers. Although Xception is shallower, our experiments demonstrate that it gives competitive or better results than these architectures. The quantitative comparison is performed through a set of tests with different choices of backbone architecture.
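The parameter saving of depthwise separable convolutions can be illustrated with a small calculation. The sketch below counts weights only (bias terms are ignored), and the layer sizes are illustrative assumptions, not values taken from the Xception architecture or from this paper.

```python
def conv_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights in a regular k x k convolution (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def separable_conv_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights in a depthwise separable convolution (bias terms ignored).

    Depthwise step: one k x k filter per input channel.
    Pointwise step: a 1 x 1 convolution that mixes the channels.
    """
    depthwise = kernel_size * kernel_size * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 256 input and 256 output channels.
regular = conv_params(3, 256, 256)
separable = separable_conv_params(3, 256, 256)
print(regular, separable)
```

For this hypothetical layer the separable version needs roughly one ninth of the weights, which is the kind of saving the text refers to.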

The Xception [22] architecture is inspired by Inception-V3 [34], where Inception modules are replaced with extremely separable convolutions. In these extremely separable convolutions,

To obtain a pretrained human classification model, we make some modifications to the baseline Xception [22] architecture. We remove the last classification layer of the original Xception [22] model. Then, after the global average pooling layer, we add a fully-connected layer with 512 units followed by a ReLU nonlinearity. To mitigate overfitting, a dropout layer with a rate of 0.5 is placed after the activation layer. Finally, a fully-connected layer with 2048 units is added after the dropout layer, so the human classification model outputs a 2048-D global representation. During training, the 2048-D global representation is passed to a multi-class classification layer with a softmax function. During testing, the 2048-D representation is used to retrieve correct matches of a target person from the benchmarks. Section 4 reports the performance of the model when Xception [22], Inception-V3 [34], ResNet-50 [35] and ResNet-152 [35] are used in turn as the backbone architecture.

3.2. Human Parsing Model

To explore local body-part cues for person reidentification, we use human parsing, as in [21]. Human parsing is a pixel-level classification of a person image, which captures a more comprehensive representation of the image. We argue that, compared with bounding boxes, pixel-level operation not only improves performance considerably but is also significantly more robust to variations in person pose and background clutter.

Xception [22] is adopted as the backbone of the human parsing model. The architecture is inspired by Deeplabv3+ [36], a well-known encoder-decoder model for semantic segmentation. To suit the human parsing task, we make several modifications to the Xception [22] architecture. The performance of human parsing depends largely on whether an image with effective resolution is obtained. Hence, to reduce the loss of representations caused by pooling operations, we replace all max pooling operations with depthwise separable convolutions whose strides are set to 2. Simultaneously, extra batch normalization [37] and ReLU are added after each

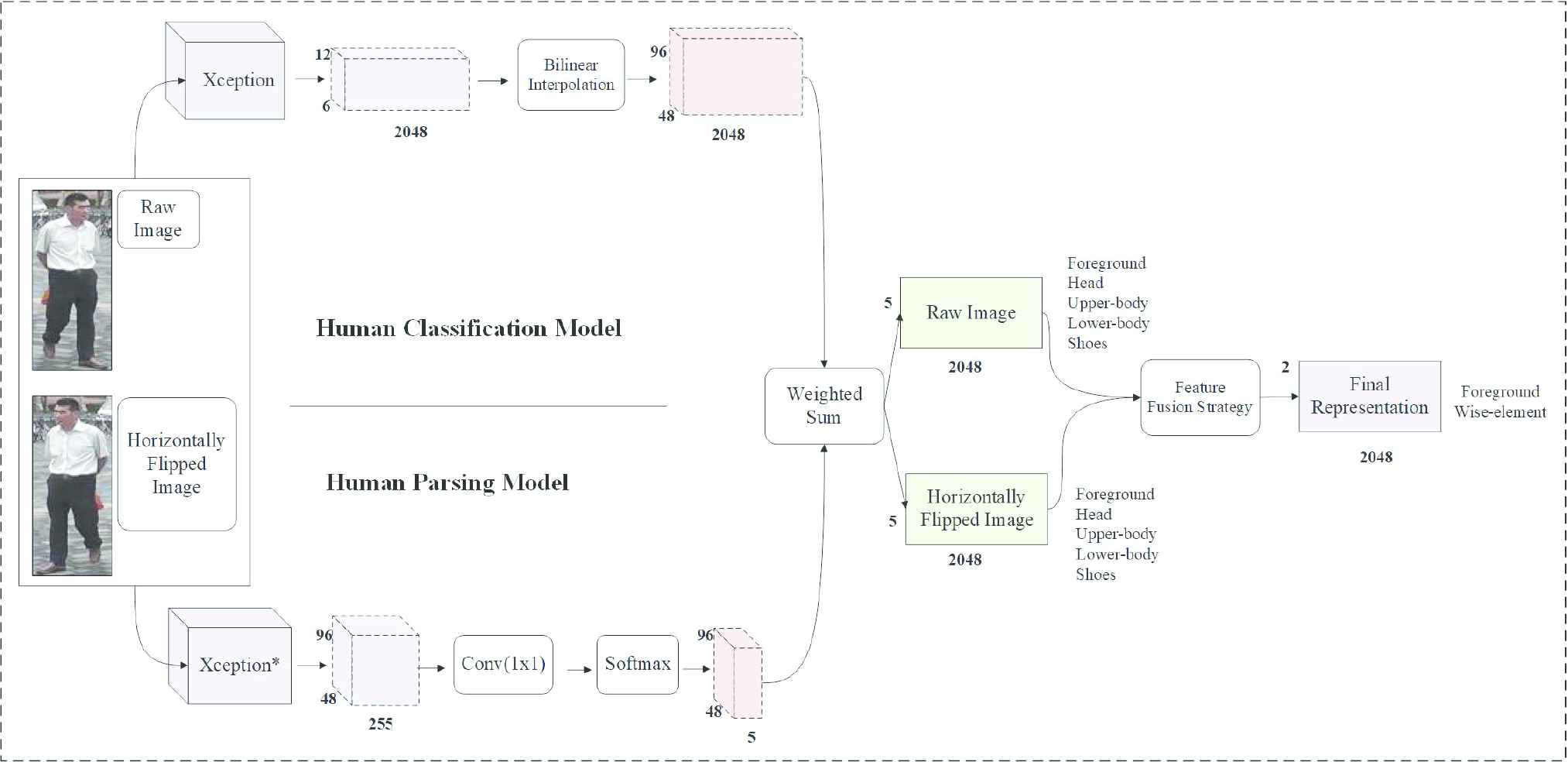

3.3. Overall Architecture for Person Reidentification

The overall architecture for person reidentification proposed in this article consists of two branches: the human classification model for global-level representations and the human parsing model for local-level body-part representations. The overall architecture is named BMPP (body multiple parts parsing) and is illustrated in Figure 2. As shown in Figure 2, the human classification model, based on the Xception model, extracts global activation values from the input person image. Simultaneously, the human parsing model, based on the Xception* model, produces masks of the different human body parts. The Xception* model is the Xception model modified to parse human body parts, as described in Section 3.2. The final person representation is generated from the raw image and its horizontally flipped image through the feature fusion strategy described later in this section.

Figure 2. BMPP architecture proposed in the paper.

To explore visual cues from multiple local body parts, we employ the mask maps produced by the human parsing model. These mask maps correspond to 5 regions: foreground, head, upper-body, lower-body and shoes. We argue that segmenting the human body into these 5 regions improves the performance of the BMPP model without a large computational cost. In BMPP, we apply the same operations to the semantic regions as [21]. The output activations of the human classification model are pooled multiple times, each time with one of the five mask maps. This contrasts with global average pooling, which ignores where in the spatial domain the activations occur. The pooling within each of the 5 parsing regions can be seen as a weighted sum in which the mask maps serve as weights, yielding more effective and robust person representations. Equivalently, the weighted sum is a matrix multiplication between the outputs of the human classification and human parsing models. The multiplication collapses the spatial dimensions and generates five 2048-D feature vectors that represent one human body: foreground, head, upper-body, lower-body and shoes. Next, an element-wise max operation is applied across the head, upper-body, lower-body and shoes vectors, generating one 2048-D feature vector named wise-element. In the end, we obtain two 2048-D feature vectors: foreground and wise-element.
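The mask-weighted pooling and the element-wise max can be sketched in a few lines. This is a minimal illustration in plain Python rather than the authors' Keras implementation: the function names are hypothetical, the spatial dimensions are flattened into lists, and the toy input uses 2 channels and 4 spatial positions instead of the 2048 channels used in BMPP.

```python
from typing import Dict, List, Tuple

PARTS = ["foreground", "head", "upper-body", "lower-body", "shoes"]


def masked_pool(features: List[List[float]], mask: List[float]) -> List[float]:
    """Weighted sum over spatial positions, using one mask map as weights.

    features: C rows, each holding H*W flattened activations.
    mask: H*W flattened weights for one body region.
    Returns a C-dimensional vector (one value per channel).
    """
    return [sum(a * w for a, w in zip(channel, mask)) for channel in features]


def part_representations(
    features: List[List[float]], masks: Dict[str, List[float]]
) -> Tuple[List[float], List[float]]:
    """Pool the activations once per region, then merge the four body parts."""
    pooled = {name: masked_pool(features, masks[name]) for name in PARTS}
    body_parts = [pooled[name] for name in PARTS if name != "foreground"]
    # Element-wise max across head, upper-body, lower-body and shoes.
    wise_element = [max(values) for values in zip(*body_parts)]
    return pooled["foreground"], wise_element


# Toy activations: 2 channels, 4 spatial positions (illustrative values only).
features = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.0, 1.0, 2.0]]
masks = {
    "foreground": [1, 1, 1, 0],
    "head": [1, 0, 0, 0],
    "upper-body": [0, 1, 0, 0],
    "lower-body": [0, 0, 1, 0],
    "shoes": [0, 0, 0, 0],
}
foreground, wise_element = part_representations(features, masks)
print(foreground, wise_element)
```

Stacking the five masks as rows of a matrix and the activations as columns turns the five calls to `masked_pool` into a single matrix multiplication, which is the equivalence stated in the text.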

A final person image representation is computed from an original person image and the same image flipped horizontally, as shown in Figure 2. The procedure is as follows. Firstly, the BMPP architecture generates two representations from the original image, foreground-original and wise-element-original. Similarly, it generates foreground-flipped and wise-element-flipped from the horizontally flipped image. Then, the feature fusion strategy combines foreground-original and foreground-flipped into the final foreground representation. The final wise-element representation is computed from wise-element-original and wise-element-flipped in the same way. Finally, we concatenate the foreground representation with the wise-element representation to obtain the final 2048-D person image representation. Our experiments demonstrate that BMPP with the image-flipped technique outperforms BMPP without it. The strategy of feature fusion is described as follows:

Where both

4. EXPERIMENTS

4.1. Data Sets and Evaluation Strategy

To evaluate the proposed BMPP architecture, experiments are performed on two public person reidentification benchmarks, Market-1501 [1] and CUHK03 [2]. The Market-1501 [1] benchmark contains 32,668 person images of 1,501 identities, captured by 5 high-resolution cameras and 1 low-resolution camera. The Deformable Part Model (DPM) [38] is used in this dataset to obtain person bounding boxes, which produces several misaligned bounding boxes. We use 751 identities with 12,936 person images to train our model. For testing, the person images of the remaining 750 identities are divided into a gallery set of 19,734 images and a query set of 3,368 images. These images are not used during training. The CUHK03 [2] dataset consists of 13,164 person images of 1,467 identities captured by 6 cameras. In this dataset, each person is recorded from 2 different views, and on average each identity has 4.48 images per view.

To measure the performance of our method, we adopt two popular metrics: the Cumulative Matching Characteristic (CMC) and mean average precision (mAP). We report rank-1, rank-5, rank-10 and mAP results, all under the single-query setting.
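For readers unfamiliar with these metrics, the following sketch shows how rank-k accuracy and mAP can be computed from ranked retrieval lists. It is an illustration under stated assumptions, not the evaluation code used in this paper: each query is assumed to come with a list of booleans marking which gallery images, sorted from most to least similar, share the query identity, and any protocol-specific filtering of gallery images is assumed to have been done beforehand. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def rank_k_hit(matches: List[bool], k: int) -> bool:
    """True if a correct match appears among the top-k ranked gallery images."""
    return any(matches[:k])


def average_precision(matches: List[bool]) -> float:
    """Mean of the precision values at each rank where a correct match occurs."""
    hits = 0
    precisions = []
    for rank, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def evaluate(
    ranked_matches: List[List[bool]], ks: Sequence[int] = (1, 5, 10)
) -> Tuple[Dict[int, float], float]:
    """CMC rank-k accuracy and mAP, averaged over all queries."""
    n = len(ranked_matches)
    cmc = {k: sum(rank_k_hit(m, k) for m in ranked_matches) / n for k in ks}
    mean_ap = sum(average_precision(m) for m in ranked_matches) / n
    return cmc, mean_ap


# Two toy queries with three ranked gallery images each.
ranked = [
    [True, False, True],   # query 1: correct matches at ranks 1 and 3
    [False, True, False],  # query 2: correct match at rank 2
]
cmc, mean_ap = evaluate(ranked)
print(cmc, mean_ap)
```

In this toy case only the first query has a correct match at rank 1, so rank-1 accuracy is 0.5, while both queries are matched within the top 5.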

4.2. Training the Network

Keras, a deep learning framework, is used to implement our BMPP architecture for these experiments. To improve the resolution of images in the benchmark datasets, we preprocess them with the residual dense network (RDN) [39]. The RDN is a super-resolution CNN that generates a high-resolution image from a low-resolution input. We thus obtain person images magnified 4 times via RDN, and the proposed model is trained on these higher-resolution images to achieve better performance. In short, all experiments in this article are conducted on the preprocessed person images.

To train the BMPP architecture proposed in this work, we first train one of the branches of BMPP, namely human classification model, on full person images. We train the human classification model using input images of size

Then, we train the other branch of BMPP, the human parsing model, on the large Active Template Regression (ATR) dataset [42] for human parsing. The ATR dataset [42] contains 17,709 images in total, with 17 semantic labels. We use 12,000 images for training, 5,000 images for validation and 709 images for testing, with a mini-batch size of 12. To parse 5 human body parts for person reidentification, we regroup the 17 semantic labels. The original head parts (hat, hair, sunglasses, face and scarf) are grouped into the head class. The original upper-body parts (upper-clothes, left-arm and right-arm) are labeled as the upper-body class. The original lower-body regions (skirt, pants, dress, belt, left-leg and right-leg) are merged into the lower-body class. The original left-shoe and right-shoe classes are grouped into the shoe class. To reduce the impact of bags, we put bags in the same class as the background. We train the human parsing model using input images of size

Figure 3. Examples of parsing masks generated by our human parsing model. The original person images are shown in the first row, and their parsing masks are shown in the second row. The masks in the third row are generated from the original person images after super-resolution.
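The regrouping of the ATR labels described above amounts to a lookup table. The sketch below works on label names as they are listed in the text; the integer ids used in the dataset files are not assumed, and `remap_labels` is a hypothetical helper rather than part of the authors' code.

```python
from typing import Dict, List

# Grouping of the 17 ATR label names (plus background) into coarse classes.
ATR_TO_PART: Dict[str, str] = {
    "hat": "head",
    "hair": "head",
    "sunglasses": "head",
    "face": "head",
    "scarf": "head",
    "upper-clothes": "upper-body",
    "left-arm": "upper-body",
    "right-arm": "upper-body",
    "skirt": "lower-body",
    "pants": "lower-body",
    "dress": "lower-body",
    "belt": "lower-body",
    "left-leg": "lower-body",
    "right-leg": "lower-body",
    "left-shoe": "shoes",
    "right-shoe": "shoes",
    "bag": "background",  # bags are merged into the background
    "background": "background",
}


def remap_labels(label_map: List[List[str]]) -> List[List[str]]:
    """Replace every fine-grained ATR label in a 2-D label map with its coarse class."""
    return [[ATR_TO_PART[name] for name in row] for row in label_map]


# Toy 2 x 2 label map (illustrative only).
coarse = remap_labels([["hat", "bag"], ["pants", "left-shoe"]])
print(coarse)
```

The fifth region used by BMPP, foreground, is not part of this table. One plausible reading, stated here as an assumption, is that it covers every pixel whose coarse class is not background.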

In both training stages, the learning rate is initially set to 0.001 and is reduced by a factor of 0.2 when the validation loss stops declining. Early stopping with a patience of 10 is applied when the validation accuracy stops improving. After the human classification model and the human parsing model are trained, the BMPP architecture that combines them is fine-tuned on the Market-1501 and CUHK03 datasets separately.
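The schedule above can be sketched as a small state machine. This is an illustrative reimplementation in plain Python, not the authors' training code: it assumes that "reduced by a factor of 0.2" means multiplying the learning rate by 0.2, and the number of stalled epochs tolerated before a reduction (`lr_patience`) is a hypothetical value because the text does not state it. In Keras, the same behaviour is normally obtained with the `ReduceLROnPlateau` and `EarlyStopping` callbacks.

```python
class PlateauSchedule:
    """Reduce the learning rate when validation loss stalls; stop when accuracy stalls."""

    def __init__(self, lr=0.001, factor=0.2, lr_patience=3, stop_patience=10):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience      # assumed value, not given in the text
        self.stop_patience = stop_patience  # patience of 10, as stated in the text
        self.best_loss = float("inf")
        self.best_acc = float("-inf")
        self.loss_wait = 0
        self.acc_wait = 0

    def step(self, val_loss: float, val_acc: float) -> bool:
        """Update the state after one epoch; return True if training should stop."""
        if val_loss < self.best_loss:
            self.best_loss, self.loss_wait = val_loss, 0
        else:
            self.loss_wait += 1
            if self.loss_wait >= self.lr_patience:
                self.lr *= self.factor  # multiply the learning rate by 0.2
                self.loss_wait = 0
        if val_acc > self.best_acc:
            self.best_acc, self.acc_wait = val_acc, 0
        else:
            self.acc_wait += 1
        return self.acc_wait >= self.stop_patience


# One improving epoch followed by ten epochs without any improvement.
schedule = PlateauSchedule()
first = schedule.step(val_loss=1.0, val_acc=0.5)
stops = [schedule.step(val_loss=1.0, val_acc=0.5) for _ in range(10)]
print(schedule.lr, stops[-1])
```

With these settings the learning rate is cut three times during the stalled epochs, and the stop signal is raised on the tenth epoch without an accuracy improvement.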

4.3. The Performance of BMPP

We further study the performance of various baseline models. Table 1 shows the results achieved by different baseline architectures on the original-resolution person benchmarks, and Table 2 shows the results on the super-resolution person benchmarks. From Tables 1 and 2, we observe that the baseline models, owing to the representation fusion strategy, outperform most of the current state-of-the-art. The results show that super-resolution preprocessing greatly improves model performance. Furthermore, Xception [22] is highly competitive with ResNet-152 [35] despite being shallower. It also considerably outperforms Inception-V3 [34] and ResNet-50 [35], which have almost the same depth as Xception. Additionally, the sizes of Xception [22], Inception-V3 [34], ResNet-50 [35] and ResNet-152 [35] are 88MB, 92MB, 98MB and 232MB, respectively, so Xception [22] is the lightest among these models. Therefore, considering both computation and performance, we adopt Xception as our backbone architecture.

| Dataset | Model | mAP (%) | Rank-1 (%) | Rank-10 (%) |
|---|---|---|---|---|
| Market-1501 | Xception | 65.33 | 85.34 | 98.35 |
| Market-1501 | Inception-V3 | 62.32 | 84.30 | 96.20 |
| Market-1501 | ResNet-50 | 58.35 | 73.34 | 95.98 |
| Market-1501 | ResNet-152 | 65.54 | 85.89 | 98.43 |
| CUHK03 | Xception | – | 86.63 | 98.12 |
| CUHK03 | Inception-V3 | – | 85.09 | 97.35 |
| CUHK03 | ResNet-50 | – | 84.45 | 94.04 |
| CUHK03 | ResNet-152 | – | 86.85 | 98.98 |

mAP, mean average precision.

Table 1. The performance of original-resolution person reidentification benchmarks on various baseline models.

| Dataset | Model | mAP (%) | Rank-1 (%) | Rank-10 (%) |
|---|---|---|---|---|
| Market-1501 | Xception | 74.85 | 90.05 | 98.95 |
| Market-1501 | Inception-V3 | 73.06 | 88.87 | 97.00 |
| Market-1501 | ResNet-50 | 66.32 | 85.10 | 95.95 |
| Market-1501 | ResNet-152 | 72.95 | 88.33 | 98.88 |
| CUHK03 | Xception | – | 90.87 | 98.80 |
| CUHK03 | Inception-V3 | – | 89.91 | 98.41 |
| CUHK03 | ResNet-50 | – | 85.88 | 99.19 |
| CUHK03 | ResNet-152 | – | 91.01 | 99.27 |

mAP, mean average precision.

Table 2. The performance of super-resolution person reidentification benchmarks on various baseline models.

Table 3 compares the performance of the BMPP architecture with that of the Xception baseline model. From Table 3, we observe that the performance of the BMPP architecture is superior to that of the Xception model, regardless of whether the image-flipped technique is adopted. The BMPP architecture with and without image-flipped technique is denoted as BMPP

| Dataset | Model | mAP (%) | Rank-1 (%) | Rank-10 (%) |
|---|---|---|---|---|
| Market-1501 | Xception | 74.85 | 90.05 | 98.95 |
| Market-1501 | BMPP (without image flipping) | 80.02 | 91.32 | 97.75 |
| Market-1501 | BMPP (with image flipping) | 81.50 | 93.04 | 98.01 |
| CUHK03 | Xception | – | 90.87 | 98.80 |
| CUHK03 | BMPP (without image flipping) | – | 85.88 | 98.79 |
| CUHK03 | BMPP (with image flipping) | – | 92.67 | 98.95 |

BMPP, body multiple parts parsing; mAP, mean average precision.

Table 3. The performance of BMPP architecture on the person reidentification benchmarks.

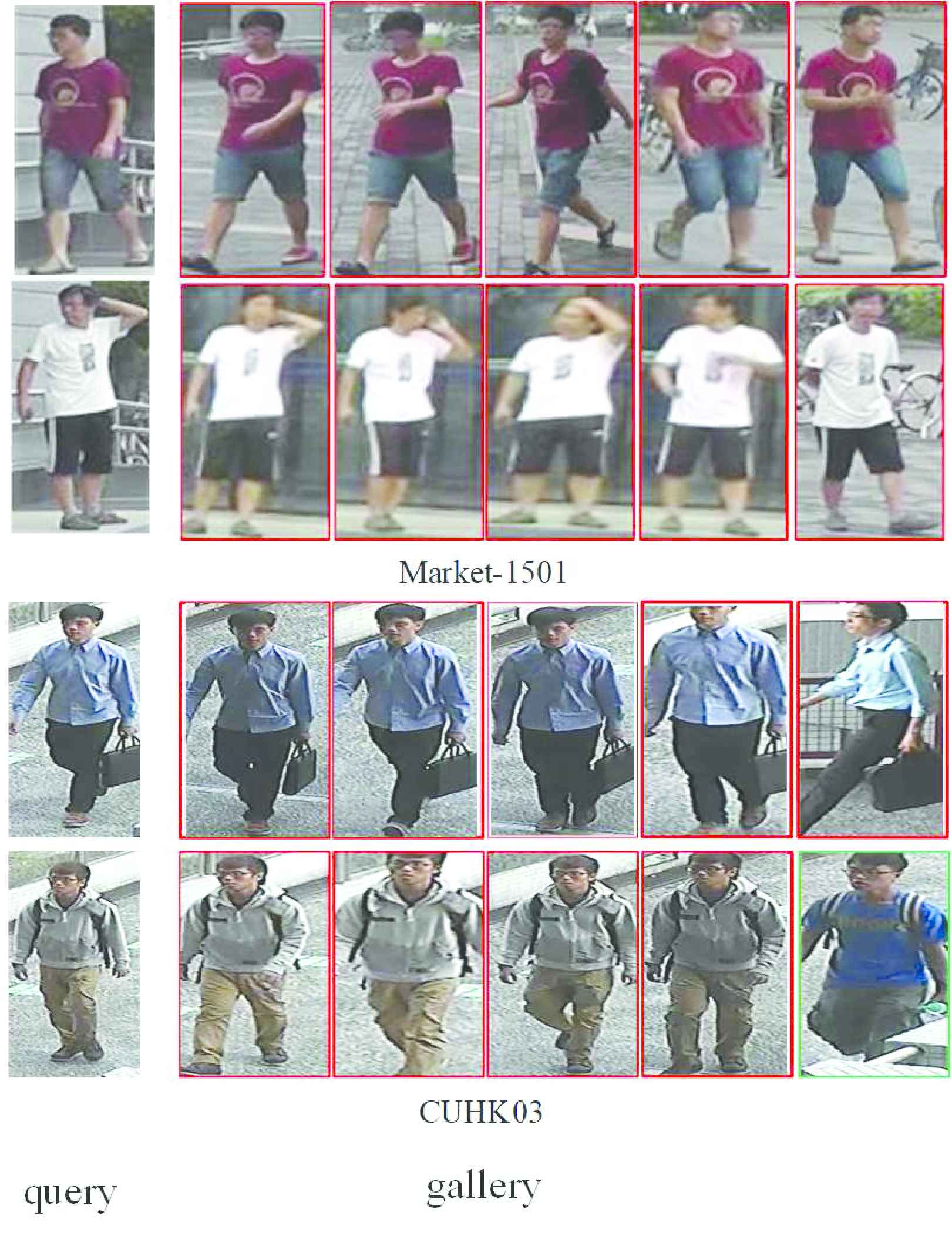

Figure 4 shows the top-5 retrieval results achieved by the BMPP architecture. We list four query person images from Market-1501 and CUHK03, together with the gallery images retrieved for each query. As shown in Figure 4, a red rectangle marks a correctly retrieved image, whereas a green rectangle marks an incorrect match. The top-5 retrieval results for the two person images from Market-1501 are all correct. However, there is a matching error for one person image from CUHK03. Judging from the picture content, the two pedestrians are very similar in appearance, which causes BMPP to mismatch images of different pedestrians.

Figure 4. The top-5 ranking list for the query images on Market-1501 and CUHK03 by our body multiple parts parsing (BMPP). The first column is query images. The gallery images are in the second to fifth column.

4.4. Comparison with the State-of-the-Art

Our proposed BMPP method is compared with the current state-of-the-art on the CUHK03 and Market-1501 benchmarks, as shown in Table 4. With our super-resolution preprocessing and representation fusion strategy, the BMPP architecture outperforms most state-of-the-art methods by a large margin. In addition, our model performs better than SPReID [21] on Market-1501, but not as well as SPReID [21] on CUHK03. We attribute this to the fact that SPReID [21] is trained on 10 different benchmarks and then fine-tuned on the Market-1501 and CUHK03 benchmarks, which lets it slightly outperform our model.

| Dataset | Model | mAP (%) | Rank-1 (%) | Rank-5 (%) | Rank-10 (%) |
|---|---|---|---|---|---|
| Market-1501 | BoW+Kissme [1] | 20.80 | 44.40 | 63.90 | 72.20 |
| Market-1501 | Li et al. [18] | 57.50 | 80.30 | – | – |
| Market-1501 | MGCAM [33] | 74.30 | 83.80 | – | – |
| Market-1501 | HA-CNN [28] | 75.70 | 91.20 | – | – |
| Market-1501 | AlignedReID [23] | 79.40 | 91.00 | 96.30 | – |
| Market-1501 | SPReID [21] | 81.34 | 92.54 | 97.15 | 98.10 |
| Market-1501 | BMPP | 81.50 | 93.04 | 97.35 | 98.01 |
| CUHK03 | HA-CNN [28] | – | 44.40 | – | – |
| CUHK03 | MGCAM [33] | – | 50.14 | – | – |
| CUHK03 | FT-JSTL+DGD [17] | – | 75.30 | – | – |
| CUHK03 | AlignedReID [23] | – | 88.30 | 97.10 | 98.50 |
| CUHK03 | HydraPlus [29] | – | 91.80 | 98.40 | 99.10 |
| CUHK03 | SPReID [21] | – | 93.89 | 98.76 | 99.51 |
| CUHK03 | BMPP | – | 92.67 | 97.65 | 98.95 |

BMPP, body multiple parts parsing; mAP, mean average precision; MGCAM, mask-guided contrastive attention model; HA-CNN, harmonious attention convolutional neural network.

Table 4. The performance comparison of our model with the state-of-the art.

5. CONCLUSION

In this paper, we propose a human BMPP architecture that includes both a human classification model and a human parsing model. The human classification model extracts global-level representations of person images, and the human parsing model extracts local-level representations. In addition, a super-resolution preprocessing method is adopted to improve the resolution of person benchmarks. Experimental results show that the human parsing model can accurately locate various body parts in super-resolution person images. Furthermore, we propose a representation fusion strategy to make the representations more distinguishable. Through an array of experiments, we demonstrate that our architecture is competitive with most state-of-the-art methods.

At the same time, our model still has several problems when it explores the local cues of person images. Although the low-resolution person images have been preprocessed using the super-resolution method, BMPP still cannot always locate the 5 body parts accurately. In the future, we will adopt attention mechanisms (e.g., spatial attention and channel attention) to study the person reidentification task. The attention mechanism will be embedded in the backbone to focus on local body regions of interest, which can efficiently capture more robust and discriminative representations.

COMPETING INTERESTS

The authors declare that they have no competing interests.

AUTHORS' CONTRIBUTIONS

Sibo Qiao designed the framework of the work; Shanchen Pang wrote the manuscript; Xue Zhai carried out the data experiments; Min Wang and Shihang Yu completed the data analysis and interpretation; Tong Ding collected the data; Xiaochun Cheng was responsible for the literature search and research design. All authors read and approved the final manuscript.

ACKNOWLEDGMENTS

This work was supported in part by the Major Science and Technology Innovation Project of Shandong Province (2019TSLH0214), the Tai Shan Industry Leading Talent Project (tscy20180416) and the National Natural Science Foundation of China under Grant 61873281.

REFERENCES

Cite this article

Sibo Qiao, Shanchen Pang, Xue Zhai, Min Wang, Shihang Yu, Tong Ding, Xiaochun Cheng. Human Body Multiple Parts Parsing for Person Reidentification Based on Xception. International Journal of Computational Intelligence Systems, vol. 14, no. 1, pp. 482-490, 2020. ISSN 1875-6883. https://doi.org/10.2991/ijcis.d.201222.001