Using CFW-Net Deep Learning Models for X-Ray Images to Detect COVID-19 Patients

Wei Wang, Hao Liu1, Ji Li1, Hongshan Nie2, 3, *, Xin Wang1, *

- DOI

- 10.2991/ijcis.d.201123.001

- Keywords

- COVID-19; Deep learning; CFW-Net; Convolutional neural network; Chest X-ray images

- Abstract

COVID-19 is an infectious disease caused by the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). So far, more than 20 million people have been infected. With the rapid spread of COVID-19 around the world, most countries face a shortage of medical resources. Reverse transcription polymerase chain reaction (RT-PCR), currently the most widely used detection technology, is expensive, time-consuming and of low sensitivity. These problems prompted us to propose a deep learning model to help radiologists and clinicians detect COVID-19 cases from chest X-rays. According to the characteristics of chest X-ray images, we designed the channel feature weight extraction (CFWE) module and proposed a new convolutional neural network, CFW-Net, based on it. Meanwhile, to improve recognition efficiency, the network is evaluated with three alternative classifiers: a single fully connected (FC) layer, a global average pooling fully connected (GFC) module and a point convolution global average pooling (CGAP) module. The latter two have fewer parameters, less computation and better real-time performance. We evaluated CFW-Net on two open-source datasets. The experimental results show that the overall accuracy of our model CFW-Net56-GFC is 94.35%, and that both its accuracy and sensitivity for the COVID-19 class are 100%. Compared with other methods, our method detects COVID-19 more accurately.

- Copyright

- © 2021 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

COVID-19 is a novel coronavirus pneumonia discovered at the end of 2019, and the disease spread throughout the world in a very short time. On March 12, 2020, the World Health Organization announced that COVID-19 can be characterized as a pandemic. By 10:30 Beijing time on August 19, 2020, the total number of confirmed cases in the world was 22,221,289, and the number of recovered cases was 14,968,242. The death toll was 779,698, a death rate as high as 3.5%.

The novel coronavirus pneumonia is an acute infectious pneumonia caused by a new type of coronavirus that had never been found in humans before. This pneumonia was named COVID-19 by the World Health Organization. The common symptoms of infected people are fever, cough, sore throat and difficulty breathing [1]. At present, there is no vaccine or medicine for COVID-19. Therefore, it is necessary to diagnose infected people in time to prevent the spread of the virus. The most widely used technology for COVID-19 detection is reverse transcription polymerase chain reaction (RT-PCR). However, RT-PCR kits are expensive, and it takes 6 to 9 hours to confirm infection in a patient [2]. Because of its low sensitivity, RT-PCR also has a high false-negative rate. To address this problem, chest X-ray and computed tomography (CT) are used to detect and diagnose COVID-19 [3].

For example, Shui-Hua Wang et al. [4] proposed a novel chest CT-based method for the automatic detection of COVID-19. The algorithm is a hybrid method composed of wavelet Renyi entropy, a feedforward neural network and a proposed three-segment biogeography-based optimization (3SBBO) algorithm. In addition, convolutional neural networks (CNNs) are widely used in the diagnosis of COVID-19. Mei et al. [5] used CNNs and traditional machine learning algorithms to combine chest CT images with clinical information to quickly diagnose COVID-19-positive patients. Rajaraman et al. [6] designed iteratively pruned deep learning ensembles for COVID-19 detection in chest X-rays and achieved good results. In addition to CT and X-ray images, lung ultrasound (LUS) imaging can also be used to detect COVID-19. Leonardo et al. [7] proposed an automatic and unsupervised method for the detection and localization of the pleural line in LUS data based on the hidden Markov model and the Viterbi algorithm, and used a support vector machine (SVM) for classification.

Because X-ray machines are available in most hospitals and are cheaper than CT scanners, X-ray images are more widely used [8]. COVID-19 presents some radiological features that can be detected in chest X-rays, and radiologists are required to analyze these characteristics. However, this is a time-consuming and error-prone task, so it is necessary to detect COVID-19 in X-ray images automatically.

With the development of artificial intelligence, the application range of deep learning methods is becoming wider and wider. For instance, Wang et al. [9] proposed an improved deep learning approach for detecting colonic polyp images and achieved good results. CNNs have achieved great success in the field of computer vision, especially in image classification. We can therefore analyze X-rays automatically with CNN-based image classification algorithms, which reduces analysis time and improves diagnosis efficiency. For example, Khan et al. proposed CoroNet [10], a CNN based on the Xception [11] structure, which achieved good performance on the COVID-19 chest X-ray image classification task. Rahimzadeh [12] and others designed a network based on Xception and ResNet50V2, which improved performance by utilizing the output features of the two networks. The network achieved good results on a dataset containing three types of chest X-ray images: COVID-19, pneumonia and normal. Mahmud et al. proposed the CovXNet [13] model and introduced dilated convolution. Based on this, two modules, a residual unit and a shifter unit, were designed and used as the main components of CovXNet. This model achieved good results in the classification of chest X-ray images. Ozturk et al. proposed the DarkCovidNet [14] network, which builds on the DarkNet-19 network and achieved good classification accuracy.

In this paper, we use a deep learning approach to classify chest X-ray images. We draw inspiration from other studies. For example, Wang et al. [15] designed SSF-Net to solve the feature extraction problem of sparse data, and IVGG models [16] improved the network performance. Wang et al. [17] proposed an image target recognition method based on mixed features and adaptive weighted joint sparse representation. This method combines traditional methods with CNNs and achieved good results.

Unlike common image classification tasks, COVID-19 chest X-ray image data is very scarce. We address this problem by using transfer learning and data augmentation. In addition, because chest X-ray images have high inter-class similarity and low intra-class variance, models tend toward bias and overfitting, which reduces performance and generalization and further increases the difficulty of training. To solve these problems, a channel feature weight extraction (CFWE) module is designed. Based on this module, a new CNN structure, CFW-Net, is proposed, which has strong classification ability for chest X-ray images. In addition, by improving the classifier of the network, we reduce the number of parameters and the amount of computation, and improve the generalization ability of the model. Compared with other deep learning methods, the method in this paper has higher classification accuracy and stronger generalization ability.

2. RELATED WORK

2.1. Convolutional Neural Networks

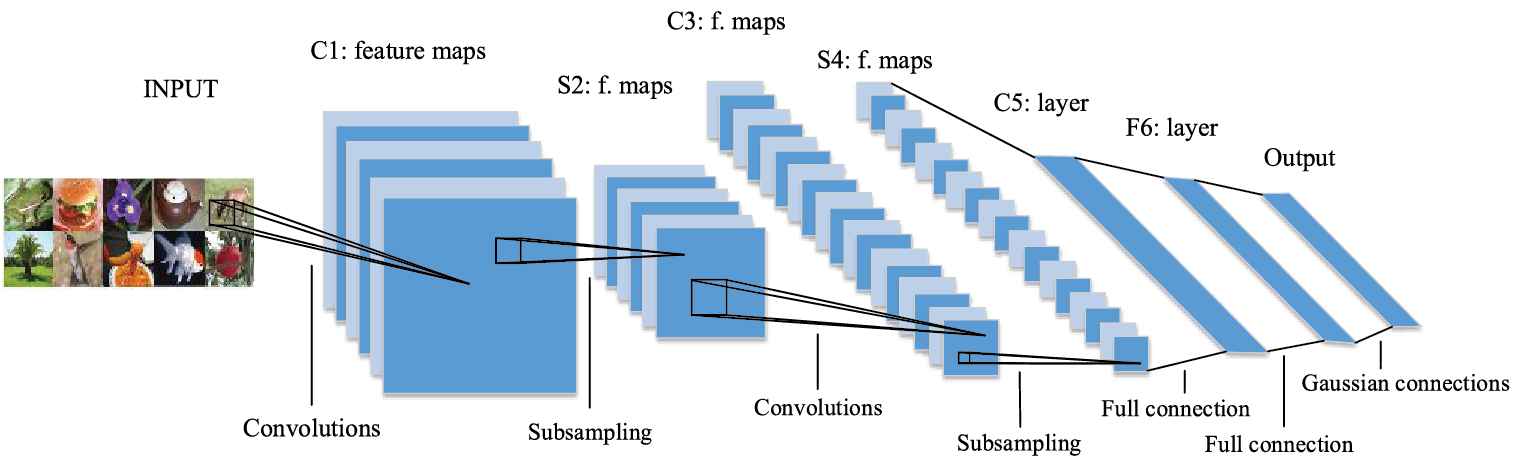

Deep CNNs have been widely used in the field of computer vision in recent years, and their basic structure is shown in Figure 1. Wang et al. analyzed the application and development of CNNs in detail [18]. In 2012, Krizhevsky et al. proposed AlexNet [19], which applied then-new techniques such as ReLU [20], Dropout [21] and LRN [20] and, for the first time, used GPUs to accelerate training; this alleviated the problem of gradient dispersion (vanishing gradients) and enhanced the generalization ability of the model. In 2014, Simonyan and Zisserman [22] proposed the visual geometry group networks (VGGNets), which mainly explored the relationship between the depth and performance of CNNs. By repeatedly stacking small 3 × 3 convolution kernels and 2 × 2 max pooling layers, CNNs 16 to 19 layers deep were successfully built, which significantly improved performance. In the same year, Szegedy et al. proposed a new deep learning model, GoogLeNet [23]. As network size increases, the number of parameters grows, and the network becomes prone to overfitting and consumes a lot of computing resources. Szegedy et al. proposed the inception module, which improves the utilization of computing resources inside the network and alleviates overfitting to a certain extent. As network depth increases, network degradation occurs: once the network converges, accuracy saturates or even declines. Kaiming He et al. proposed the residual network (ResNet) [24], which solved the degradation problem by using skip connections. Wang et al. proposed a deep residual dense network based on ResNet for recognizing super-resolution images with high accuracy [25].

The basic structure of convolution neural network [29].

Inspired by the residual network, Gao Huang et al. proposed the dense convolutional network (DenseNet) [26]. DenseNet uses a dense connection mechanism in which all layers are interconnected. It directly concatenates feature maps from different layers, so each layer can reuse the features of the others; this is the main difference between DenseNet and ResNet. On the basis of MobileNet [27], Wang et al. introduced the dense blocks of DenseNet and constructed Dense-MobileNet [28], which further reduced the amount of computation and the number of parameters while achieving higher accuracy.

2.2. CFWE Module and CFWE-Nets

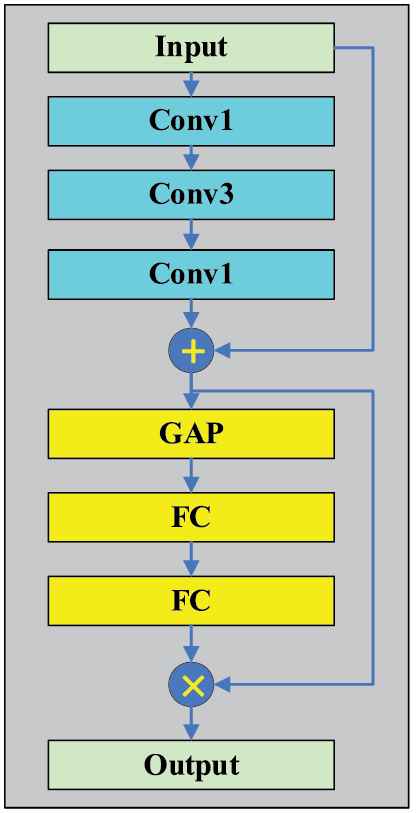

In order to reduce the pressure on medical institutions, we use a CNN to learn image features adaptively and classify chest X-ray images, so as to improve the efficiency of doctors in diagnosing COVID-19. However, chest X-ray images have high inter-class similarity and low intra-class variance, which leads to model bias and overfitting, resulting in reduced performance and generalization. Therefore, we design the CFWE module shown in Figure 2. In Figure 2, “Conv7,” “Conv5,” “Conv3” and “Conv1” represent convolutional layers with filter sizes of 7 × 7, 5 × 5, 3 × 3 and 1 × 1, “GAP” represents a global average pooling layer and “FC” represents a fully connected layer.

Structure of channel feature weight extraction (CFWE).

The CFWE module contains convolution kernels of multiple sizes. Its skip connection path is composed of two “Conv1” layers and one “Conv3” layer. Skip connections mitigate the problem of network degradation to a certain extent. The first “Conv1” reduces the dimension and the second “Conv1” restores it, which mainly serves to reduce the number of parameters. These are followed by a pooling layer and fully connected layers connected in series: one “GAP” layer and two “FC” layers. GAP compresses the feature map of each channel into a single global feature, the first FC layer reduces the dimensionality, and the second FC layer restores it. In this way, our model can learn a weight coefficient for each channel. During feature extraction, these weight coefficients help the model emphasize the more important channel features, suppress unimportant ones, and enhance the feature extraction ability of the network.

Based on the CFWE module, we propose three CNN structures with CFWE: CFW-Net56, CFW-Net107 and CFW-Net158. A CFWE structure has 5 layers, where “Conv” represents a composite structure including “convolution,” “batch normalization” and “activation function.” The network structure is shown in Table 1.
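The channel-weighting branch described above (GAP, an FC layer that reduces the dimension, an FC layer that restores it, then per-channel scaling) can be illustrated with a minimal pure-Python sketch. The activation functions are not stated in the text; the ReLU and sigmoid used here are assumptions borrowed from common channel-attention designs, and all function names and the toy weights are hypothetical. How the weighted features are merged with the skip connection path is not shown.

```python
import math

def global_average_pool(feature_maps):
    """Compress each channel (a 2-D list) into one mean value."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
            for ch in feature_maps]

def fully_connected(vector, weights, biases):
    """Dense layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def channel_weights(feature_maps, w_reduce, b_reduce, w_restore, b_restore):
    """GAP -> FC (reduce) -> FC (restore) -> one weight per channel."""
    squeezed = global_average_pool(feature_maps)
    # ReLU after the reducing layer is an assumption.
    hidden = [max(0.0, h) for h in fully_connected(squeezed, w_reduce, b_reduce)]
    logits = fully_connected(hidden, w_restore, b_restore)
    # Sigmoid gating into (0, 1) is an assumption.
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

def reweight(feature_maps, weights):
    """Scale every value of a channel by that channel's weight."""
    return [[[w * v for v in row] for row in ch]
            for ch, w in zip(feature_maps, weights)]

# Toy input: two 2 x 2 channels, reduced to one hidden unit and restored to two.
feature_maps = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [0.0, 0.0]]]
weights = channel_weights(
    feature_maps,
    w_reduce=[[0.5, 0.5]], b_reduce=[0.0],
    w_restore=[[1.0], [-1.0]], b_restore=[0.0, 0.0],
)
scaled = reweight(feature_maps, weights)
```

With these toy weights the first channel receives a larger coefficient than the second, so its features are emphasized while the other channel is suppressed.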

| Stage | CFW-Net56 | CFW-Net107 | CFW-Net158 |
|---|---|---|---|
| Input convolution | Conv7-64, Stride:2 | Conv7-64, Stride:2 | Conv7-64, Stride:2 |
| Pooling | 3 × 3 Maxpool, Stride:2 | 3 × 3 Maxpool, Stride:2 | 3 × 3 Maxpool, Stride:2 |
| Block 1 | [Conv1-64, Conv3-64, Conv1-256] ×2 | [Conv1-64, Conv3-64, Conv1-256] ×2 | [Conv1-64, Conv3-64, Conv1-256] ×2 |
| CFWE | CFWE-256 | CFWE-256 | CFWE-256 |
| Block 2 | [Conv1-128, Conv3-128, Conv1-512] ×3 | [Conv1-128, Conv3-128, Conv1-512] ×3 | [Conv1-128, Conv3-128, Conv1-512] ×7 |
| CFWE | CFWE-512 | CFWE-512 | CFWE-512 |
| Block 3 | [Conv1-256, Conv3-256, Conv1-1024] ×5 | [Conv1-256, Conv3-256, Conv1-1024] ×22 | [Conv1-256, Conv3-256, Conv1-1024] ×35 |
| CFWE | CFWE-1024 | CFWE-1024 | CFWE-1024 |
| Block 4 | [Conv1-512, Conv3-512, Conv1-2048] ×3 | [Conv1-512, Conv3-512, Conv1-2048] ×3 | [Conv1-512, Conv3-512, Conv1-2048] ×3 |
| Output | Classifier, Softmax | Classifier, Softmax | Classifier, Softmax |

CFW-Net configuration.
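The depth in each model name can be related to the repeat counts in Table 1 with a short calculation. The counting rule below is an assumption, not something the text states: one input convolution, three convolutions per repeated block, five layers per CFWE module (as given above), and one classifier layer. Under that rule the totals match the names 56, 107 and 158.

```python
# Repeat counts of the four convolution blocks, read from Table 1.
BLOCK_REPEATS = {
    "CFW-Net56": (2, 3, 5, 3),
    "CFW-Net107": (2, 3, 22, 3),
    "CFW-Net158": (2, 7, 35, 3),
}

# Assumed counting rule (hypothetical, chosen to match the model names).
INPUT_CONV_LAYERS = 1   # Conv7-64
CONVS_PER_BLOCK = 3     # Conv1, Conv3, Conv1
CFWE_MODULES = 3        # CFWE-256, CFWE-512, CFWE-1024
LAYERS_PER_CFWE = 5     # stated in the text
CLASSIFIER_LAYERS = 1   # one FC layer or one point convolution

def count_layers(repeats):
    """Total weighted layers under the assumed counting rule."""
    return (INPUT_CONV_LAYERS
            + CONVS_PER_BLOCK * sum(repeats)
            + CFWE_MODULES * LAYERS_PER_CFWE
            + CLASSIFIER_LAYERS)

depths = {name: count_layers(repeats) for name, repeats in BLOCK_REPEATS.items()}
```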

Traditional networks such as AlexNet and VGGNets use three fully connected (FC) layers as the classifier, which contain a large number of parameters and have extremely high memory requirements. We therefore use one FC layer instead of three as our classifier.

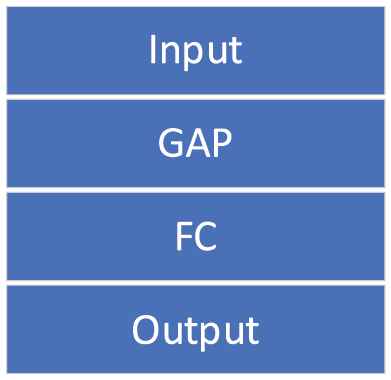

In addition, we introduced the GAP method proposed by Lin et al. [30]. Because the number of features output by the convolutional layers is extremely large, using a single fully connected layer as the classifier still leads to an excessive number of parameters. So we first use a GAP layer to reduce the feature map size to 1 × 1, and then use a fully connected layer for classification, which greatly reduces the number of parameters. This classifier structure is shown in Figure 3. We use “GFC” to denote the structure in the figure.

Structure of GFC.

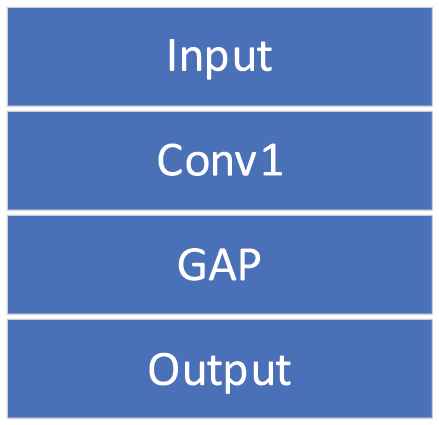

Besides, according to the characteristics of the “GAP” structure, we use a 1 × 1 point convolution layer to reduce the dimension of the output features, and then use “GAP” for classification. This classifier has no fully connected layer, which further reduces the number of parameters. This classifier structure, CGAP, is shown in Figure 4.

Structure of CGAP.

The GFC module uses “GAP” and a fully connected layer for classification. “GAP” reduces the number of parameters and helps prevent overfitting. The fully connected layer can integrate local features and improve position invariance, but it has many parameters. The CGAP module uses a point convolution layer and “GAP” for classification. It has no fully connected layer, which greatly reduces the number of parameters, but the point convolution layer requires a large amount of computation. Compared with the CGAP module, the GFC module has more parameters but requires less computation.
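The trade-off between the three classifiers can be made concrete with a rough count of parameters and multiply-accumulate operations. The sketch below assumes a final feature map of 7 × 7 × 2048 (an assumption consistent with a 224 × 224 input and the last block width in Table 1, but not stated in the text) and three output classes. The function names are hypothetical, and whether the point convolution carries a bias term is also an assumption, so the small difference between GFC and CGAP parameters should not be read as exact.

```python
def fc_classifier(height, width, channels, classes):
    """Flatten the feature map and apply one fully connected layer."""
    params = height * width * channels * classes + classes
    macs = height * width * channels * classes
    return params, macs

def gfc_classifier(height, width, channels, classes):
    """Global average pooling followed by one fully connected layer."""
    params = channels * classes + classes
    macs = channels * classes  # additions inside the pooling are not counted
    return params, macs

def cgap_classifier(height, width, channels, classes, conv_bias=False):
    """1 x 1 point convolution at every position, then global average pooling."""
    params = channels * classes + (classes if conv_bias else 0)
    macs = height * width * channels * classes
    return params, macs

# Assumed final feature map size and number of classes.
SHAPE = dict(height=7, width=7, channels=2048, classes=3)

fc_params, fc_macs = fc_classifier(**SHAPE)
gfc_params, gfc_macs = gfc_classifier(**SHAPE)
cgap_params, cgap_macs = cgap_classifier(**SHAPE)

params_saved_by_gfc = fc_params - gfc_params
macs_saved_by_gfc = cgap_macs - gfc_macs
```

Under these assumptions both savings come to 294,912, which is the same order as the differences discussed for the parameter and computation comparisons.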

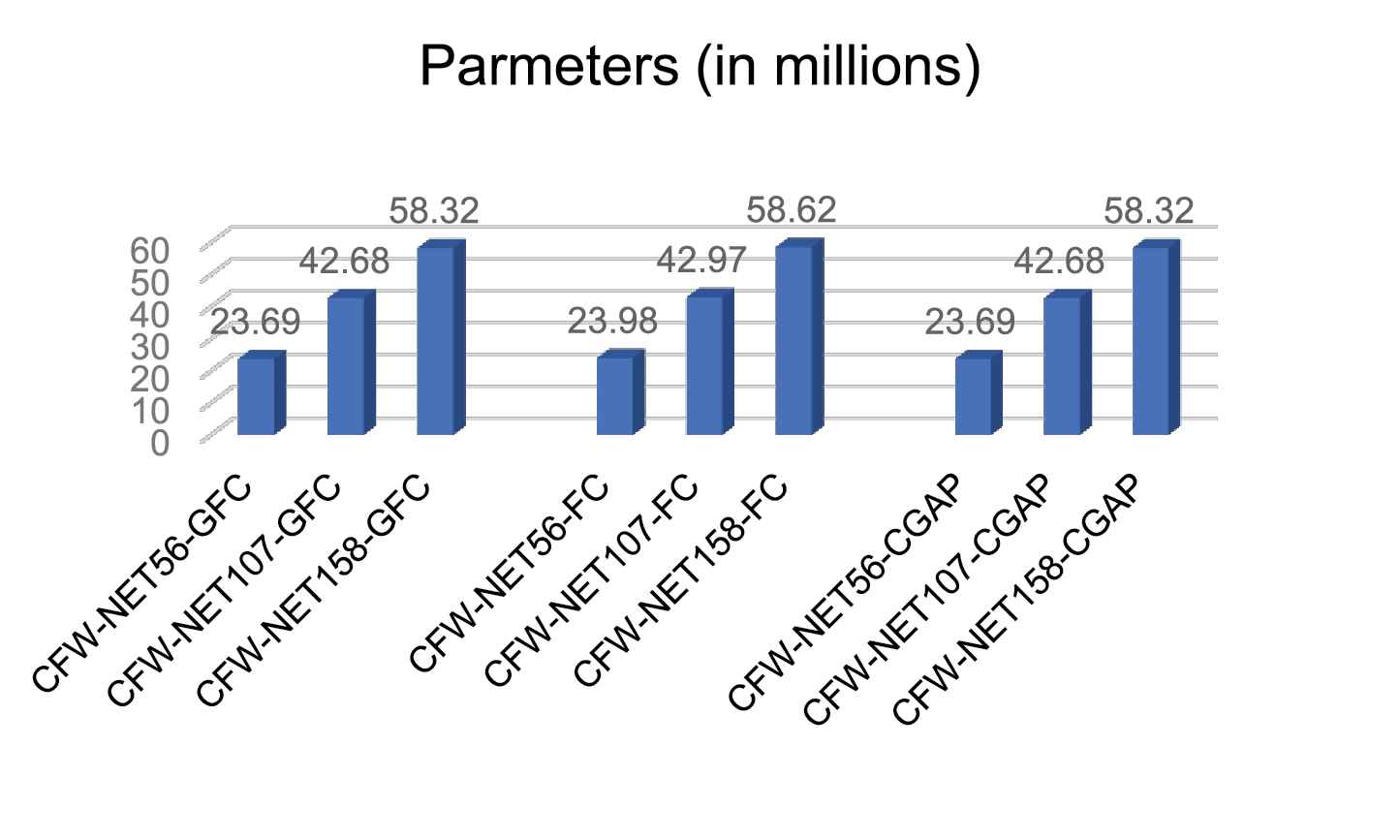

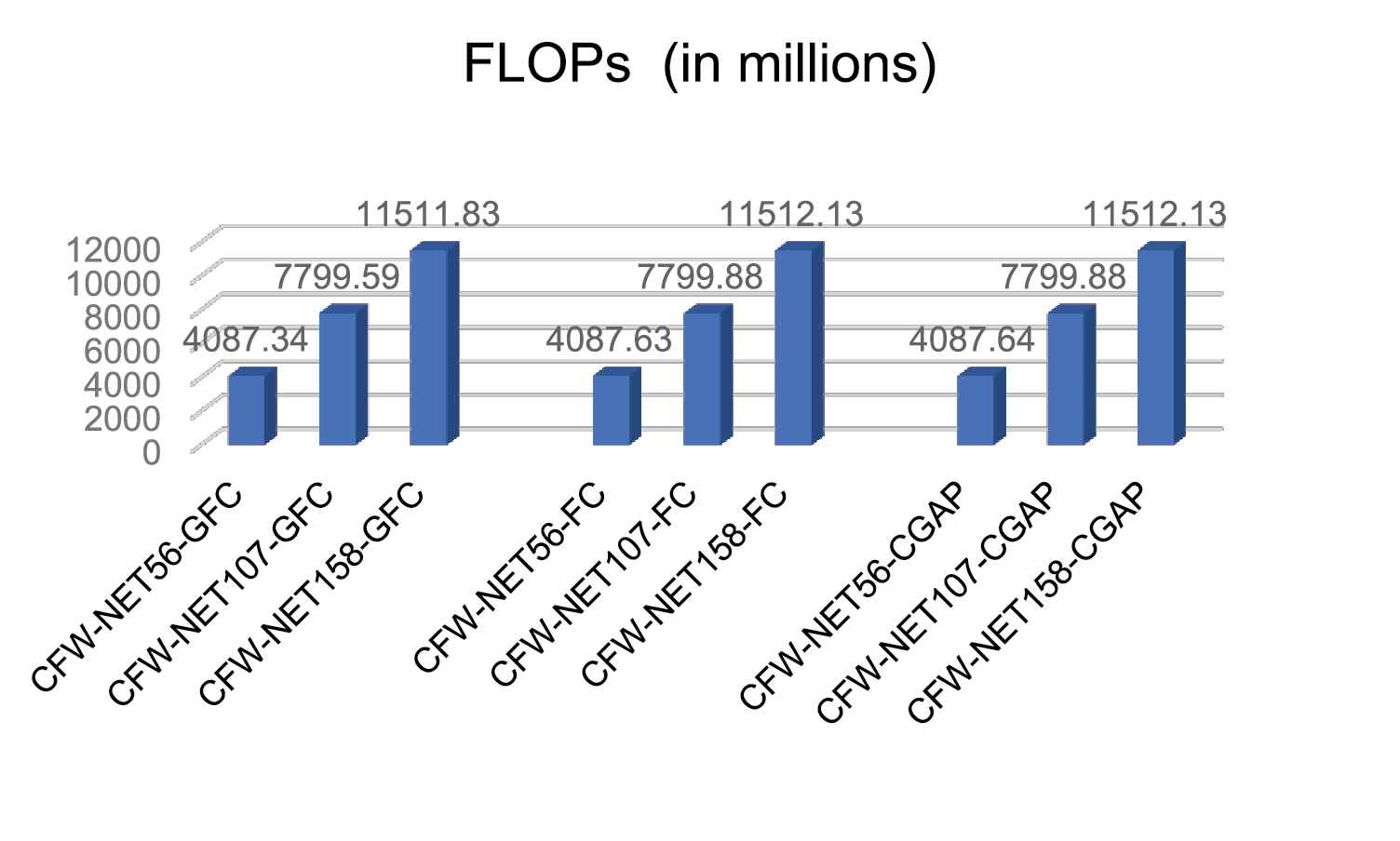

2.3. Network Complexity

In order to study the influence of network depth and classifier type on the amount of computation and the number of parameters, we compare models with different classifiers and different depths. Taking the three-class classification task as an example, the size of the output feature map of the last layer of the network is

The number of parameters for different networks is shown in Figure 5, and the amount of calculation is shown in Figure 6.

The parameters comparison of CFW-Net.

Comparison of floating-point operations (FLOPs).

It can be seen from Figure 5 that, among CFW-Nets of the same depth, the network using FC as the classifier has about 290,000 more parameters than the networks using the other classifiers. Therefore, if there is no significant difference in recognition accuracy, FC should be avoided as a classifier in order to reduce the number of model parameters. Although the number of parameters differs between classifiers, the classifier has little influence on the total parameter count of the networks in this paper. CFW-Net158-CGAP has 2.46 times as many parameters as CFW-Net56-CGAP, and CFW-Net107-CGAP has 1.8 times as many. Network depth therefore has the greatest influence on the number of model parameters. When device memory is insufficient and the hardware cannot support too many parameters, CFW-Net56 is the better choice.

It can be seen from Figure 6 that the amount of computation is greatly affected by network depth. The computation of CFW-Net158 is 1.47 times that of CFW-Net107, and the computation of CFW-Net107 is 1.91 times that of CFW-Net56. Therefore, CFW-Net56 is the most cost-effective model if there is no significant difference in accuracy.

In addition, comparing the computation and parameter counts of the networks with the three classifiers shows that CGAP and GFC save about 290,000 parameters compared with FC, and that GFC saves about 300,000 operations compared with CGAP and FC. Therefore, if there is no significant difference in accuracy, GFC is the preferred classifier.

3. EXPERIMENTS AND RESULTS ANALYSIS

3.1. Dataset

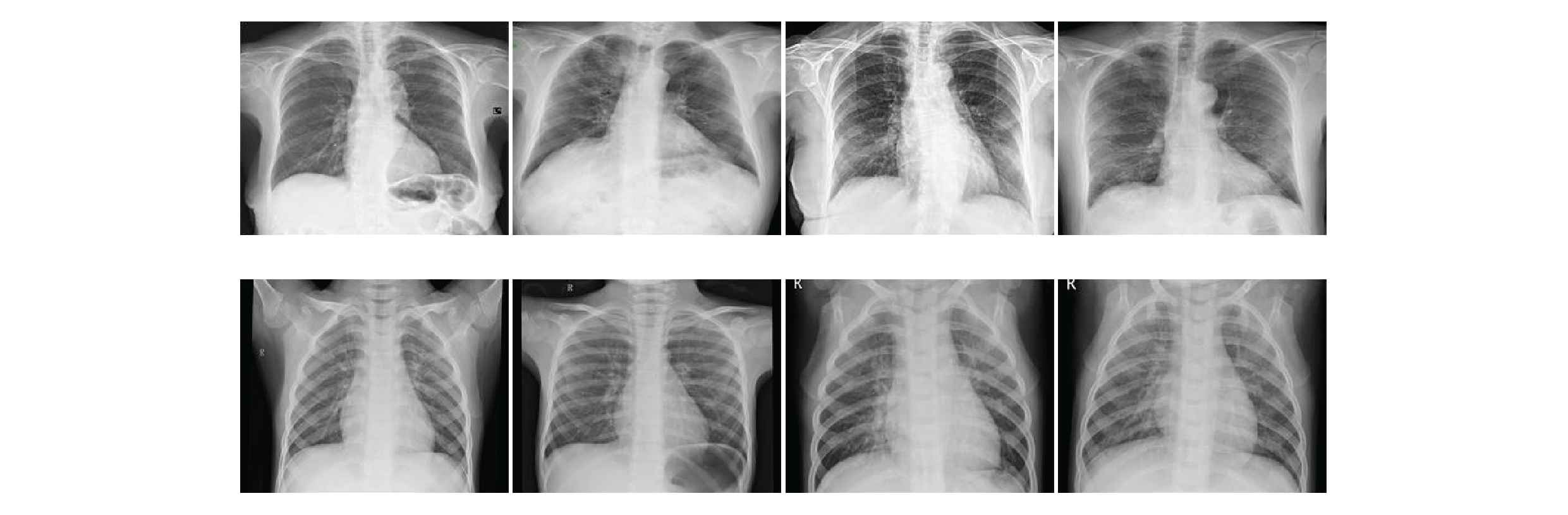

In our work we used two open-source datasets. The COVID-19 chest X-ray image dataset is from GitHub (https://github.com/ieee8023/covid-chestxray-dataset) and was prepared by Ref. [31]. This dataset consists of X-ray and CT scan images of patients infected with COVID-19 and other pneumonia. It has a total of 760 images, from which we selected 412 X-ray images of COVID-19 positive patients. The second dataset is Kaggle's chest X-ray images (pneumonia) (https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia). It consists of chest X-ray images of normal people and common pneumonia patients and contains 5863 chest X-ray images. We selected 4265 X-ray images of pneumonia and 1575 normal X-ray images from this dataset. Our training set contains 5526 X-ray images: 310 COVID-19 patient images, 1341 normal images and 3875 pneumonia images. The test set contains 726 X-ray images: 102 COVID-19 patient chest X-ray images, 234 normal chest X-ray images and 390 chest X-ray images of common pneumonia. Chest X-ray images of the various patient groups in our datasets are shown in Figure 7.

Chest X-ray images.

From the above figures, it can be seen that the chest X-ray images have high inter-class similarity and low intra-class variance, which increases the difficulty of the network to classify chest X-ray images.

3.2. Preprocessing and Experiment Setup

In the experiments, we first resize the images in both the training set and the test set to a fixed resolution of 224 × 224, and then expand the training set in the following ways, each applied with a probability of 0.75: rotate the image randomly between −10° and 10°, enlarge the image randomly by a factor of 1 to 1.1, adjust the brightness of the image randomly between 0.4 and 0.6, adjust the image contrast randomly between 0.8 and 1.25, and tilt the image randomly between −0.2 and 0.2. After this preprocessing, the amount of data in our training set is expanded by 4 times, which mitigates the shortage of chest X-ray images to a certain extent and reduces the risk of overfitting.

All experiments are carried out on the same platform and environment to ensure the credibility of comparisons between different network models. Table 2 shows the software and hardware configuration of the experimental platform. The batch size is 16 for both the training set and the test set.
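The augmentation policy above can be summarized as a small sampler that decides, per image, which transforms to apply and with what magnitude. This is only a sketch of the parameter sampling: it assumes each transform is chosen independently and that magnitudes are drawn uniformly from the stated ranges, neither of which is specified in the text, and it does not perform the image operations themselves. The names are hypothetical.

```python
import random

# Ranges taken from the text; every transform is applied with probability 0.75.
AUGMENTATIONS = {
    "rotate_degrees": (-10.0, 10.0),
    "zoom_scale": (1.0, 1.1),
    "brightness": (0.4, 0.6),
    "contrast_scale": (0.8, 1.25),
    "tilt": (-0.2, 0.2),
}
APPLY_PROBABILITY = 0.75

def sample_augmentation(rng):
    """Return the transforms selected for one image and their magnitudes."""
    chosen = {}
    for name, (low, high) in AUGMENTATIONS.items():
        # Independent selection and uniform magnitudes are assumptions.
        if rng.random() < APPLY_PROBABILITY:
            chosen[name] = rng.uniform(low, high)
    return chosen

rng = random.Random(0)  # fixed seed so that runs are repeatable
example = sample_augmentation(rng)
```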

| Attributes | Configuration Information |
|---|---|
| Operating system | Ubuntu 14.04.5 LTS |
| CPU | Intel(R) Xeon(R) CPU E5-2670 v3 @ 2.30 GHz |
| GPU | GeForce GTX TITAN X |
| cuDNN | cuDNN 6.0.21 |
| CUDA | CUDA 8.0.61 |
| Framework | Fastai |
| IDE | PyCharm |
| Language | Python |

Experimental platform configuration.

In the training process, we use learning rate warm-up: the learning rate first increases gradually from a small value, and learning rate decay is then used to reduce it gradually. After repeated experiments, the parameters were set as follows: the initial learning rate is 0.00004 and the number of training epochs is 20. During epochs 0–10, the learning rate gradually increases from 0.00004 to 0.0001. During epochs 11–20, the learning rate is gradually reduced from 0.0001 to 0.000046.
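The warm-up and decay schedule can be sketched as a function of the epoch. The text gives only the endpoints, so the linear interpolation used here is an assumption; the actual curve may be shaped differently. The constant and function names are hypothetical.

```python
WARMUP_EPOCHS = 10
TOTAL_EPOCHS = 20
LR_START = 4e-5   # initial learning rate
LR_PEAK = 1e-4    # reached at the end of warm-up
LR_END = 4.6e-5   # reached at the last epoch

def learning_rate(epoch):
    """Learning rate at a given epoch, linearly interpolated (assumption)."""
    if not 0 <= epoch <= TOTAL_EPOCHS:
        raise ValueError("epoch must lie between 0 and TOTAL_EPOCHS")
    if epoch <= WARMUP_EPOCHS:
        fraction = epoch / WARMUP_EPOCHS
        return LR_START + fraction * (LR_PEAK - LR_START)
    fraction = (epoch - WARMUP_EPOCHS) / (TOTAL_EPOCHS - WARMUP_EPOCHS)
    return LR_PEAK + fraction * (LR_END - LR_PEAK)

schedule = [learning_rate(epoch) for epoch in range(TOTAL_EPOCHS + 1)]
```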

3.3. Evaluation Criteria

Based on the evaluation criteria adopted by most medical image classification models, we use accuracy, precision, F1-score, sensitivity and specificity as performance indicators. The formulas for these evaluation criteria are as follows:
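Written per class in a one-vs-rest fashion, the standard textbook definitions of these criteria are given below. They are the usual forms rather than a verbatim copy of the equations; how the per-class values are combined over the three classes is not stated, and a simple average over classes is assumed here.

$$
\begin{aligned}
\mathrm{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\mathrm{Precision} &= \frac{TP}{TP + FP} \\
\mathrm{Sensitivity} &= \frac{TP}{TP + FN} \\
\mathrm{Specificity} &= \frac{TN}{TN + FP} \\
\text{F1-score} &= \frac{2 \times \mathrm{Precision} \times \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}}
\end{aligned}
$$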

Among these equations, TP represents true positive, FP represents false positive, FN represents false negative and TN represents true negative.

3.4. Experimental Results

In order to study the effect of CFW-Net depth and classifier on chest X-ray image classification performance, we used nine CFW-Net variants to conduct experiments on the datasets. The experimental results are shown in Table 3. In Table 3, the best experimental results are bolded.

| Model | Accuracy | Precision | Sensitivity | Specificity | F1-score | COVID-19 Acc |
|---|---|---|---|---|---|---|
| CFW-Net56-GFC | 94.35 | 96.52 | 94.33 | 97.17 | 95.41 | **100** |
| CFW-Net56-FC | 92.98 | 95.50 | 92.41 | 96.20 | 93.93 | 97.6 |
| CFW-Net56-CGAP | 93.80 | 96.13 | 93.58 | 96.79 | 94.84 | 99.02 |
| CFW-Net107-GFC | 92.70 | 95.45 | 92.07 | 96.18 | 93.73 | 97.06 |
| CFW-Net107-FC | 92.56 | 95.82 | 92.00 | 96.29 | 93.87 | 98.4 |
| CFW-Net107-CGAP | 93.25 | 95.52 | 92.64 | 96.76 | 94.06 | 97.06 |
| CFW-Net158-GFC | 94.49 | 95.58 | 94.45 | 97.44 | 95.01 | 98.4 |
| CFW-Net158-FC | 92.84 | 95.74 | 92.15 | 96.37 | 93.91 | 97.06 |
| CFW-Net158-CGAP | **95.18** | **96.65** | **94.56** | **97.60** | **95.60** | 96.8 |

Performances of different depth CFW-Nets (%).

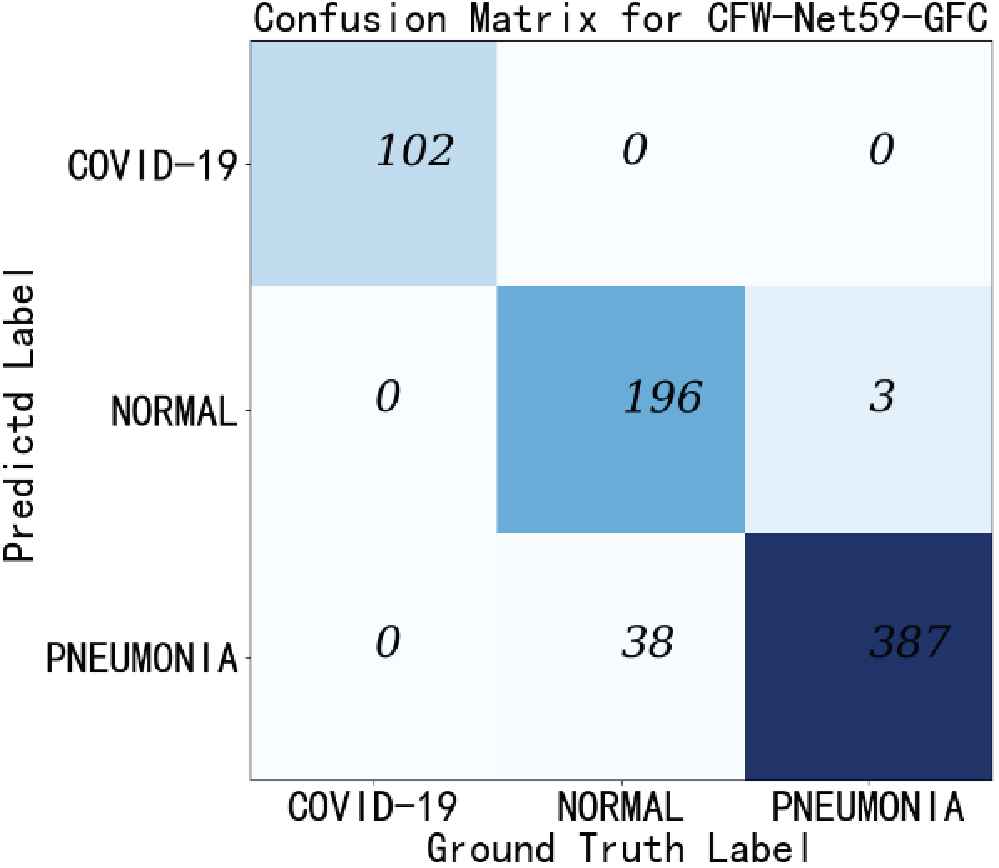

According to Table 3, CFW-Nets that use FC as the classifier perform worse than those using the other two classifiers. CFW-Net158-CGAP has the best overall performance on the datasets, with the highest overall accuracy, precision, sensitivity, specificity and F1-score (95.18%, 96.65%, 94.56%, 97.60% and 95.60%, respectively), but its classification accuracy for COVID-19 is low. CFW-Net56-GFC reaches the highest accuracy in the COVID-19 category, 100%, and its overall accuracy is 94.35%, which is about 1% higher than that of CFW-Net107 and about 1% lower than that of CFW-Net158. This shows that deepening the network does not change its performance significantly. The computation of CFW-Net158 and CFW-Net107 is 2.81 times and 1.91 times that of CFW-Net56, and their parameter counts are 2.46 times and 1.80 times that of CFW-Net56, respectively. Although CFW-Net158 has the highest accuracy, it requires 2.81 times the computation and 2.46 times the parameters of CFW-Net56 for an accuracy gain of less than 1%. CFW-Net56-GFC has the highest accuracy in the COVID-19 category, and its overall accuracy is higher than that of CFW-Net56-CGAP and CFW-Net56-FC. After comprehensive consideration, we believe that CFW-Net56-GFC is the most cost-effective. Figure 8 shows the confusion matrix of CFW-Net56-GFC, and Table 4 gives more detailed results on its recognition performance.

This figure shows the confusion matrix of the CFW-Net56-GFC.

| Class | Accuracy | Sensitivity | Specificity |
|---|---|---|---|
| COVID-19 | 100 | 100 | 100 |
| Pneumonia | 99.23 | 99.23 | 91.88 |
| Normal | 83.76 | 83.76 | 99.62 |
| Average | 94.33 | 94.33 | 97.17 |

Accuracy, sensitivity, specificity of CFW-Net56-GFC (%).
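The relation between the per-class sensitivities and the overall accuracy of CFW-Net56-GFC can be cross-checked with simple arithmetic. The sketch below multiplies each class sensitivity by the size of that class in the test set (102, 390 and 234 images, as given in the dataset description) and rounds to whole images; the resulting counts of correctly classified images are therefore inferred, not reported values.

```python
# Test set sizes from the dataset description and sensitivities from the table.
TEST_COUNTS = {"COVID-19": 102, "Pneumonia": 390, "Normal": 234}
SENSITIVITY_PERCENT = {"COVID-19": 100.0, "Pneumonia": 99.23, "Normal": 83.76}

# Inferred number of correctly classified images per class.
correct = {
    name: round(TEST_COUNTS[name] * SENSITIVITY_PERCENT[name] / 100)
    for name in TEST_COUNTS
}

# Overall accuracy: all correct predictions over all test images.
overall_accuracy = 100 * sum(correct.values()) / sum(TEST_COUNTS.values())

# Unweighted mean of the per-class sensitivities.
mean_sensitivity = sum(SENSITIVITY_PERCENT.values()) / len(SENSITIVITY_PERCENT)
```

The overall accuracy comes out at about 94.35% and the unweighted mean sensitivity at about 94.33%, which agrees with the reported figures and suggests that the averages are taken over classes without weighting.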

According to Table 4, our model has good classification performance for chest X-ray images of COVID-19 positive patients and patients with common pneumonia. In particular, the classification accuracy, sensitivity and specificity for COVID-19 all reach 100%. For normal X-ray images, the classification accuracy is poorer, and such images are frequently misjudged as common pneumonia.

We further compare the experimental results of CFW-Net56-GFC with the traditional CNNs VGG-19, GoogLeNet, ResNet50 and DenseNet121. The experimental results are shown in Table 5, and the best experimental results are bolded.

| Model | Accuracy | Precision | Sensitivity | Specificity | F1-score | COVID-19 Acc |
|---|---|---|---|---|---|---|
| ResNet50 | 93.53 | 96.01 | 93.15 | 96.53 | 94.56 | 98.4 |
| DenseNet121 | 93.11 | 95.98 | 92.75 | 96.38 | 94.34 | 99.02 |
| GoogLeNet | 92.56 | 95.29 | 91.56 | 95.78 | 93.37 | 95.1 |
| VGG-19 | 93.11 | 96.09 | 92.93 | 96.47 | 94.49 | **100** |
| CFW-Net56-GFC | **94.35** | **96.52** | **94.33** | **97.17** | **95.41** | **100** |

Performances of other convolutional neural networks (CNNs) (%).

DenseNet121 uses densely connected dense blocks to further deepen the network, and it achieves good classification accuracy on our datasets. However, its accuracy is still lower than that of our network, and because of the increase in depth, its computation and parameter counts are significantly higher than ours. GoogLeNet has the lowest classification accuracy on our datasets. The accuracy of VGG-19 is about 1% lower than that of CFW-Net56-GFC. Because of its shallow depth, it is difficult for VGG-19 to fully extract image features, which results in lower recognition accuracy. Moreover, because VGG-19 uses three fully connected layers as its classifier, its parameter count and computation are very large, so its hardware requirements are extremely high and its computation time is long. ResNet50 uses skip connections to solve the degradation problem that arises as networks deepen, and achieves good recognition performance on chest X-ray images, but all of its performance indicators are lower than those of CFW-Net56-GFC. The CFWE module can learn the weights of channel feature maps to further improve the classification accuracy and enhance the generalization ability of the model.

We further compared CFW-Net56-GFC with the state-of-the-art methods proposed by Khan et al. [10], Rahimzadeh [12], Ozturk et al. [14], Mahmud et al. [13], and so on. These methods have also achieved good performance in COVID-19 detection, and the experimental results are shown in Table 6. In Table 6, the best experimental results are bolded.

| Reference | Classes | Method | Accuracy | COVID-19 Acc |
|---|---|---|---|---|
| Khan et al. [10] | 3 | CoroNet | **95** | 96.67 |
| Rahimzadeh [12] | 3 | XResNet50V2 | 91.4 | 99.5 |
| Ozturk et al. [14] | 3 | DarkCovidNet | 87.02 | 98 |
| Mahmud et al. [13] | 4 | CovXNet | 90.3 | 85 |
| Our approach | 3 | CFW-Net56-GFC | 94.35 | **100** |

Comparison of CFW-Net56-GFC with other existing deep learning methods.

Although the overall accuracy of the CoroNet method is up to 95%, which is 0.65% higher than that of the CFW-Net56-GFC method, its accuracy for the COVID-19 category is only 96.67%, which is 3.33% lower than that of CFW-Net56-GFC. Although DarkCovidNet's COVID-19 accuracy is as high as 98%, its overall accuracy is low. The accuracy of the other methods is generally lower than that of the method in this paper. According to Table 3, the overall accuracy and COVID-19 classification accuracy of the proposed CFW-Net for chest X-ray image classification have reached a very high level, which shows that our network performs better and is more targeted for chest X-ray image classification tasks.

3.5. Experiments Analysis

According to the experimental results, CFW-Net158-CGAP has the highest overall accuracy (95.18%), and CFW-Net56-GFC has the highest accuracy of COVID-19 classification (100%). We chose CFW-Net56-GFC to compare with other methods. Its overall accuracy and COVID-19 classification accuracy were 94.35% and 100%, respectively, and its overall performance was better than that of other methods.

The datasets used in the experiments consist of chest X-ray images of COVID-19 patients, common pneumonia patients and normal people. Because there are few categories of chest X-ray images, the parameter count and computation of the network classifier are reduced. In addition, we propose three models with different depths. On our datasets, the recognition performance of the deeper networks is not significantly better than that of the shallow network, and the deeper networks overfit more easily. Therefore, for the experimental datasets, the shallow network we designed not only reduces network complexity but also achieves better detection results.

Through experimental analysis, it can be seen that in the COVID-19 chest X-ray image classification task, the network depth should be kept appropriate. If the network is too shallow, it is difficult to extract sufficient features. On the other hand, if the network is too deep, it will cause gradient dispersion or gradient explosion. In addition, if the network is too deep, the degradation problem will occur, and the amount of parameters and calculation will increase greatly.

Gradient dispersion and gradient explosion can be solved by batch normalization, and degradation problem can be solved by skip connections. The introduction of GAP into the classifier can effectively reduce the amount of network parameters and calculation. Because the chest X-ray image has high inter-class similarity and low intra-class variance, it will lead to model deviation and overfitting, resulting in reduced performance and generalization. Therefore, we designed a CFWE module, which contains Conv1, Conv3, GAP and FC structures. Conv1 is used to control the dimension and reduce the number of parameters. Skip connections are used to prevent information loss, increase network depth and solve the problem of network degradation to a certain extent. The channel feature weights are extracted through GAP and two FC layers to improve the feature extraction capability of the network.

Compared with existing approaches, our method is more targeted for the diagnosis of COVID-19. Chest X-ray images have high inter-class similarity and low intra-class variance, and the CFWE module has stronger feature extraction capability for such images. However, compared with other methods, our method cannot diagnose the specific types of common pneumonia, and it has a high rate of misjudgment between common pneumonia images and normal images. In addition, the number of COVID-19 X-ray images in our dataset is small, so we need to obtain more COVID-19 patient images and further train our model to improve its accuracy.

4. CONCLUSIONS

In this paper, we designed a CFWE module for chest X-ray images. Based on this module, we proposed a CNN, CFW-Net, to classify chest X-ray images in order to diagnose and detect COVID-19 cases. We used two open-source datasets, containing 4265 and 1575 images from patients with pneumonia and normal people, and 412 images from COVID-19 patients. Through comparative analysis of the experimental results, we believe that CFW-Net56-GFC offers the best value. Its overall accuracy and COVID-19 category accuracy reached 94.35% and 100%, respectively, and its sensitivity for COVID-19 is 100%. CFW-Net has extremely high accuracy and sensitivity for COVID-19 cases, and can effectively help radiologists and clinicians detect COVID-19. Although good results have been achieved, CFW-Net still needs clinical research and testing, and the number of COVID-19 chest X-ray images used in this study is limited. We hope to obtain more images from COVID-19 patients and use them to further train CFW-Net to improve its accuracy.

CONFLICTS OF INTEREST

The authors declare no conflicts of interest.

AUTHORS' CONTRIBUTIONS

W.W. designed the study, wrote the paper, managed the project and was responsible for the final submission and revisions of the manuscript. H.L. wrote the Python scripts, trained the CNN models and did the data analysis. J.L. configured the experimental environment, arranged the experimental data and organized the datasets. H.N. implemented the feature visualization. X.W. revised the paper. All authors read and approved the final manuscript.

Funding Statement

This research was funded by Scientific Research Fund of Hunan Provincial Education Department under Grant 17C0043, Natural Science Foundation of Hunan Province (CN) under Grant 2019JJ80105, Changsha Science and Technology Project under Grant 29312, and Hunan Graduate Scientific research innovation Project under Grant CX20200882.

ACKNOWLEDGMENTS

We are grateful to our colleagues for their suggestions.

REFERENCES

Cite this article

Wei Wang, Hao Liu, Ji Li, Hongshan Nie, Xin Wang. Using CFW-Net Deep Learning Models for X-Ray Images to Detect COVID-19 Patients. International Journal of Computational Intelligence Systems, vol. 14, no. 1, pp. 199-207, 2020 (2020/11/27). ISSN 1875-6883. https://doi.org/10.2991/ijcis.d.201123.001