A Multimodal Adversarial Attack Framework Based on Local and Random Search Algorithms

, Jie Yu, Yusong Tan, Qingbo Wu

, Jie Yu, Yusong Tan, Qingbo Wu- DOI

- 10.2991/ijcis.d.210624.001How to use a DOI?

- Keywords

- Adversarial attack; Multimodal applications; Adversarial image; Adversarial text; Local search; Random search

- Abstract

Although many problems in computer vision and natural language processing have made breakthrough progress with neural networks, adversarial attack is a serious potential problem in many neural network- based applications. Attackers can mislead classifiers with slightly perturbed examples, which are called adversarial examples. As the existing adversarial attacks are specific to application and have difficulty in general usage, we propose a multimodal adversarial attack framework to attack both text and image classifiers. The proposed framework firstly generates candidate set to find the substitution words or pixels and generate candidate adversarial examples. Secondly, the framework updates candidate set and search adversarial examples with three local or random search methods [beam search, genetic algorithm (GA) search, particle swarm optimization (PSO) search]. The experiments demonstrate that the proposed framework effectively generates image and text adversarial examples. Comparing the proposed methods with other image adversarial attacks in MNIST dataset, the PSO search in the framework has 98.4% attack success rate which outperforms other methods. Besides, the beam search has the best attack efficiency and human imperception in both MNIST and CIFAR-10 dataset. Comparing with other text adversarial attacks, the beam search in the framework has an attack success rate of 91.5%, which outperforms other existing and the proposed search methods. In attack efficiency, the beam search also outperforms other methods, meaning that we can craft text adversarial examples with less perturbation using beam search.

- Copyright

- © 2021 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

With the development of neural network-based deep learning, many problems in computer vision (CV) and natural language processing (NLP) have made breakthrough progress. However, adversarial attack [1] can mislead neural network based applications in CV and NLP domain. For example, by wearing specific eyeglasses, an attacker will evade being recognized or impersonate another individual when he attacks face recognition system [2]. An example of text adversarial attack is that a tiny modification of inappropriate content such as online harassment can deceive Google's toxic comments detector [3]. Adversarial attack is a hot topic in CV domain [4–8]. In order to prove that text is also vulnerable to adversarial attack, several researchers propose text adversarial attacks [8–11] against natural language applications. Although there are many adversarial attacks proposed to reveal the vulnerability of image and text neural network classifier, how to implement an adversarial attack that can generate both text and image adversarial examples still remains a hard problem.

The biggest difference between image and text adversarial attack is that text is discrete data while image can be processed as continuous data. The pixel values can be calculated as continuous data and be clipped to be integers between 0 and 255 when generating adversarial images. Although words can be embedded into a continuous space, they are still discrete points in the space. The gradient methods used in image adversarial attack are no longer valid in attacking text classifier. There are several methods for crafting adversarial text. Gradient descent and nearest point searching method [12], linguistic synonym replacement [13,14], global and local searching for substitute words [15,16] are typical methods to generate adversarial text. However, these methods have difficulty in general usage. For instance, linguistic substitution method is specific to application. Besides, the attacks against text classifier cannot directly attack image classifier, and vice versa.

In this paper, a multimodal adversarial attack framework is proposed to generate both text and image adversarial examples. With this framework, text and image are formalized into a unified data type thus the attacks in the framework can be both applied to text and image classifiers. There are two main challenges to construct such a multimodal adversarial attack framework.

One challenge is how to unify text and image data so that the attack can be formalized to a solvable problem. In the proposed framework, both text and image data can be represented as tensor, i.e., a multi-dimensional array. Each word in a text is represented as a word vector, so text is a (text length, word vector dimension) shape tensor. Similarly, image is a (image height, image width, color channel) shape tensor. After unifying image and text data, we introduce a distance function d to measure the difference between the adversarial and original example. Then, in our unified framework, the adversarial attack is formalized as finding a tensor that satisfies (1) can mislead the classifier and (2) d is as small as possible.

The other challenge is that the search space is huge so that we need some techniques to improve the search efficiency. We combine a process named candidate set generation (CSG) and local/random search methods to solve the huge search space problem. CSG generates a candidate set which consists of limited number of tensors in each searching iteration. The candidate set aims to contain an adversarial example to mislead classifier. The candidate set is updated with several variants of search methods if it doesn't contains an adversarial example.

The workflow of the proposed multimodal adversarial attack framework are as follows. First, the text and image data are preprocessed to be tensors. Then, in the CSG the saliency map is proposed to measure the influence of pixels or words on the classification result. The saliency map is to determine the modification priority of each pixel or word. According to the modification priority, several pixels or words are modified and added to the candidate set. If the candidate set contains an adversarial example, the adversarial attack problem is solved. Otherwise, a variety of local/random search methods, such as beam search, genetic algorithm (GA) and particle swarm optimization (PSO), are applied to update the candidate set until it contains an adversarial example. By using this framework, multimodal adversarial examples can be crafted successfully. In summary, the contributions of this paper are as follows:

We propose a multimodal adversarial attack framework which can generate both image and text adversarial examples. It formalizes the image and text data as tensor and then searches adversarial examples in the space of tensor.

We propose saliency map to measure the modification priority of each pixel or word. Using saliency map, the CSG process is proposed to generate images or texts that are most likely to be adversarial examples.

We combine CSG and 3 local/random search methods to solve the huge search space problem. The 3 search methods are variants of the standard beam search, GA and PSO.

2. RELATED WORK

CV and natural language applications are vulnerable to adversarial attacks. Both image and text classifiers can be misled by tiny perturbed examples. The image adversarial attacks are proposed earlier than text adversarial attacks. Neural network classifiers usually use gradient descent to decrease a loss function in order to improve its generalization performance. Thus the attackers use gradient ascent to mislead the classifier and propose several gradient-based adversarial attacks. LBGFS [1], fast gradient sign method (FGSM) [17], basic iterative method (BIM) [18] are typical gradient-based image adversarial attacks.

After that, more sophisticated adversarial attacks are proposed to solve specific problems. Papernot et al. [8] propose transferability to attack the black-box image classifier. Sharif et al. [2] propose a specially made eyeglasses to attack face recognition system in real life. In 2016, Papernot et al. [5] propose Jacobian-based Saliency Map Attack (JSMA), in which saliency map measures the significance of each pixel's impact on classification results. Continuously selecting the most significant pixel to modify during the attack improves the attack efficiency and dramatically reduces the amount of pixels that need to be modified. A more extreme attack is one pixel attack [7], which only modifies one pixel to make the classifier go wrong. In 2017, Carlini and Wagner [19] propose a set of attacks (C&W Attacks) that regard the attack as a constrained optimization problem and achieve good results against defense methods based on gradient smoothing such as knowledge distillation [20].

The text adversarial attack is also widely studied in the literature. There are two categories of text adversarial attack: character-level attack and word-level attack. Character-level attack perturbs the text by modifying character. Hotflip [10] is for the character RNN classifier. It is also a gradient-based white box text adversarial attack method. Gao et al. [21] propose simple character-level transformations to change the original classification of black-box text classifier with minimal edit distance of the perturbation. Hosseini et al. [3] propose several effective attempts to attack Google's Perspective API.

Word-level attack perturbs the text by modifying word. Liang et al. [14] point out the difficulty of text adversarial attack and propose three strategies including inserting, deleting, and modifying to perturb text. These strategies regard the classifier as a white box, calculate the significance of each word's impact, and then change the corresponding position of the text. Samanta and Mehta [9] use an adversarial attack against the IMDB [22] Film Review Classifier and the Twitter Gender Classifier to make a serious error in inferring the results. Papernot et al. [12] propose that forward derivation can be used for RNN. They propose the RNN version of FGSM. Ren et al. [23] propose probability weighted word saliency (PWWS) for text adversarial attack. The change of the classification probability is used as the weight of the original word saliency (WS). This strategy increase attack success rate and reduce the word substitution rate. TextBugger [13] uses the method of finding important words and then replace it. There are also methods using local heuristic search to generate adversarial examples. Alzantot et al. [24] propose the application of GAs to find adversarial text. Kuleshov et al. [16] propose using local greedy search to attack spam detectors and fake news detectors. Jin et al. [25] show that the pretrained BERT [26] which has the best language model performance is also vulnerable to adversarial attacks. Other methods such as graph model [27], game theory [28], and KL divergence [29] can all be used to construct adversarial text.

To the best of our knowledge, our framework is the first multimodal adversarial attack framework that can generate both adversarial images and adversarial texts. In the proposed framework, we use a unified formalization for text and image examples and 3 local/random search algorithms for searching adversarial examples. Compared to other adversarial attacks, the advantages of the search method in the proposed framework are as follows. In each iteration, the amount of perturbations are small (especially beam search) and the perturbations are toward the direction of label's probability maximum decreasing, resulting in more efficient attacks compared to other methods, i.e., achieving higher attack success rates by using smaller perturbations. Beam search perturbs examples slightly and generates adversarial examples in the neighborhood of the original examples in each iteration. Thus, the generated adversarial examples are similar to the original example. It is difficult for humans to perceive the perturbations in the adversarial example. The disadvantage is that the GA and PSO in the framework may generate more noise in the examples due to the gene mutation and particle movement. However, the random search algorithms can find the adversarial examples faster than the beam search.

3. METHOD

3.1. Adversarial Attack Problem

Both text and image data can be represented as tensor. An image consists of

There are several difficulties in solving the constrained optimization problem (1). First, it cannot be solved with gradient descent based methods. Although words are embedded in a continuous space, the words are still discrete points in this space. So the gradient descent of word vector cannot always find the corresponding word. Second, the search space of

3.2. Multimodal Adversarial Attack Framework

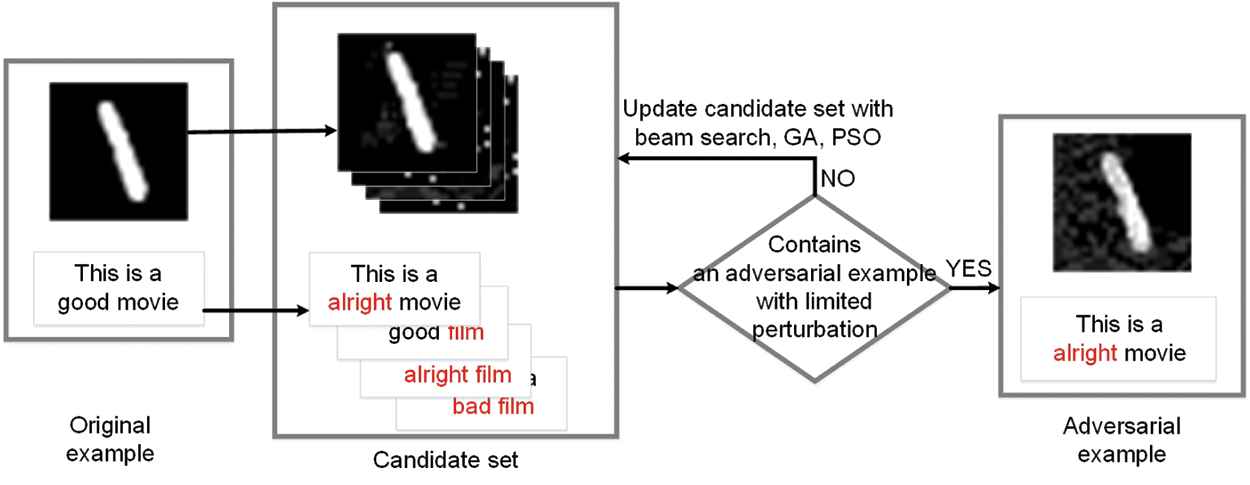

The workflow of the framework is shown in Figure 1. Two most important parts of this framework are CSG and update. During the CSG,

Workflow of the multimodal adversarial attack framework. There are two mainly parts in the framework: candidate set generation (CSG) and its update. The CSG process finds several examples which is near the original example to consist a candidate set. Then several search strategies are utilized to update the candidate set and look for adversarial examples. The candidate set is checked to see whether it contains an adversarial example with limited perturbation.

After CSG process,

3.3. Candidate Set Generation

The CSG process includes two parts: selecting influential pixel or word and selecting substitute pixel or word. First it is necessary to measure the influence of pixels or words on the classification results. We propose the saliency map

If a pixel or word's slightly modification cause the maximum probability changes (

Note that

Second, it is necessary to find substitute pixel or word. For an influential pixel or word

The closest point of

3.4. Candidate Set Update

From the previous subsection we can know that the CSG process generates

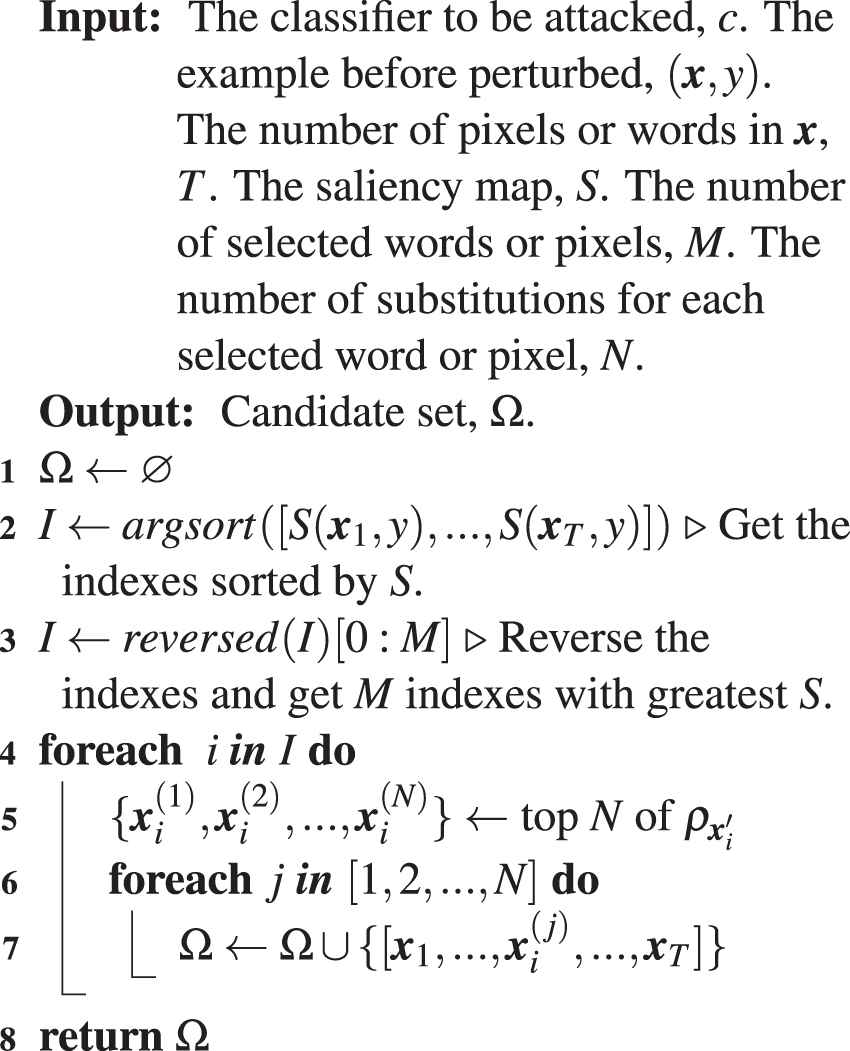

Algorithm 1: Candidate set generation

3.4.1. Search adversarial example with beam search

Completely searching all the generated examples is impossible in practice. So the beam search is utilized to constrain the search branches. The beam search select a certain number of examples for the next iteration. The examples with smallest

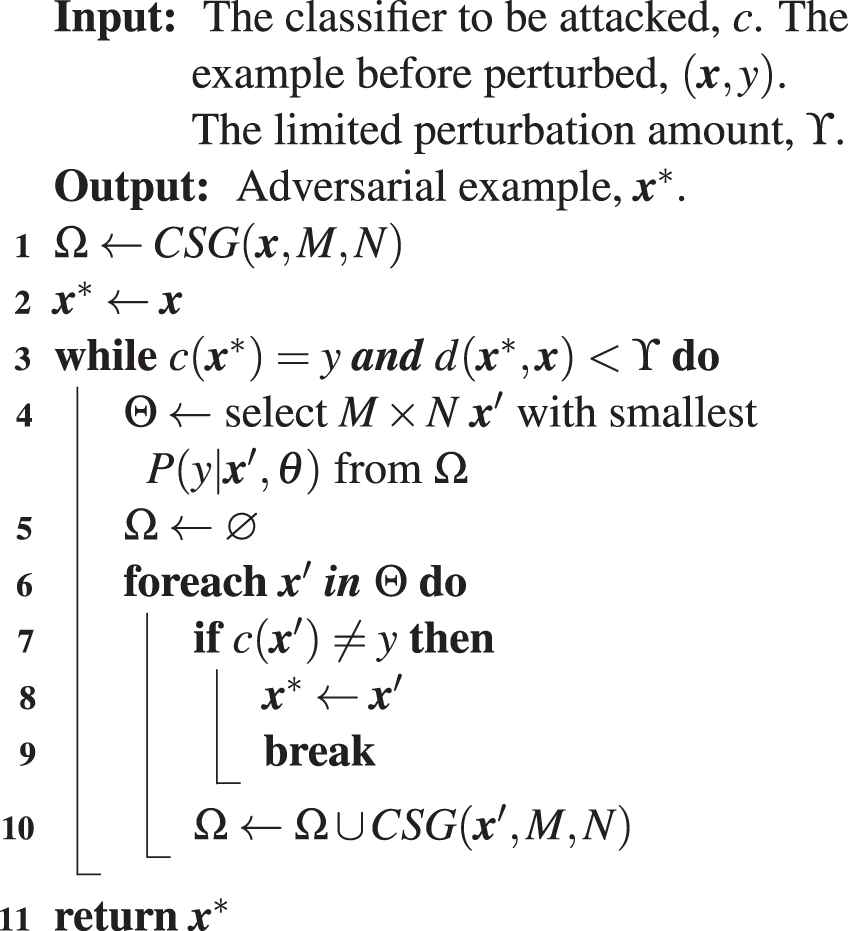

Algorithm 2 describes how to find adversarial example with beam search. In each iteration, every example

3.4.2. Search adversarial example with GA

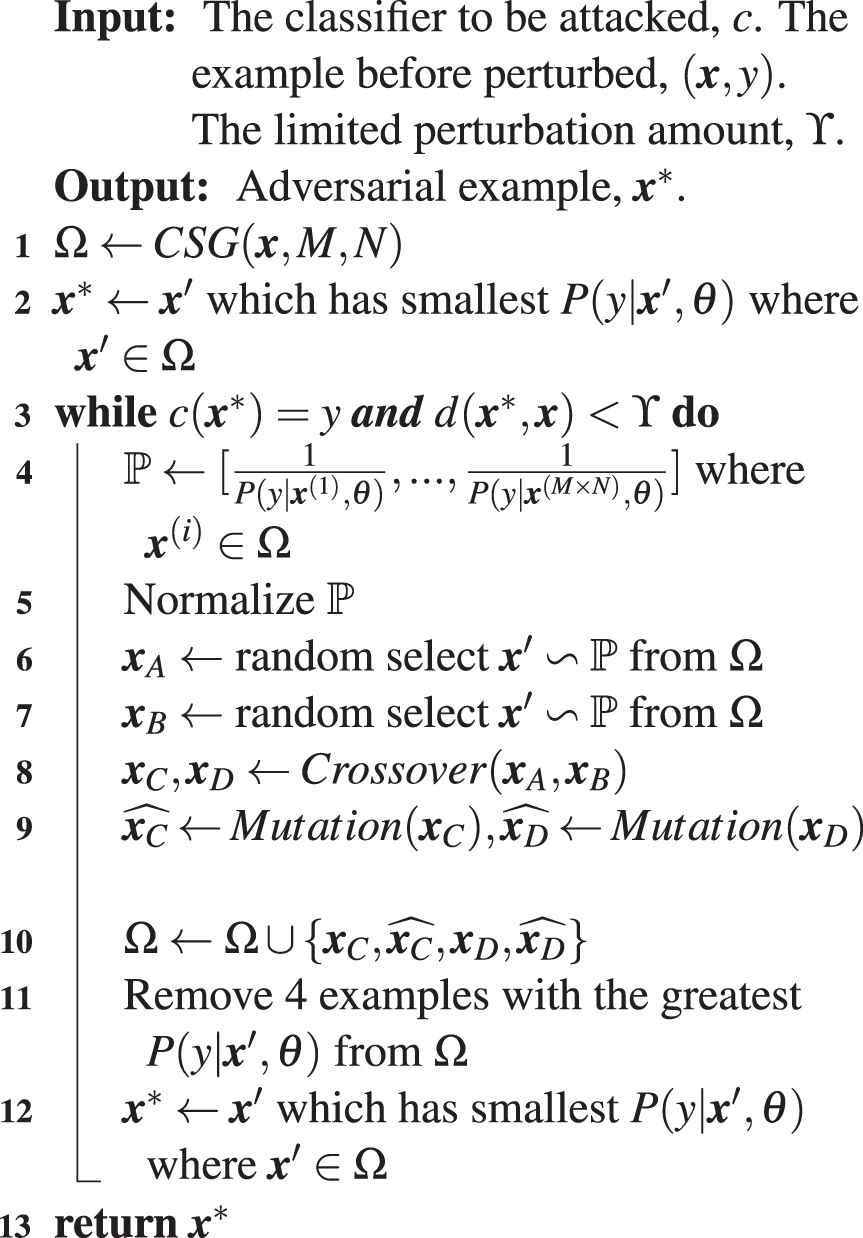

In GA, the candidate set is regarded as a biological population. Examples in the candidate set are individuals. GA aims at making the biological population evolve toward a better optimization objective. To search adversarial examples, the individuals in the population should evolve into individuals with smaller

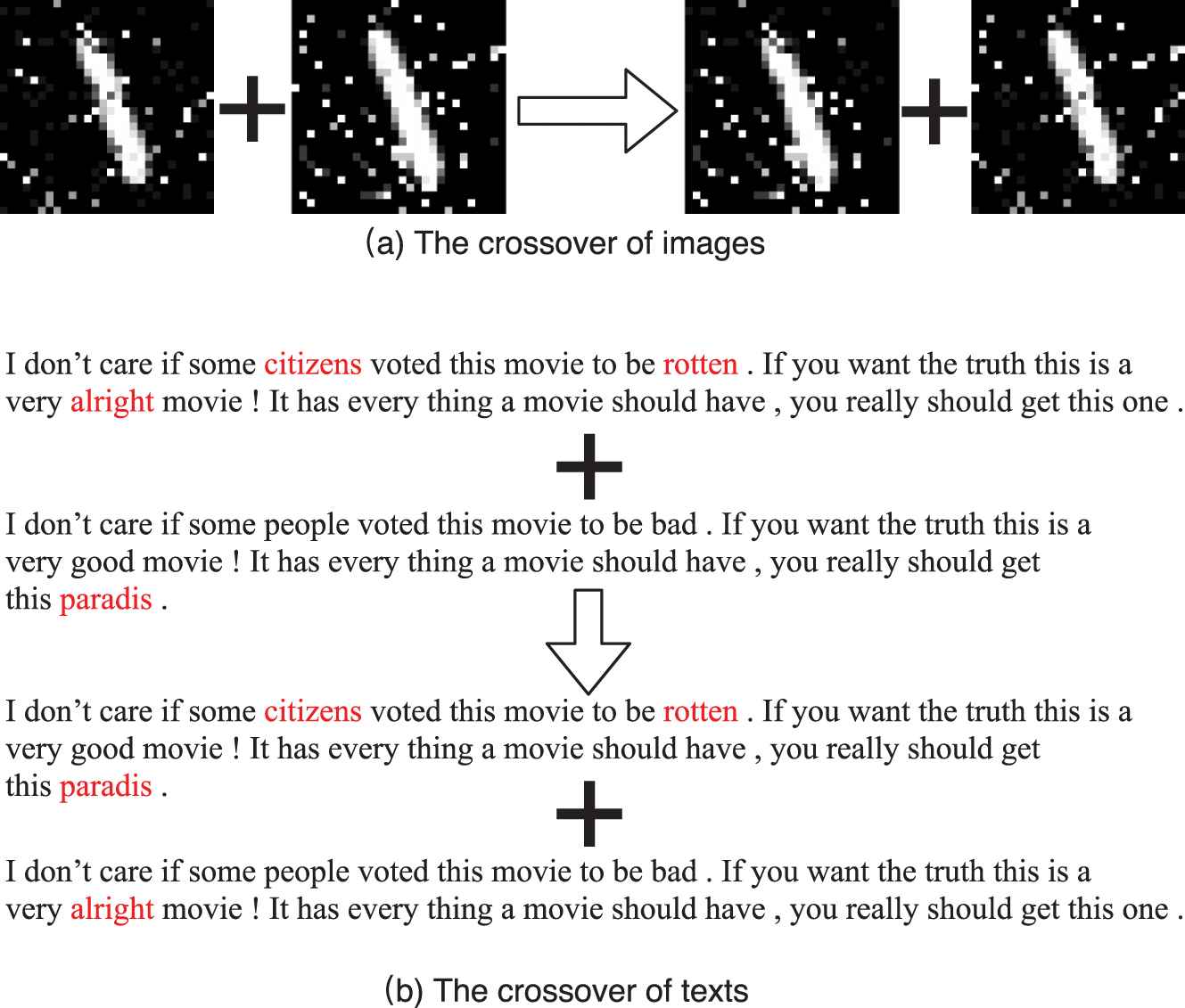

In the crossover process, the examples with greater

Algorithm 2: Search adversarial example with beam search

Algorithm 3: Search adversarial example with genetic algorithm

The crossover of genetic algorithm. To reproduce the next generation from two examples, we propose the crossover process of images and texts. Images or texts are both segmented into two parts and then reassembled to be new examples. For example, the two sentences in the candidate set are truncated from the middle, and then the first half and the second half of the different sentences are assembled together.

3.4.3. Search adversarial example with PSO

PSO regard the examples as particles. The particles have positions in a high- dimensional space. The positions are represented as tensors. In this search method, we make the particles move towards positions which have smaller

At the beginning of each iteration,

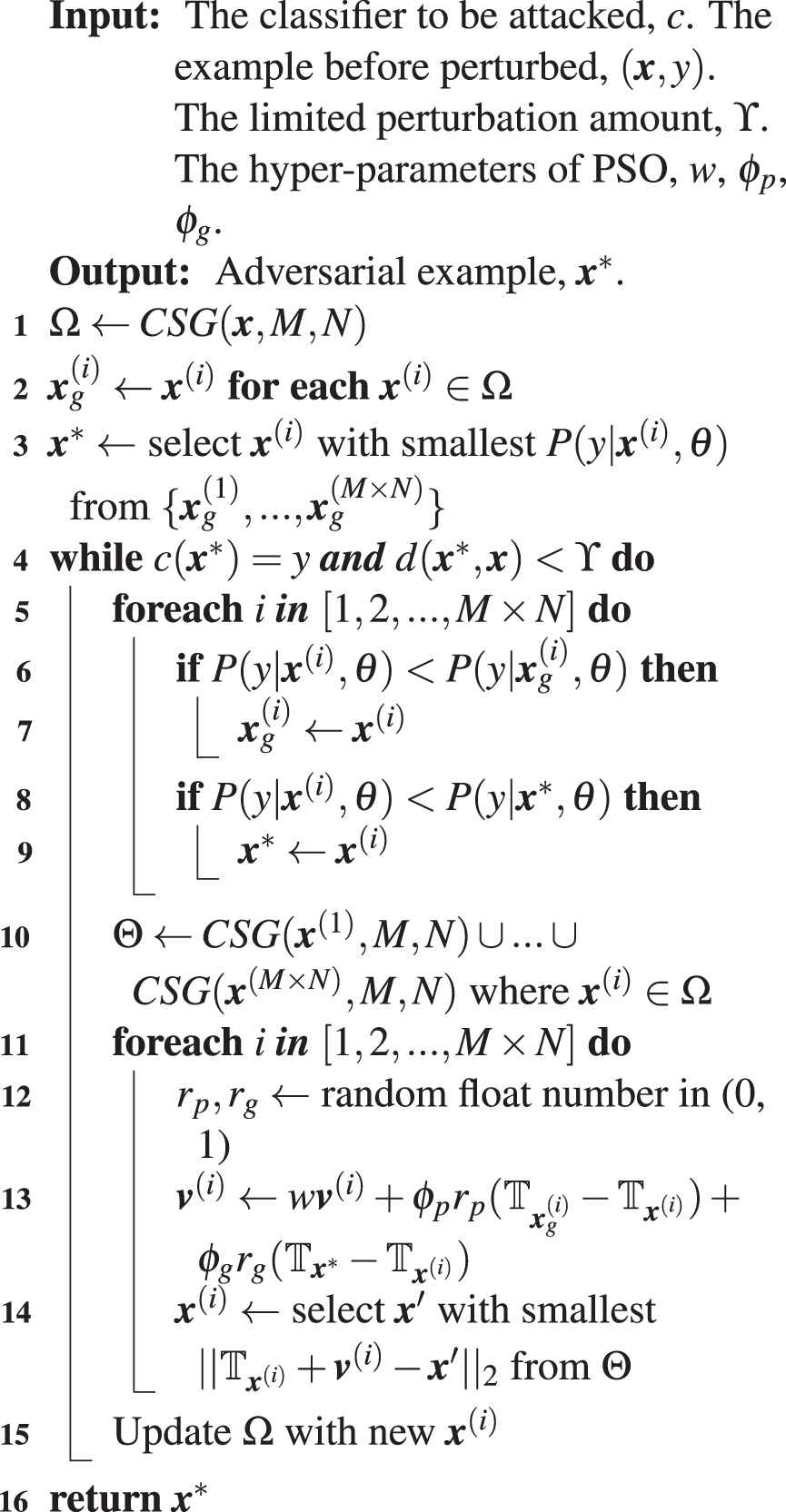

Algorithm 4: Search adversarial example with particle swarm optimization

With this method, the particle swarm will move toward position with smaller

4. EXPERIMENTS

4.1. Experimental Setup

4.1.1. Dataset

MNIST2 is a handwritten digits recognition dataset. It has a training set of 60,000 examples and a test set of 10,000 examples. Each example is an image with its label. The image has 28 × 28 pixels which contain only one color channel: the gray level.

CIFAR-103 is a color image dataset. Each example in the dataset is a 32 × 32 image with a label from 10 categories. There are 50,000 images in the training set and 10,000 images in the testing set. We use MNIST and CIFAR-10 to study the effects of multimodal adversarial attack against image classifier.

The data set used in the text adversarial attack is IMDB [22]. It contains 50,000 film reviews, including 25,000 in training sets and 25,000 in test sets. Each review has a rating score which is range from 1 to 10. Reviews with score greater than 7 are labeled as positive and reviews with score less than 3 are labeled as negative, so the classifier on IMDB conducts a 2-category classification. In addition to the 50,000 labeled reviews, the training set has another 50,000 unlabeled reviews, which can be used to unsupervised train language models.

4.1.2. Experiment platform

The hardware platform used in this experiment is a workstation with an i7-5930k CPU and 2 NVIDIA GeForce TITAN X GPUs. The operating system is Ubuntu 16.04. The TensorFlow [30] version is 1.4.1.

4.1.3. Target classifier

The LeNet [31] is the target image classifier and attacked by the proposed adversarial attacks. LeNet has an excellent performance on handwritten digits recognition. The LeNet is implemented withtensorflow [30] framework. The classifier has 2 convolutional and max pooling layers, 2 fully connection layers and a dropout layer. In the first convolutional layer, there are 32 kernels with size 5 × 5. Note that the “SAME” padding is used in the convolutional layer. The padding technique extends the edge of the 28 × 28 images and maintains its size before and after convolution. After the first 2 × 2 max pooling layer and a ReLU [32] activation function, the outputs are 32 feature images with size 14 × 14. The second convolutional uses 64 kernels with size 5 × 5. Similarly, the output of the second 2 × 2 max pooling layer are 64 feature images with size 7 × 7. Then there is a fully connection layer which takes 3136 (= 7 ∗ 7 ∗ 64) neurons as the input and outputs 1024-dimensional vector. There is a dropout layer with keep probability 0.5 after first fully connection layer. Last layer is also a fully connection layer which outputs 10-dimensional vector. The greatest float number in the 10-dimensional vector indicates the label of original input image. We use MNIST training set to train the LeNet model. The model achieves 99.2% accuracy on MNIST digital recognition test set.

A double layer bidirectional LSTM [33] network is the target text classifier. The hidden state number of LSTM cell is 512. The dimension of the word vector is 256. The vocabulary size is 86,934. The sequence length is 400. The training of RNN has two phases: language model pretraining and classification model training. To pretrain the language model, we use the output of current LSTM cell to predict the next word. Through the adam optimization method [34] with 0.001 initial learning rate, all of the labeled and unlabeled reviews in the training set are utilized to pretrain the language model and word embedding. After pretraining, we train the classification model, the LSTM structure are the same but a fully connected layer and a softmax layer is added in order to predict the sentence's probability of each class. The loss function is cross entropy. The training phase cost about 4 hours on the experimental platform.

4.1.4. Evaluation metrics

We propose the following metrics to evaluate the performance of the attacks.

Attack success rate is the ratio of successfully misled examples to the correctly classified examples. The success rate is defined with

Image perturbation rate evaluates the image's perturbation amount.

Text perturbation rate evaluates the text's perturbation amount. It is the ratio of the changed words to the length of text.

4.1.5. Baselines

To compare the proposed multimodal adversarial attack framework with other image adversarial attacks, we implement FGSM [17], BIM [18], JSMA [5], threshold attack [35], HopSkipJump [36] attack as the baselines. FGSM simply applies gradient ascent to modify images while BIM iteratively applies gradient ascent until attack successes or exceeds the perturbation limit. JSMA modifies images pixel by pixel. Threshold attack restricts the number of modified pixels. HopSkipJump is a black-box attack, but it develops an estimation method for calculating gradient. They are both iteration based attacks. These attacks against MNIST and CIFAR-10 classifier are implemented using ART,4 which is an adversarial attack and defense tool box.

As for the text adversarial attack, we compare the proposed multimodal attacks with other 4 existing attacks: the greedy search attack [16], WS attack [9], [23] PWWS, fast gradient projection method [37] (FPGM). The greedy search attack, which is similar to beam search, selects some synonym words which can maintain the semantic and syntactic similarity in each iteration. Then it find one word that has the smallest probability of being classified as its original label after substitution. WS calculates best substitution word for each position. Then it calculates each position's influence on classification result and substitute words in order of the influences. PWWS is an improved WS. Its calculation of WS is weighted with probability change. Thus the WS of PWWS considers both each position's influence and probability change. FGPM is also a synonym substitution-based text adversarial attack. Its substitution is scored by the product of gradient magnitude and the projected distance between the original word and the candidate word in the gradient direction.

4.2. Multimodal Adversarial Attacks Against Image Classifiers

4.2.1. Attack effectiveness

To prove that the proposed framework is effective in attacking image classifier, we implement 5 baseline methods and Algorithms 2–4 to attack the target model. In the attack we can find that if we do not limit the perturbation amount, the attack will always succeed. Therefore, there should be restrictions in the algorithm, i.e.,

| Attack Success Rate (1.0 Perturbation) | Averaged Attack Time | |

|---|---|---|

| FGSM | 59.2% | |

| BIM | 95.9% | |

| JSMA | 96.8% | |

| Threshold | ||

| HopSkipJump | 97.3% | |

| Beam search | 94.7% | |

| GA search | ||

| PSO search |

Comparing the proposed attacks with the baseline attacks on MNIST dataset.

| Attack Success Rate (1.0 Perturbation) | Averaged Attack Time | |

|---|---|---|

| FGSM | 84.5% | |

| BIM | 96.1% | |

| JSMA | 97.4% | |

| Threshold | ||

| HopSkipJump | 96.7% | |

| Beam search | 97.7% | |

| GA search | ||

| PSO search |

Comparing the proposed attacks with the baseline attacks on CIFAR-10 dataset.

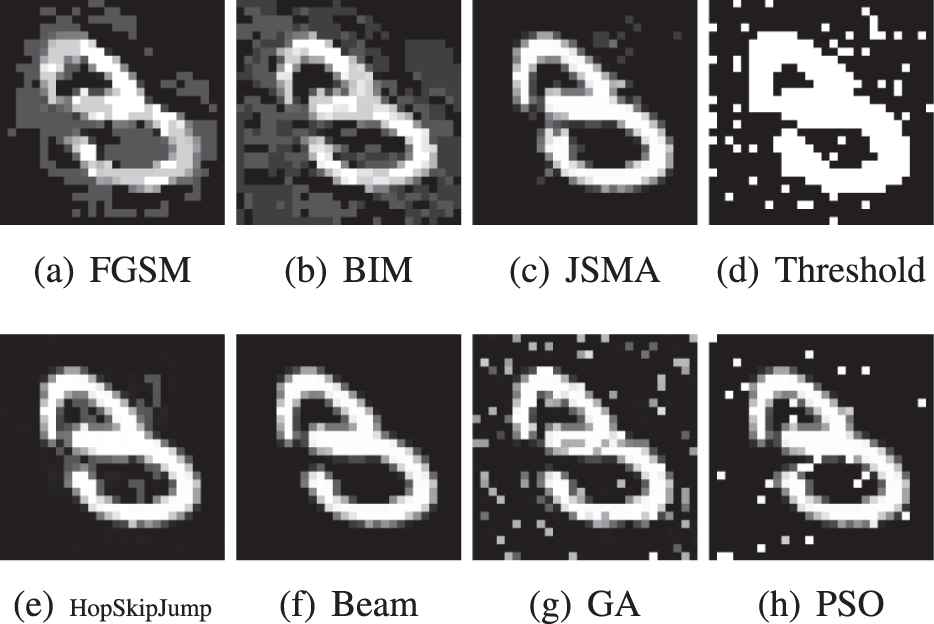

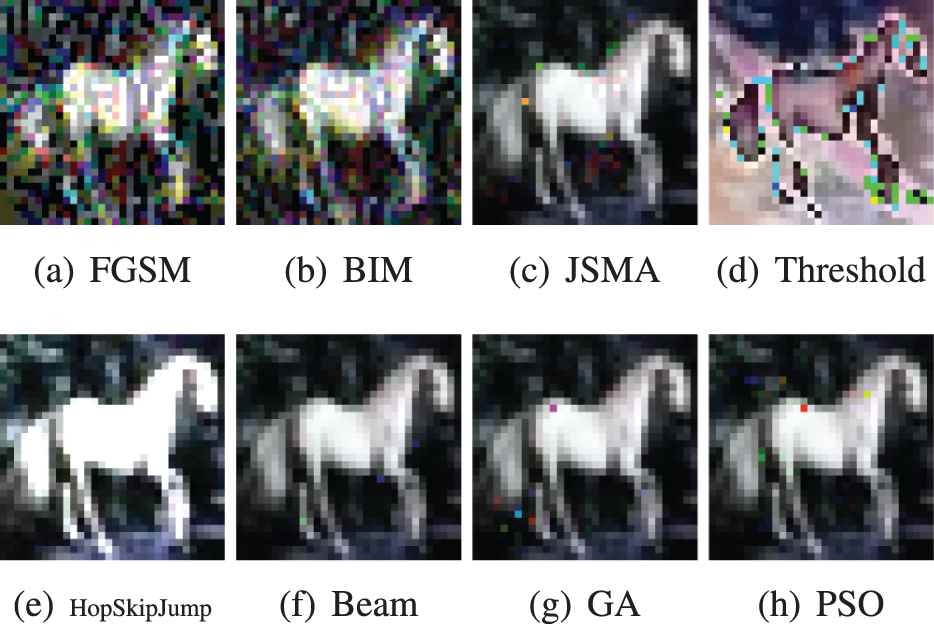

The examples in MNIST and CIFAR-10 are input into the framework to generate adversarial examples. Figures 3 and 4 show some of the adversarial examples. It can be seen from the figures that the modification pattern of FGSM and BIM are very similar. FGSM is a gradient ascent method, which adds all pixels with gradient sign. BIM iteratively adds gradient sign. This is the reason why FGSM and BIM has similar modification pattern. GA, PSO, and threshold add noises to the images in order to mislead the classifier. The reason why PSO generates these noises is that the particles change simultaneously in multiple directions, and the particles may move to an unpredictable location in space. GA generates noises due to its mutation on unpredictable positions. Threshold attack uses differential evolution (DE) to modify original images. The randomness in DE causes the noises in adversarial images. The generated examples of beam search and JSMA are similar to the original example. The reason is that these attacks modify images pixel by pixel. The pixels on the edge of the objects in images are usually more influential on classification result. Modifying the object edge generates examples that are very similar to the original example. HopSkipJump tends to modify influential local pixels to generate adversarial examples, so its attack pattern is that local area of image being modified.

Comparison of the generated image examples in MNIST dataset. The modification pattern of FGSM and BIM are similar. Both of them craft adversarial images by modifying the entire pixels at the same time. GA, PSO, and threshold add noise to the images in order to mislead the classifier. JSMA, beam search, and HopSkipJump tend to modify only the influential pixels to minimize the amount of modification.

Comparison of the generated image examples in CIFAR-10 dataset.

4.2.2. Attack efficiency

As can be seen from Tables 1 and 2, the attacks proposed in the framework can effectively produce adversarial examples. In MNIST dataset, PSO can achieve the best attack success rate 98.4%. Although PSO has the best success rate, its adversarial examples are easily to be perceived by humans. The beam search is better in deceiving human perception. In CIFAR-10 dataset, beam search can achieve the best success rate. To quickly generate adversarial images, BIM and JSMA is better because of their short averaged attack time and relatively greater attack success rate. The FGSM and pixel attack is not recommended. Their attack success rates are too low.

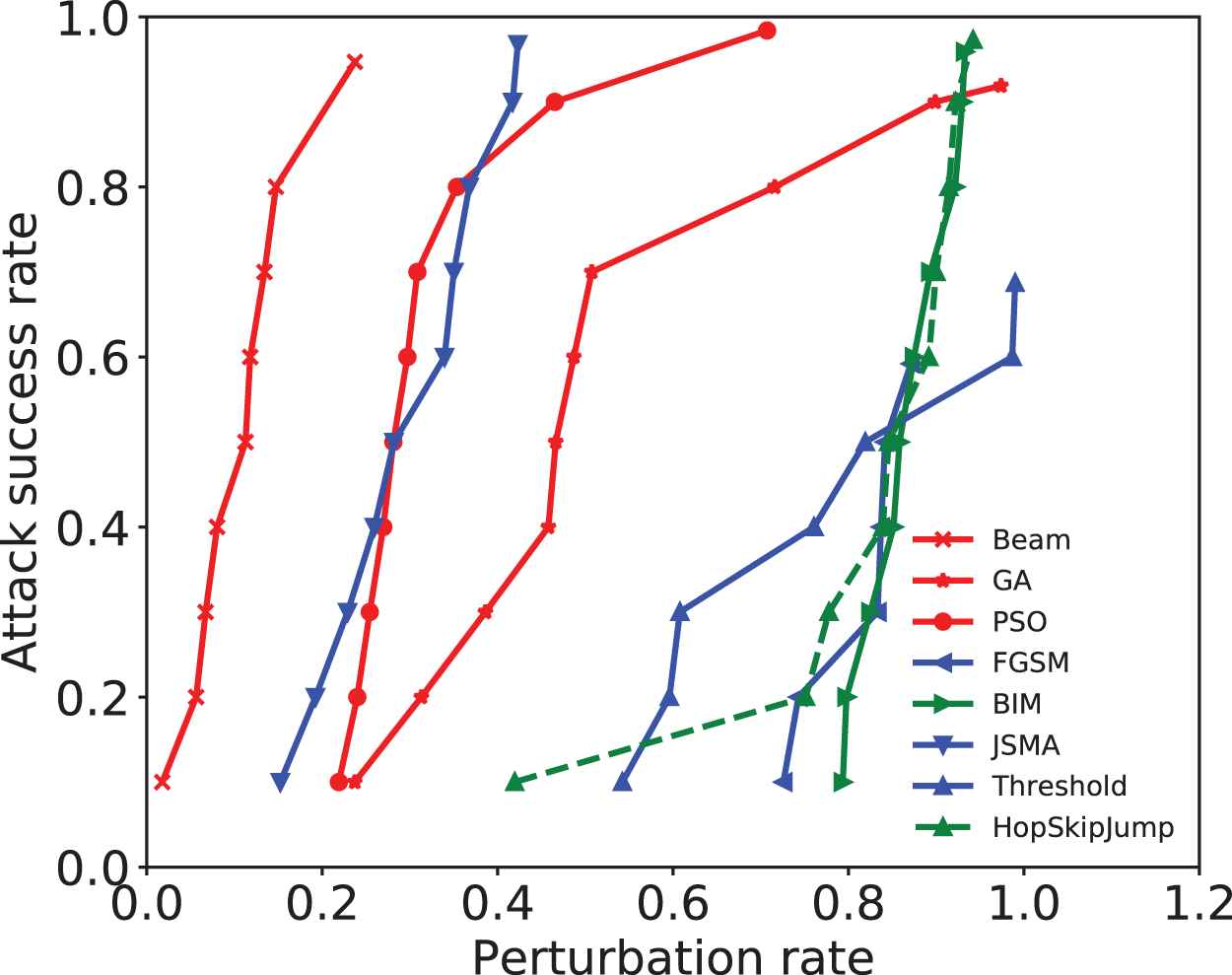

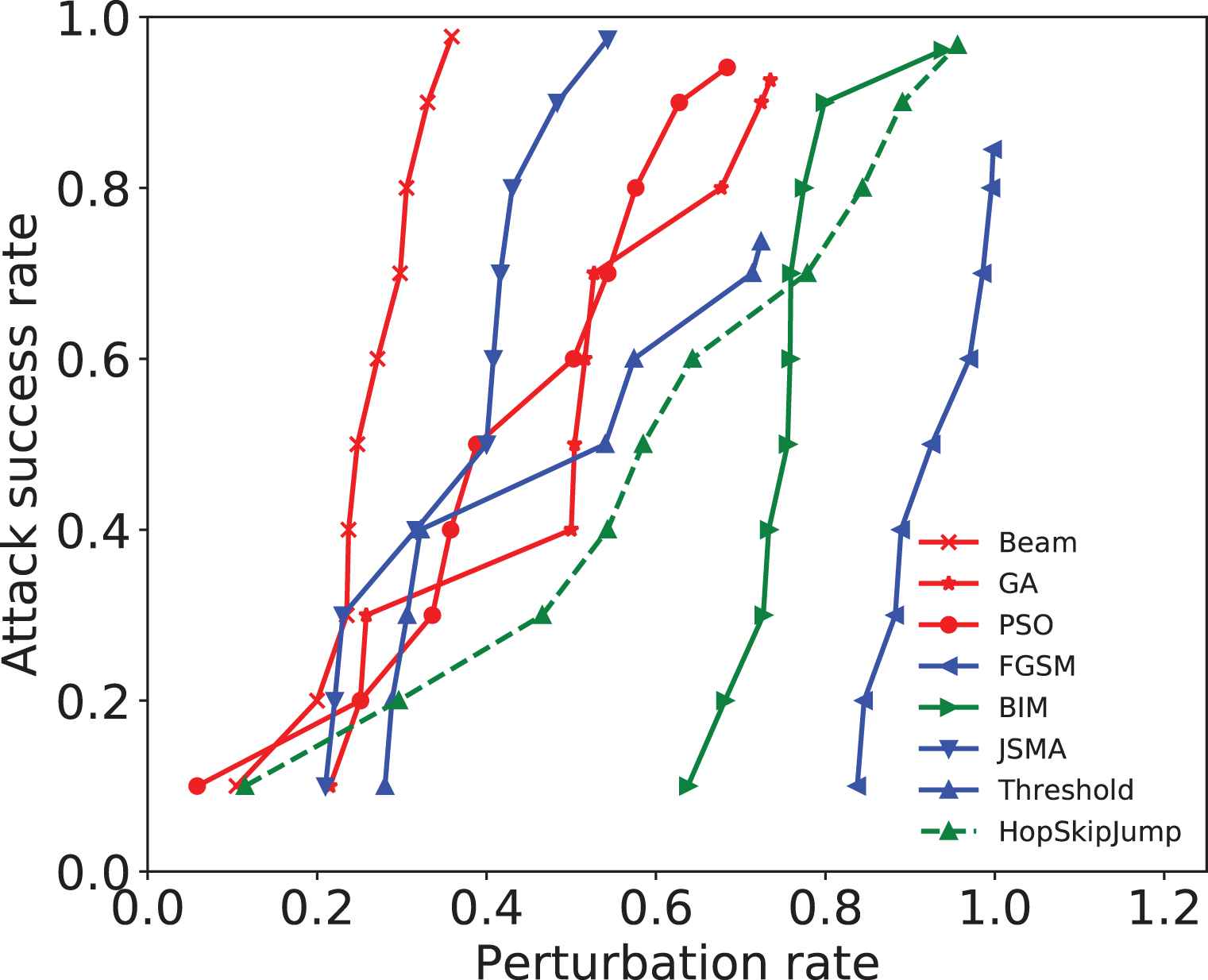

In image adversarial attack, a high efficiency means the attack has better success rate with less perturbation rate. Figures 5 and 6 shows the attack success rate–perturbation curves. The point in the figure represents an attack's success rate with a restricted perturbation rate. For example, a perturbation rate of up to 0.147 can make a beam search successfully attack 70% of the examples, so the point (0.147, 0.7) is on the curve of beam search attack. We can conclude with Figures 5 and 6 that in the baseline methods, JSMA has the best attack efficiency. In MNIST dataset, the attack success rate of beam search is 2.1% less than JSMA, but its perturbation rate is nearly half of JSMA. In CIFAR-10 dataset, beam search achieves greater attack success rate with less perturbation. Therefore, the beam search in the framework has better attack efficiency than other attack methods.

The attack success rate–perturbation curve in MNIST dataset. It shows the range of perturbation rate and attack success rates. For example, the adversarial images' perturbation rates of beam search range between 0 and 0.25, which is the least perturbation rate in all attacks. Beam search can obtain an attack success rate over 90%. Other attacks need more perturbations to achieve the same attack success rate. Thus, the attack efficiency of beam search outperforms other attack methods.

The attack success rate–perturbation curve in CIFAR-10 dataset.

4.2.3. Human evaluation

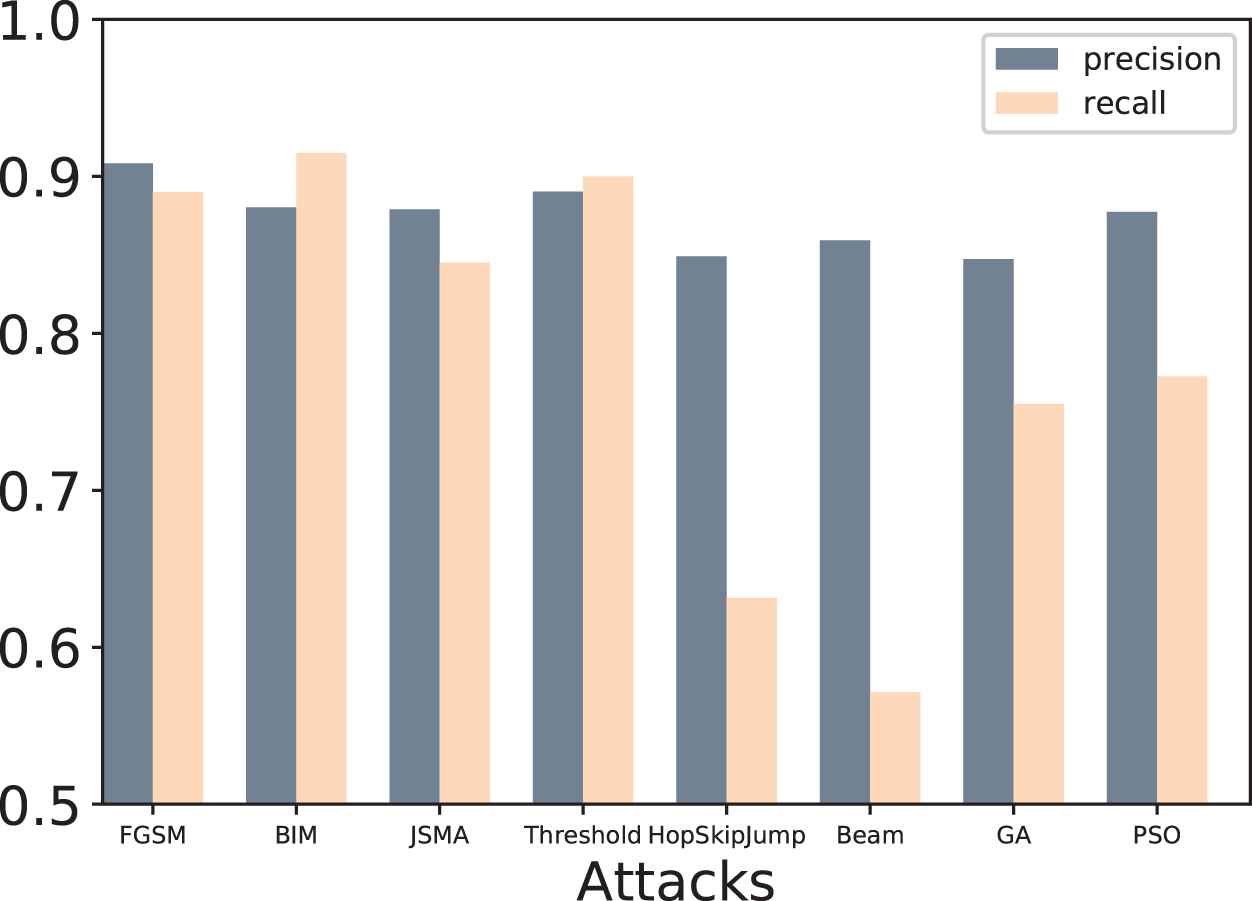

In this part we study the imperceptibility of adversarial images. An imperceptible adversarial example is more likely to evade human perception so we implement a human evaluation. We randomly select images for human evaluation from adversarial examples generated by the 5 baseline attacks and 3 proposed attacks. For each attack we select 100 images, 50% of which are from original images and the rest are from adversarial images. So there are 700 images for one volunteer to determine whether the images are adversarial or not. We ask 8 volunteers to do the test. We use precision and recall to evaluate volunteers' results. Precision is the proportion of recognized real adversarial examples to the adversarial examples recognized by volunteers. Recall is the proportion of recognized real adversarial examples to the real adversarial examples. High precision indicates that volunteers can identify adversarial example precisely. High recall indicates that the volunteers can find the adversarial examples completely.

Volunteers averaged precision and recall are shown in Figure 7. We can see from the figure that the precisions of volunteers are above 84%, meaning that human can identify adversarial examples precisely. However, the recalls of each attack are various. BIM attack has the highest recall 91.5%, indicating that most of the adversarial examples generated by BIM are recognized by human. Beam search attack has the lowest recall 57. 1%. A low recall indicates that the adversarial examples can easily evade volunteers' perception. In other words, about half of the adversarial examples evade human detection. So we can conclude that the adversarial images of beam search attack have the best imperceptibility.

Human testers' averaged precision and recall of recognizing adversarial images.

4.2.4. Study of hyperparameters

In this section, we study the effect of different hyperparameters on experimental results. The hyperparameters used in the proposed framework are: the candidate set size

The above hyperparameters are all related to the candidate set size

| M × N | 2 × 2 | 2 × 3 | 3 × 2 | 2 × 4 | 4 × 2 |

| R | 93.8% | 92.6% | 92.7% | 94.5% | 94.7% |

The attack success rate of beam search when various

Another hyperparameter that affects the success rate of the attack is the perturbation limit

| 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | |

| 46.6% | 48.6% | 50.7% | 71.6% | 89.8% | 91.9% |

The attack success rate of GA when various

4.3. Multimodal Adversarial Attack Against Text Classifier

4.3.1. Attack effectiveness

Text adversarial attack can always succeed if we don't limit the perturbation amount. An extreme case is that a text example is unlimited modified until all of its words are changed. We use text perturbation rate (see Eq. (8)) to limit modification. So the

With the perturbation restriction, we implement the baseline attacks and the 3 attacks in the framework. Some examples of adversarial text are shown the substitute words in Table 5 should be marked in red color. The examples generated by GA and PSO have more diversity. This may be caused by the randomness of GA and PSO algorithm. However the randomness will cause more unnecessary modification. The performances of these 7 attacks are shown in Table 6. The attack success rate of beam search is the best (91.5%) among these attacks. Greedy search can be regarded as a special beam search. In each iteration, the greedy search select only one example with smallest original label probability, while beam search select several examples. Although GA do not achieve a high success rate, it has advantage in the time overhead of finding adversarial examples. WS and PWWS have relatively low attack success rates at 20% perturbation. The reason is that in every position of the sentence, only one substitute word is selected. Comparing with N candidate substitute words are selected in our framework, WS and PWWS are more difficult to find adversarial texts.

| Original | I don't care if some people voted this movie to be bad. If you want the truth this is a very good movie ! It has everything a movie should have, you really should get this one. |

| Greedy | I don't care if some people voted this movie to be bad. If you want the truth this is a very |

| WS | I don't care if some |

| PWWS | I don't care if some people voted this movie to be bad. If you want the truth this is |

| FGPM | I don't care if some |

| Beam | I don't care if some people voted this movie to be bad. If you want the truth this is a |

| GA | I don't care if some |

| PSO | I don't care if some people |

Comparison of the text examples.

| Attack Success Rate (20% Perturbation) | Averaged Attack Time | |

|---|---|---|

| Beam search | 91.5% | 95.3 s |

| GA | 63.4% | 6.6 s |

| PSO | 55.4% | 241.2 s |

| Greedy search | 90.7% | 36.5 s |

| WS | 54.8% | 16.2 s |

| PWWS | 59.3% | 39.6 s |

| FGPM | 88.1% | 112.4 s |

Comparing the proposed attacks with other text adversarial attacks.

4.3.2. Attack efficiency

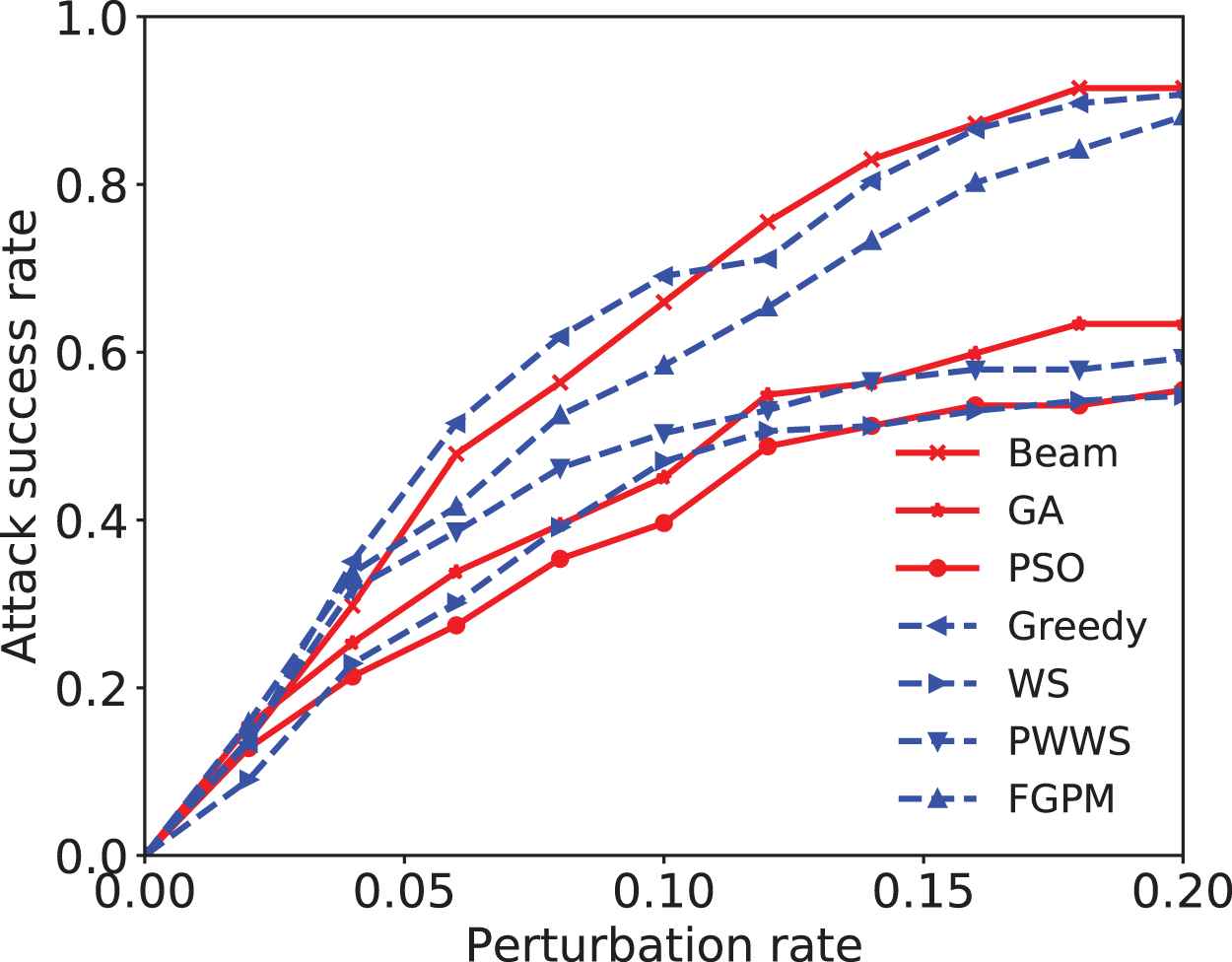

In text adversarial attack, a higher efficiency means the attack has better success rate with less perturbation rate. As we restrict the perturbation with

The attack success rate–perturbation curve of text adversarial attacks.

The attack efficiency of GA and PSO are relatively low. GA and PSO search method simultaneously modify multiple words, which will cause unnecessary modifications when attack success. Comparing the rest 5 attacks, the beam and greedy search attack are better in efficiency. The reason is that beam and greedy's search coverage is wider. WS, PWWS, and FGPM only select one substitute word in each position, while our methods have N candidate substitute words in each position. Thus beam and greedy search can find more suitable words to modify text, resulting in their less text perturbation rate.

4.3.3. Human evaluation

In this part, we evaluate the imperceptibility of adversarial texts. We randomly select 100 sentences of each attack for human evaluation. Half of the sentences are clean text from test set, and rest of them are adversarial texts. Thus there are 600 sentences for one human tester to determine whether the sentences are adversarial or not. We ask 8 volunteers to do the test, the same as we do in the image human evaluation.

The averaged precision and recall are 0.868 and 0.706 respectively. As analyze in Section 4.2.3, high precision indicates that volunteers can identify adversarial examples precisely, and a relatively low recall indicates that the volunteers could not completely find the adversarial examples. To investigate what kind of adversarial texts can easily evade human detection, we group the adversarial texts according to the perturbation rate (see Eq. (8)). There are 4 groups (group

5. CONCLUSIONS

In this paper, a framework is proposed to craft multimodal adversarial examples. The image and text examples are formalized as tensors. In the framework, CSG process is proposed to generate multiple candidate examples from one example. Then 3 local/random search methods are utilized to update the candidate examples and search the adversarial example. Experiments show that the framework can craft adversarial examples that have good attack success rates against text and image classifiers. The time overhead of GA search are better than beam search in both image and text attack. But the beam search has better performance in attack efficiency. In the human evaluation, the adversarial example generated by beam search are more likely to evade human detection.

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

AUTHORS' CONTRIBUTIONS

Paper writing and experiment implementation, Zibo Yi; Methodology, Zibo Yi and Jie Yu; Revising and reviewing, Jie Yu, Yusong Tan, Qingbo Wu; Supervision, Jie Yu, Yusong Tan, Qingbo Wu; Funding acquisition, Jie Yu.

Funding Statements

This work is supported by National Key Research and Development Program of China (No. 2018YFB0204301).

Footnotes

REFERENCES

Cite this article

TY - JOUR AU - Zibo Yi AU - Jie Yu AU - Yusong Tan AU - Qingbo Wu PY - 2021 DA - 2021/07/05 TI - A Multimodal Adversarial Attack Framework Based on Local and Random Search Algorithms JO - International Journal of Computational Intelligence Systems SP - 1934 EP - 1947 VL - 14 IS - 1 SN - 1875-6883 UR - https://doi.org/10.2991/ijcis.d.210624.001 DO - 10.2991/ijcis.d.210624.001 ID - Yi2021 ER -