An architecture based on computing with words to support runtime reconfiguration decision of service-based systems

- DOI

- 10.2991/ijcis.11.1.21

- Keywords

- service-based systems; quality-of-service; linguistic decision making models; computing with words

- Abstract

Service-based systems (SBSs) need to be reconfigured when there is evidence that the selected Web service configurations no longer satisfy the specification models; the decision-related models must then be updated accordingly. However, such updates need to be performed at the right pace. On the one hand, if the updates are not quick enough, required reconfigurations may go undetected because the specification models used at runtime, which were specified at design time, have become obsolete. On the other hand, updating too eagerly promotes premature reconfiguration decisions based on models that may be highly sensitive to environmental fluctuations, which may affect the stability of these systems. To deal with this trade-off, this paper proposes the use of linguistic decision-making (LDM) models to represent the specification models of SBSs, and a dynamic computing-with-words (CWW) architecture that assesses the models at runtime using a multi-period multi-attribute decision making (MP-MADM) approach. The proposed solution allows systems under dynamic environments to offer improved stability by better managing the trade-off between the potential obsolescence of the specification models and the sensitivity introduced by updating these models dynamically.

- Copyright

- © 2018, the Authors. Published by Atlantis Press.

- Open Access

- This is an open access article under the CC BY-NC license (http://creativecommons.org/licences/by-nc/4.0/).

1. Introduction

Service-based Systems (SBSs) are built by composing distributed and heterogeneous services that are capable of partially or fully satisfying their functional and non-functional requirements 1. Most SBSs depend on external third-party services. In contrast to software components 2, these services are outside the system integrators’ control 3: they are deployed on the provider’s site, they are not exclusive, and they may serve several clients at the same time; therefore, they may change in uncertain and unpredictable ways.

Due to the proliferation of services, non-functional requirements have become crucial in the service selection process. Today, the problem for SBSs has changed from finding a service that is capable of satisfying a functional requirement to deciding which one should be selected from several functionally equivalent services. Therefore, services are selected according to how well they satisfy the non-functional constraints (NFCs) of the specification model.

At design time, the non-functional requirements are transformed into concrete and precise NFCs using ranges of numerical values. These numbers are provided by experts whose perceptions are shaped by their own skills, experience, and/or level of knowledge about the domain (the current characteristics of the alternatives). These models are used at design and deployment time to select the services.

Pre-runtime verification that the configurations satisfy the specification models cannot give the guarantees that are needed post-deployment 3 because runtime changes are inherent 4. For example, during runtime, a previously selected service may no longer be the right alternative because: (1) its quality-of-service (QoS) has dropped 4; or, even more difficult to detect, (2) other functional equivalents have become better alternatives than the selected option, so that experts’ and users’ perceptions change the meaning (range of values) of a constraint (e.g. which services are “fast”). Thus, even when the selected service still satisfies the model, it is no longer a valid alternative. Therefore, proposals that assess concrete specification models (using crisp numbers) under dynamic and changing environments (with fluctuations, outliers and/or random trends) may miss the required reconfigurations because the models, and specifically the meanings of the NFCs, may already be obsolete.

SBSs in particular need continuous verification that the current service configurations still satisfy the specification models, because the knowledge about the service market (and specifically about the services’ QoS) that was available before deployment was either incomplete and uncertain, or it may have changed during execution.

To address this issue, it has been proposed in general that specification models should evolve as requirements or environments evolve 9,10 by synchronizing the models’ parameters during runtime 11,12,6,10,7. Satisfaction of these models should be continuously verified 6,8,5. Several implementations have been developed using this concept. For instance, the MOSES framework 11 models changing aspects as average statistic estimators, while the KAMI framework 21 uses Markov chains to periodically recompute the parameters’ values and predict violations.

However, under the dynamic and changing environments of the service market, addressing the obsolescence issue may compromise the stability of the SBSs, because they will tend to make premature reconfiguration decisions due to the oscillating QoS behavior in the service market. In extreme cases, configurations that had previously been discarded may rapidly become valid again, which means that the cost of reconfiguration was unnecessary.

In our ongoing work, we have previously proposed that SBSs’ owners should represent specification models as linguistic decision making (LDM) models, expressing the constraints over services’ QoS as constraints over linguistic values instead of precise numbers 13,14,22. The satisfaction of the models is frequently assessed during runtime by a CWW engine that addresses the models’ obsolescence. Unfortunately, under dynamic and changing environments, with fluctuations and/or outliers, SBSs are too sensitive to fluctuations, which gives rise to premature reconfiguration decisions and affects the stability of these systems.

In this work, to address both issues at the same time (i.e. the obsolescence of specification models and the high sensitivity for reconfiguration of SBSs under dynamic environments), we complement our previous proposal with a CWW engine that uses a multi-period multi-attribute decision making (MP-MADM) resolution approach 35 to assess configurations against the specification models. The engine evaluates and aggregates the models’ satisfaction over several periods in order to determine when a reconfiguration is really needed.

The rest of the paper is organized as follows. In Section 2, we review the LDM models and the CWW architecture as the computational basis in LDM processes. In Section 3, we present our proposal. In Section 4, we first introduce an example and then present an experiment that shows how well our proposal, under dynamic and changing environments, mitigates the degradation of system stability by reducing the number of premature decisions while at the same time ameliorating the problem of obsolescent specification models. Finally, in Section 5 we conclude the paper.

2. Background

Multiple-attribute decision making (MADM) has been widely and successfully used to support decision making in multiple areas. The multi-period multi-attribute decision making (MP-MADM) approach is an extension of MADM in which the decision is also based on historical data. Most decisions that are made in the real world take place in an environment in which the goals and constraints are not known with precision 26 and, therefore, the problem cannot be precisely represented using crisp values 27. Typically, these problems involve human perceptions expressed through broad linguistic constructions (e.g. “nice”, “a lot”, “a few”, “comfortable”). LDM models 28 have been successfully used to solve ill-structured decision problems in a wide range of practical settings, such as personnel evaluation, online auctions, venture capital, supply chain management and medical diagnostics. However, these applications present two challenges: (1) they cannot be solved with the classical tools of decision theory 29,30; and (2) they exist under uncertain environments 31,32,33,34. In order to meet these MADM challenges, a four-stage linguistic resolution scheme 17 has been proposed: the selection of the linguistic term set and its semantics, the selection of the aggregation operator of linguistic information, and the aggregation and exploitation phases.

CWW 15 has been applied as the computational basis in LDM processes 16. It proposes a methodology of reasoning, computation and decision making in which “words” from natural language are used. Several CWW-based architectures have been proposed 15,18,19,20. Their main components are an explanatory database (ED), a CWW engine, an encoder and a decoder.

3. Proposed Solution

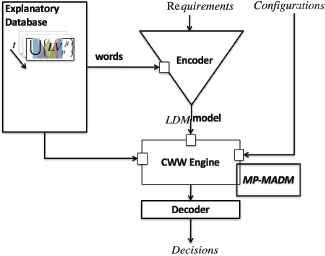

Figure 1 shows the dynamic CWW architecture to support SBSs’ owners in their reconfiguration decisions during runtime under changing environments. In addition to the basic components of a CWW architecture, this proposal needs one more component, the collector, which is responsible for periodically monitoring the QoS values of the services in the marketplace and collecting the QoS measurements.

Figure 1. A dynamic CWW architecture to support SBSs’ owners in performing reconfiguration decisions during runtime. The architecture is composed of the ED (upper-left), the encoder (upper-right), and the CWW engine with a decoder (lower-right). The collector is a key part that continuously senses the service market so that it can measure the QoS.

The left side of Figure 1 shows the ED, which is composed of the set

The encoder in the center of Figure 1 supports humans in the process of reification of the requirements into specification models, which are represented as LDM models by using the available “words” (i.e. linguistic values) provided by the ED. This component supports the first two phases of the linguistic resolution scheme.

We reuse a grammar that was proposed in our previous work 22 to support SBSs’ owners in building the LDM models, which is as follows:

The “at least” (ℒ) and “at most” (ℳ) are unary ordering-based modifiers 23. Given the label sq associated to the linguistic value

The LDM model is a set of aggregated constraints that are written in terms of words (which are extracted from the ED) and the operators. δj() is a function denoting the resulting LDM for the constraint related to the j-th QoS measurement, where the function δj evaluates the level of compliance with the j-th constraint.

Based on the monitored data, the ED component updates the meanings of the linguistic terms (words) at the current time t. At setup time, these values are obtained from the first sample. The collector component is implemented either manually (i.e. by human experts) or automatically. The ED periodically recomputes the parameters of the membership function of each linguistic value according to the available data. For instance, quantiles or the fuzzy c-means algorithm can be used, as in our previous work 24.
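As a minimal sketch of the quantile option mentioned above, the following Python fragment rebuilds one membership function per linguistic value from a fresh sample of measurements. The triangular shape, the function names `triangular` and `build_terms`, and the choice of evenly spaced quantiles as peaks are assumptions made for illustration only; the text does not specify the shape of the membership functions, and shoulder-shaped extreme terms are omitted for brevity.

```python
from statistics import quantiles

def triangular(a, b, c):
    """Return a triangular membership function with feet a, c and peak b."""
    def mu(x):
        if x == b:
            return 1.0
        if x <= a or x >= c:
            return 0.0
        return (x - a) / (b - a) if x < b else (c - x) / (c - b)
    return mu

def build_terms(samples, labels):
    """Assumed scheme: one triangular term per label, peaks at evenly spaced quantiles."""
    n = len(labels)
    # quantiles(..., n=n + 1) returns n cut points; pad them with the observed range.
    cuts = quantiles(samples, n=n + 1, method="inclusive")
    knots = [min(samples)] + cuts + [max(samples)]
    return {label: triangular(knots[i], knots[i + 1], knots[i + 2])
            for i, label in enumerate(labels)}

# Hypothetical availability sample (percent) and the five labels used later in the paper.
labels = ["poor", "fair", "good", "very_good", "excellent"]
terms = build_terms([float(v) for v in range(1, 101)], labels)
```

Calling `build_terms` again on the sample collected in a later period yields the updated meanings of the same words, which is the behaviour the ED is described as providing.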

During runtime, the CWW engine component receives the LDM model, the configuration of services that is currently in use, and the set of available alternative configurations. Based on this information, the CWW engine ranks the alternatives, including the configuration in use, by using the MP-MADM approach. In the MP-MADM approach 35, the time-based fuzzy assessment matrix, R, is constructed using the time sequence of membership functions

Afterwards, the temporal assessments of R are aggregated using the dynamic weighted average 35 (DWA) operator of equation (2). The temporal aggregated assessment at time τ, with a time-window of size Δ, of the j-th QoS attribute of the alternative 𝒜i is given by:

A BUM function is defined as the function f : [0,1] → [0,1] where f(0)= 0, f(1)= 1, f(x) ⩾ f(y) if x > y 25.
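To make the role of a BUM function concrete, the sketch below derives time weights from it using the standard construction w_k = f(k/n) − f((k−1)/n), which always yields non-negative weights that sum to 1. Whether the DWA operator of 35 uses exactly this construction is an assumption, as is the simplification of treating each period's assessment as a single number in [0, 1]; the names `bum_weights` and `windowed_average` are hypothetical.

```python
def bum_weights(f, n):
    """Derive n weights from a BUM function f: w_k = f(k/n) - f((k-1)/n)."""
    return [f(k / n) - f((k - 1) / n) for k in range(1, n + 1)]

def windowed_average(assessments, f):
    """Weighted average of one attribute's assessments over a window (oldest first)."""
    weights = bum_weights(f, len(assessments))
    return sum(w * a for w, a in zip(weights, assessments))

def recent_biased(x):
    """Hypothetical BUM function: f(x) = x**2 gives later periods more weight."""
    return x ** 2

# Window of four periods: the weights grow towards the most recent period.
weights = bum_weights(recent_biased, 4)   # [0.0625, 0.1875, 0.3125, 0.4375]
smoothed = windowed_average([0.9, 0.8, 0.4, 0.7], recent_biased)
```

Choosing f(x) = x instead gives equal weights, so the shape of f is what controls how quickly older periods are forgotten.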

To obtain an ordered ranked list of the provided alternatives, the linguistic weighted average operator (LWA) is used to compute the final score of each one:

The greater the score SCORE(𝒜i) is, the better the alternative 𝒜i will be. A ranking order of the alternatives
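The scoring and ranking step can be illustrated with a simplified numeric sketch. It assumes that each alternative already has one aggregated satisfaction degree in [0, 1] per attribute and replaces the LWA operator with a plain weighted average, which is a simplification and not the operator as defined in the paper. The satisfaction values and the helper names `score` and `rank` are hypothetical.

```python
def score(satisfaction, weights):
    """Weighted average of per-attribute satisfaction degrees (simplified stand-in for LWA)."""
    return sum(weights[attr] * satisfaction[attr] for attr in weights)

def rank(alternatives, weights):
    """Return alternative names ordered from best to worst score."""
    return sorted(alternatives,
                  key=lambda name: score(alternatives[name], weights),
                  reverse=True)

# Equal importance for the three attributes; the degrees below are made-up values.
weights = {"response_time": 1 / 3, "availability": 1 / 3, "throughput": 1 / 3}
alternatives = {
    "TextAnywhereSMS": {"response_time": 0.8, "availability": 0.9, "throughput": 0.6},
    "SmsGatewayService": {"response_time": 0.5, "availability": 0.7, "throughput": 0.9},
}
ordering = rank(alternatives, weights)
```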

4. Results and Evaluation

To illustrate how our proposed solution can be used in a real application, we have designed an Internet-of-things application called Golden Age. This service-based system monitors different aspects of its patients at home (e.g. heart rate and current location), notifying their relatives when appropriate. To send notifications, Golden Age needs a web service that is capable of sending messages (SMS) with at least good performance and good availability. The availability attribute is expressed as a percentage of uptime in a given period of time. Performance is subdivided into response_time and throughput. For this study, we have assumed that the SMS functional requirement needed by Golden Age is implemented by the Web service TextAnywhere SMS.

In this study, we have considered a subset of the services that are listed in the QWS dataset*. The providers of the dataset have collected 5,000 web services with various measurements, and they provide a subset of 365 real web service implementations. The majority of these web services were obtained from public sources on the Web. Each web service presents a set of nine (9) Quality of Web Service (QWS) attributes that have been measured using commercial benchmark tools.

This dataset is partially used to feed the ED, replacing the collector component in this experiment. A total of 33 alternatives out of 2567 possible services were identified as being able to provide the required {SMS} functionality. For instance, we have the following set of alternatives 𝒜 = {TextAnywhereSMS,…,SmsGatewayService}, with the following QoS measurements: (1) response time: the time taken to send a request and receive a response (in milliseconds); (2) availability: the number of successful invocations/total invocations (percentage); and (3) throughput: the total number of invocations for a given period of time (percentage).

We assume that the SBS’s owner is concerned with G = {G(1) = response_time, G(2) = availability, G(3) = throughput} to assess the performance and availability quality concerns. The linguistic variables under consideration are {LV response_time SMS, LV availability SMS, LV throughput SMS}. Golden Age’s owners have agreed to use five linguistic values for each linguistic variable. For availability and throughput, the linguistic values are {poor, fair, good, very_good, excellent}. Meanwhile, for response_time the linguistic values are {very_fast, fast, medium, slow, very_slow}.

Moreover, SBS’s owners have decided that attributes are equally important

The LDM is constructed as follows. First, we identify the minimum level of quality required for each attribute.

- LV response_time SMS: {very_fast; fast; medium; slow; very_slow},

- LV availability SMS: {poor; fair; good; very_good; excellent}, and

- LV throughput SMS: {poor; fair; good; very_good; excellent}.

Next, the natural-language requirements are reified into an LDM model using the Backus-Naur form, as follows:

The satisfaction degree of the alternative 𝒜i to the LDM model is computed as

The SMS functional requirement of Golden Age is implemented by the Web service TextAnywhere SMS; therefore, C = {TextAnywhereSMS}.

Simulation experiments

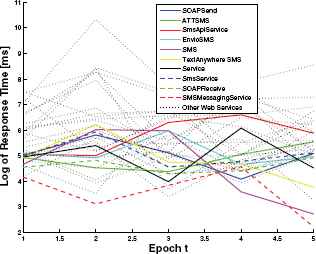

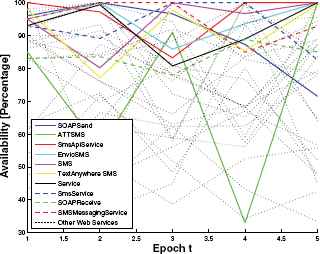

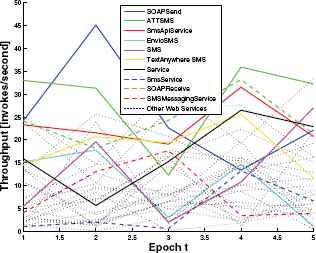

This section describes how the synthetic data has been generated, starting from the measurements of SMS services registered in the QWS dataset. The QWS measurements are treated as the design-time data points (period t = 1). We have considered five periods of time. For the periods ranging from t = 2 until t = 5, we have simulated an autoregressive process (Xt = Xt−1 + εt) for the response_time, availability and throughput measurements, where we have incorporated additive Gaussian noise

Figure 2. QoS measurements of the logarithm of the response time of the web services obtained with the collector component

Figure 3. QoS measurements of the availability of the web services obtained with the collector component

Figure 4. QoS measurements of the throughput of the web services obtained with the collector component
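The autoregressive process Xt = Xt−1 + εt used to generate the synthetic periods can be sketched as follows. The noise standard deviation `sigma`, the seed, and the starting value are placeholders chosen for illustration, since the noise parameters used in the experiment are not recoverable from the text here; the function name `simulate_periods` is hypothetical.

```python
import random

def simulate_periods(x1, periods=5, sigma=1.0, rng=None):
    """Simulate X_t = X_{t-1} + eps_t with Gaussian noise, starting from x1 at t = 1."""
    rng = rng or random.Random()
    series = [x1]
    for _ in range(periods - 1):
        # Each new period is the previous value plus zero-mean Gaussian noise.
        series.append(series[-1] + rng.gauss(0.0, sigma))
    return series

# One hypothetical service: design-time response time of 120 ms, then four simulated periods.
response_time = simulate_periods(120.0, periods=5, sigma=5.0, rng=random.Random(42))
```

Bounded attributes such as availability (a percentage) would additionally need clipping to their valid range; whether the original experiment did so is not stated.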

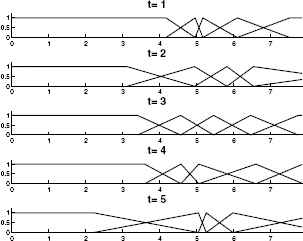

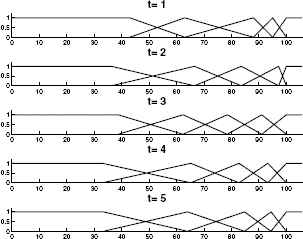

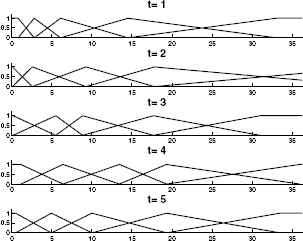

In this proposal, the linguistic terms are obtained from the quantile information of all 33 alternative SMS services in the database. Figures 5, 6 and 7 show the EDs, at different periods of time, of the response time, availability and throughput variables, respectively. Although the resulting time series are random fluctuations with very high variability, the linguistic terms of the ED are only slightly disturbed because they aggregate over all the services. In the figures, it can be seen that the linguistic terms did not undergo major changes through time. This is the expected behavior, because the web services were only affected by random noise and no new trend was introduced that would change the underlying concepts.

Figure 5. ED of the logarithm of the Response Time for the five periods of time

Figure 6. ED of the Availability for the five periods of time

Figure 7. ED of the Throughput for the five periods of time

We have compared the following three approaches:

1. The MADMdesign_time computes the score with the ED obtained at design time;

2. The MADMcurrent_time computes the score with the ED obtained at current time; and,

3. The MP-MADM corresponds to our proposal and computes the score with the historical ED stored since design time.

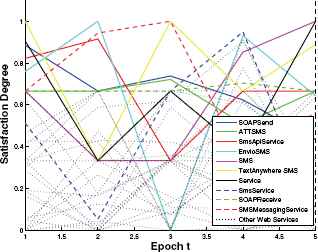

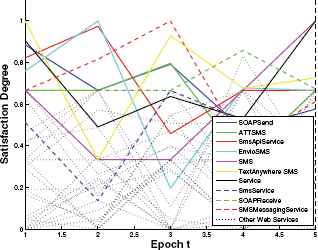

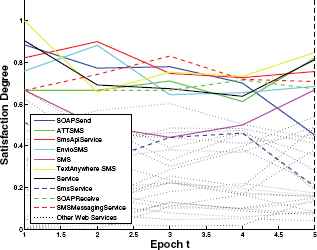

Figures 8, 9 and 10 show the satisfaction degree for the MADMdesign_time, MADMcurrent_time and MP-MADM models, respectively. In Figures 8 to 10, we have arbitrarily highlighted some services in color so that the dynamic behavior of the aggregated score can be better appreciated and tracked. Both the MADMdesign_time and MADMcurrent_time exhibit a highly variable behavior. Meanwhile, the MP-MADM is more stable and, therefore, the decision making process becomes more robust. From the ED and the current QoS measurements, the CWW engine computes the satisfaction degree as the score given in equation (5). On the one hand, we have the MADMdesign_time, where the ED may become obsolete. On the other hand, the MADMcurrent_time is prone to increasing the number of reconfiguration decisions because it is more susceptible to the variability of the service market. Ideally, a reconfiguration decision should be made only when there is enough evidence that the current architectural configuration is violating its requirements.

Figure 8. The MADMdesign_time computes the score with the ED obtained at design time

Figure 9. The MADMcurrent_time computes the score with the ED obtained at current time

Figure 10. The MP-MADM corresponds to our proposal and it computes the score with the historical ED stored since design time

To analyze stability, we compute the Rviolations index as follows. At design time, we select all services whose satisfaction degree is above the threshold ρ; that is, we consider only the services that are likely to be selected as part of the architectural configuration. For each of the following periods, we count how many of the selected services drop below ρ. Finally, we compute the proportion of requirement violations, that is, the proportion of selected services whose satisfaction degree drops below ρ over the time interval considered.
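One possible reading of the Rviolations computation is sketched below. It counts every (selected service, later period) pair whose satisfaction degree falls below ρ and divides by the total number of such pairs; this normalisation is an assumption, since the text does not spell out the denominator. The function name `r_violations` and the sample scores are hypothetical.

```python
def r_violations(scores, rho):
    """Proportion of later-period checks in which a design-time-selected service falls below rho.

    scores maps a service name to its satisfaction degrees per period (index 0 = design time).
    """
    # Keep only the services that clear the threshold at design time.
    selected = [name for name, series in scores.items() if series[0] > rho]
    checks = sum(len(scores[name]) - 1 for name in selected)
    if checks == 0:
        return 0.0
    drops = sum(1 for name in selected for value in scores[name][1:] if value < rho)
    return drops / checks

# Made-up satisfaction degrees for three services over four periods.
scores = {
    "A": [0.9, 0.8, 0.4, 0.7],
    "B": [0.6, 0.2, 0.2, 0.2],
    "C": [0.3, 0.9, 0.9, 0.9],   # not selected at design time for rho = 0.5
}
index = r_violations(scores, rho=0.5)
```

A lower index means that fewer of the services chosen at design time are later flagged as violating, which is how the comparison between approaches is read.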

We executed the experiment 100 times. Table 1 shows the mean and standard deviation of the Rviolations index obtained for the three approaches, evaluated at thresholds ranging from 0.50 to 0.95. The numerical results show that the MP-MADM approach provides better stability than the other two approaches: it obtains the lowest mean Rviolations index for every threshold up to 0.85. At 0.90 and 0.95 its mean lies between those of the other two approaches, with differences well within one standard deviation.

| ρ | MADMdesign_time | MADMcurrent_time | MP-MADM |
|---|---|---|---|
| 0.50 | 0.1712 ± 0.0262 | 0.1806 ± 0.0249 | 0.1061 ± 0.0240 |
| 0.55 | 0.1748 ± 0.0265 | 0.1872 ± 0.0247 | 0.1184 ± 0.0284 |
| 0.60 | 0.1809 ± 0.0255 | 0.1996 ± 0.0239 | 0.1428 ± 0.0284 |
| 0.65 | 0.1831 ± 0.0269 | 0.2053 ± 0.0246 | 0.1600 ± 0.0281 |
| 0.70 | 0.2038 ± 0.0312 | 0.2099 ± 0.0326 | 0.1584 ± 0.0361 |
| 0.75 | 0.2045 ± 0.0325 | 0.2127 ± 0.0320 | 0.1849 ± 0.0333 |
| 0.80 | 0.2004 ± 0.0338 | 0.2075 ± 0.0326 | 0.1895 ± 0.0403 |
| 0.85 | 0.1916 ± 0.0317 | 0.1989 ± 0.0365 | 0.1907 ± 0.0421 |
| 0.90 | 0.1825 ± 0.0363 | 0.1861 ± 0.0381 | 0.1833 ± 0.0437 |
| 0.95 | 0.1729 ± 0.0437 | 0.1780 ± 0.0402 | 0.1775 ± 0.0469 |

Table 1. Mean and standard deviation (mean ± std) of the Rviolations index for each threshold ρ. The experiment was executed 100 times.

5. Conclusions

In this work, we have proposed a dynamic CWW architecture to support SBSs’ owners in their reconfiguration decisions during runtime under changing environments. As in our previous work, we have tackled the obsolescence of the models during runtime by proposing the reification of the requirements into LDM models. We have shown how the inadequacy of current models for representing non-functional requirements (or, in general, constraints of a qualitative nature) can be addressed. The obsolescence of the design-time models used during runtime has been mitigated transparently, and this mitigation is naturally underpinned by the LDM models that we provided. Specifically, the CWW engine provided in this paper assesses how well the configurations satisfy the models, and it uses the MP-MADM data aggregation algorithm to address both the obsolescence of the models and the risk of premature reconfigurations. In a nutshell, the main contribution of this paper is a better management of the trade-off between the obsolescence of models and the risk of making premature decisions under dynamic environments.

Acknowledgments

We are very grateful to the anonymous referees for their constructive suggestions to improve this paper. The work of R. Torres was partially supported by UNAB Grant DI-1303-16/RG. The work of R. Salas was supported by the grant FONDEF IDeA ID16I10322. H. Astudillo was partially supported by FONDECYT Grant 1140408.

Footnotes

* The data set can be obtained from http://www.uoguelph.ca/~qmahmoud/qws/ and was released in 2010.

References

Cite this article

TY - JOUR
AU - Romina Torres
AU - Rodrigo Salas
AU - Nelly Bencomo
AU - Hernan Astudillo
PY - 2018
DA - 2018/01/01
TI - An architecture based on computing with words to support runtime reconfiguration decision of service-based systems
JO - International Journal of Computational Intelligence Systems
SP - 272
EP - 281
VL - 11
IS - 1
SN - 1875-6883
UR - https://doi.org/10.2991/ijcis.11.1.21
DO - 10.2991/ijcis.11.1.21
ID - Torres2018
ER -