Research on the Realization Method of Augmented Reality based on Unity3D

- DOI

- 10.2991/jrnal.k.191203.004

- Keywords

- Augmented reality; Vuforia SDK; EasyAR SDK; Unity3D

- Abstract

To address the low stability and limited application conditions of traditional augmented reality, Unity3D is combined with the Vuforia SDK and the EasyAR SDK (Software Development Kit) to realize augmented reality. The resulting applications can run on mobile terminals and on the HoloLens augmented reality glasses. The 3D registration technique for augmented reality is also studied, and the principle and process of realizing augmented reality with Unity3D and the SDKs are given. Finally, using common image markers, the stability of the two SDKs is tested and analyzed in an angular variation experiment. Compared with the traditional system, the model stability is improved.

- Copyright

- © 2019 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

Augmented Reality (AR) uses computer graphics, computer vision and other technologies to superimpose virtual objects onto real scenes. It lets users perceive a new environment in which the real scene and the virtual scene are seamlessly integrated, and it presents them with a real-time interactive sensory effect [1].

Researchers have made notable progress in the field of augmented reality. Feiner et al. [2] published a paper on a prototype AR system at the International Conference on Graphic Images, which was subsequently widely cited by the American Computer Society. In 1999, the open source augmented reality development kit ARToolKit was released by the University of Washington [3]. With its release, a large number of augmented reality applications gradually emerged. In 2015, Microsoft released its head-mounted AR display, Microsoft HoloLens.

However, traditional augmented reality suffers from low stability and a limited application range. Here we use Unity3D (San Francisco, USA) combined with the Vuforia SDK and the EasyAR SDK to realize augmented reality. The result can also be applied on mobile terminals and on the HoloLens AR glasses, and the traditional black-and-white identification images of ARToolKit are extended to arbitrary ordinary images for recognition. In this paper, the 3D registration technology is studied with common marker images in mind, and augmented reality is realized with the two SDKs on the Unity3D platform. Finally, their results are analyzed and compared.

2. IMPLEMENTATION SCHEME

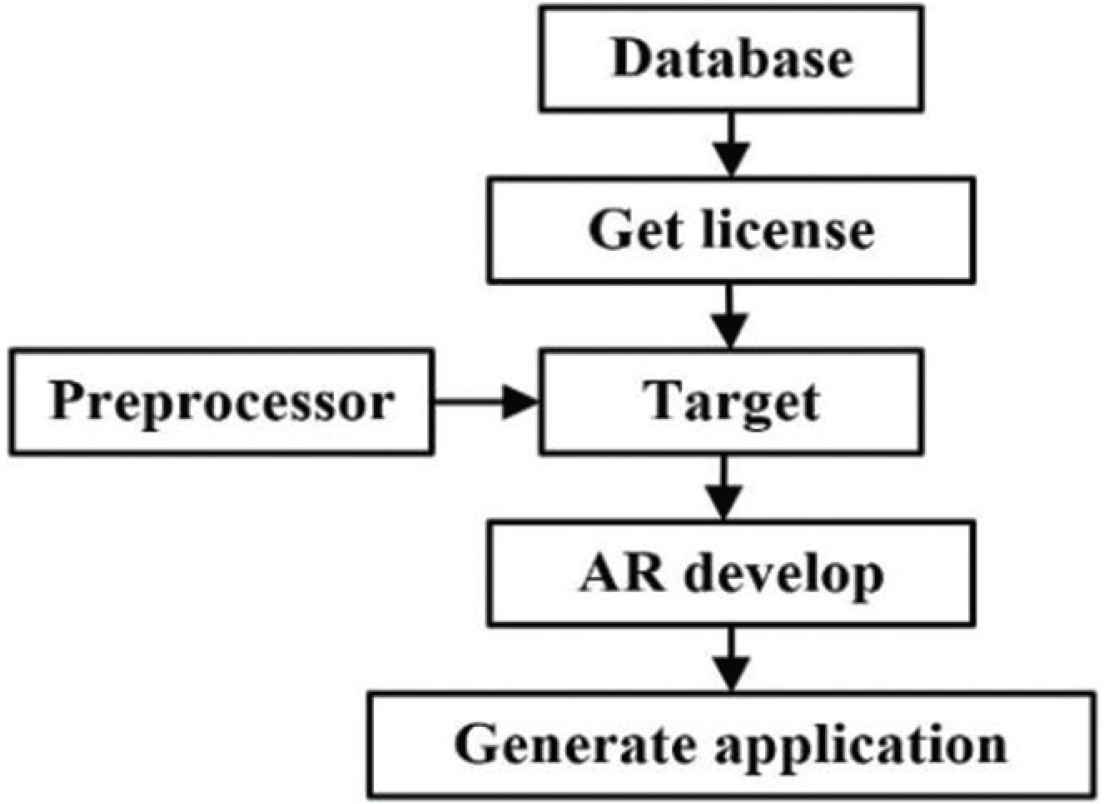

In this paper, OpenCV is used to automatically recognize and locate markers in a captured image, and OpenGL is then used to superimpose existing virtual objects on the camera image to realize augmented reality. The implementation scheme is shown in Figure 1.

Figure 1. Implementation scheme.

In this scheme, the feature points of the image marker are extracted from the captured image of the real-time scene. The relevant feature point information is obtained after detection and description; the points are matched using the Hamming distance, and the matches are filtered with the RANSAC (Random Sample Consensus) algorithm to obtain the final matching points. Then, by converting between coordinate systems, the position of the virtual object in the real scene is determined. Finally, augmented reality is rendered with OpenGL [4].
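The Hamming-distance matching step can be illustrated with a minimal NumPy sketch. It assumes packed binary descriptors (one `uint8` row per feature point, as binary descriptors such as ORB or BRIEF are usually stored). The function names, the mutual-nearest-neighbour check and the `max_distance` threshold are hypothetical choices for illustration, not details taken from the paper. In the scheme described above, the surviving matches would then be filtered with RANSAC.

```python
import numpy as np


def hamming_distance_matrix(desc_a, desc_b):
    """Pairwise Hamming distances between two sets of packed binary descriptors.

    desc_a has shape (n, k) and desc_b has shape (m, k), both dtype uint8;
    each row holds k bytes (8 * k bits) describing one feature point.
    """
    # XOR every row of desc_a with every row of desc_b, then count set bits.
    xor = np.bitwise_xor(desc_a[:, None, :], desc_b[None, :, :])
    return np.unpackbits(xor, axis=2).sum(axis=2)


def match_descriptors(desc_a, desc_b, max_distance=64):
    """Return (i, j, distance) for mutual nearest neighbours within max_distance."""
    dist = hamming_distance_matrix(desc_a, desc_b)
    best_b = dist.argmin(axis=1)  # nearest descriptor in b for each row of a
    best_a = dist.argmin(axis=0)  # nearest descriptor in a for each row of b
    matches = []
    for i, j in enumerate(best_b):
        # Keep a pair only if each descriptor is the other's nearest neighbour.
        if best_a[j] == i and dist[i, j] <= max_distance:
            matches.append((i, int(j), int(dist[i, j])))
    return matches
```

The mutual check is one simple way to discard ambiguous pairs before an outlier filter. A ratio test between the best and second-best distance is a common alternative.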

3. KEY TECHNOLOGIES

In order to realize augmented reality, three core technologies are necessary: three-dimensional registration technique, display technology and real-time interaction technology [5].

- Three-dimensional registration: To fuse the virtual and real worlds, the position of the tracking camera is calculated together with the position of the virtual object in the real scene.

- Display technology: Virtual images or models are presented to the user so that they appear fused with the real world.

- Real-time interaction: The user interacts with the virtual object, and information and feedback flow between them promptly and effectively.

In 3D registration, the marker's coordinates in the world coordinate system need to be converted into the pixel coordinate system of the camera.

Figure 2 shows the transformation between coordinate systems. Point P is a point in the world coordinate system Ow − Xw, Yw, Zw, which is also used to describe the position of the camera. Oc − Xc, Yc, Zc is the camera coordinate system, with the camera optical center as its origin. xoy is the image coordinate system, in which (x, y) are physical coordinates; uo0v is the pixel coordinate system, in which (u, v) are pixel coordinates.

Figure 2. Illustration of the coordinate systems.
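As a numerical illustration of this chain of coordinate systems, the following sketch projects a world point to pixel coordinates with a standard pinhole camera model. The function name and the parameters `dx` and `dy` (the physical size of one pixel) are assumptions introduced here, and the sample values are made up for illustration.

```python
import numpy as np


def world_to_pixel(p_world, R, T, f, dx, dy, u0, v0):
    """Project a 3D world point to pixel coordinates (pinhole model sketch).

    R is a 3x3 rotation matrix and T a 3-vector (world -> camera), f is the
    focal length, dx and dy are the physical pixel sizes (assumed symbols),
    and (u0, v0) is the pixel position of the image-plane origin.
    """
    # World -> camera: rigid transformation.
    xc, yc, zc = R @ np.asarray(p_world, dtype=float) + np.asarray(T, dtype=float)
    if zc <= 0:
        raise ValueError("point is not in front of the camera")
    # Camera -> image plane: perspective division by depth.
    x = f * xc / zc
    y = f * yc / zc
    # Image plane -> pixel: scale by pixel size and shift by the origin offset.
    return x / dx + u0, y / dy + v0


# Hypothetical camera: 4 mm focal length, 2 micrometre pixels, origin at (320, 240).
u, v = world_to_pixel([0.1, 0.05, 2.0], np.eye(3), [0.0, 0.0, 0.0],
                      f=0.004, dx=2e-6, dy=2e-6, u0=320.0, v0=240.0)
print(u, v)
```

With these sample values the point lands at roughly (420, 290) in pixel coordinates.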

Assuming that the coordinates of the point O0 in the pixel coordinate system are (u0, v0), the corresponding relationship between the pixel coordinate system and the image coordinate system is shown in Equation (1).
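In the standard pinhole camera model, this relationship is commonly written as follows, where $dx$ and $dy$ denote the physical width and height of one pixel (symbols assumed here, not defined in the text):

$$
u = \frac{x}{dx} + u_0, \qquad v = \frac{y}{dy} + v_0 \tag{1}
$$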

The transformation from the world coordinate system (Xw, Yw, Zw) to the camera coordinate system (Xc, Yc, Zc) is shown in Equation (2). The transformation matrix is composed of a rotation matrix R and a translation vector T, where R is a 3 × 3 orthogonal matrix and T = (t1, t2, t3)^T is a three-dimensional column vector.
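A rigid transformation of this kind is usually expressed in homogeneous coordinates; a plausible form, given the definitions of $R$ and $T$, is:

$$
\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}
=
\begin{bmatrix} R & T \\ 0^{\top} & 1 \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix} \tag{2}
$$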

Figure 3 shows the transformation between the camera coordinate system and the image coordinate system. If point P has coordinates (x, y) in the image coordinate system and (Xc, Yc, Zc) in the camera coordinate system, and the focal length of the camera is f, then by similar triangles [Equations (3) and (4)]:
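For a pinhole camera, the proportional relationship is conventionally stated as:

$$
x = \frac{f\,X_c}{Z_c} \tag{3}
$$

$$
y = \frac{f\,Y_c}{Z_c} \tag{4}
$$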

Figure 3. Camera and image coordinate system.

We can get the following Equation (5):
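Assuming Equation (5) is the homogeneous matrix form of the perspective projection $x = fX_c/Z_c$, $y = fY_c/Z_c$, it would read:

$$
Z_c \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
=
\begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix} \tag{5}
$$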

By substituting Equations (1) and (2) into Equation (5), Equation (6) for the transformation relation between world coordinate system and pixel coordinate system can be obtained:
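Under the standard pinhole model, and writing $dx$ and $dy$ for the physical size of one pixel (assumed symbols), the combined relation takes the following form:

$$
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix} \frac{1}{dx} & 0 & u_0 \\ 0 & \frac{1}{dy} & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} R & T \\ 0^{\top} & 1 \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix} \tag{6}
$$

The first two matrices depend only on the camera (intrinsic parameters), while the third depends on the camera pose relative to the world (extrinsic parameters).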


4. THE DEVELOPING PROCESS

Unity3D is a highly compatible platform that supports a variety of development environments and provides the plugins needed for higher-level development. Vuforia SDK is a development kit developed by Qualcomm, and EasyAR SDK is an AR engine developed by a Chinese company. Both work on the Unity3D platform, so they were used to develop our augmented reality applications in Unity3D.

Figure 4 shows the AR implementation steps.

Figure 4. AR implementation process.

In short, before augmented reality is realized with an SDK and Unity3D, a database should be created on the official website of that SDK to manage the identification images. A series of identification images are then selected and uploaded to the cloud database for image preprocessing, training, matching, and so on. After that, the prepared model is imported into Unity3D to develop the AR applications.

5. EXPERIMENT AND RESULTS

The experiment was carried out on a Windows 10 system with a GTX 960 graphics card, using Unity3D version 2018.2.10-f1. The development kits were Vuforia-unity-6-2-10 and Easyar-2.0.0-basic. The experiments were conducted under good lighting conditions.

Figure 5 compares the augmented reality results. The 3D model is superimposed on the recognized image and can move according to the operator’s commands: (a) is the 3DS MAX model; (b) and (c) show the AR effect with Vuforia SDK; (d–f) show the AR effect with EasyAR SDK.

Figure 5. A comparison test with augmented reality.

Figures 6 and 7 show the AR angle variation experiments.

Figure 6. Image angle variation experiment with Vuforia.

Figure 7. Image angle variation experiment with EasyAR.

In the experiments, with the same image marker and experimental equipment, the angle between the image marker and the camera was adjusted more than 50 times, and the identification success rates were recorded, as shown in Table 1.

| Marker image angle (°) | Vuforia (%) | EasyAR (%) |
|---|---|---|
| 0 | 0 | 0 |
| 15 | 70.5 | 0 |
| 30 | 81.4 | 30.5 |
| 45 | 95.6 | 90.4 |
| 60 | 98.0 | 94.8 |
| 90 | 98.2 | 96.7 |

Table 1. Identification success rate

Table 1 shows that when the image marker is at an angle of 45° or more, the identification success rate is high for both methods. When the angle is less than 45°, the identification success rate of Vuforia is markedly higher.
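The size of the gap at each angle can be read directly from Table 1. The short sketch below copies the table values and computes the difference in percentage points; the helper name `rate_gaps` is introduced here for illustration only.

```python
# Identification success rates (%) copied from Table 1.
angles = [0, 15, 30, 45, 60, 90]
vuforia = [0.0, 70.5, 81.4, 95.6, 98.0, 98.2]
easyar = [0.0, 0.0, 30.5, 90.4, 94.8, 96.7]


def rate_gaps(angles, rates_a, rates_b):
    """Return {angle: rates_a - rates_b}, rounded to one decimal place."""
    return {ang: round(a - b, 1) for ang, a, b in zip(angles, rates_a, rates_b)}


gaps = rate_gaps(angles, vuforia, easyar)
for ang, gap in gaps.items():
    print(f"{ang:>2} deg: Vuforia - EasyAR = {gap:+.1f} percentage points")
```

The gap is largest at 15° and 30° (70.5 and 50.9 points) and narrows to about 5 points or less from 45° upward.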

6. CONCLUSION

In this paper, the key technologies of augmented reality are studied, and several augmented reality applications are developed and verified. The identification success rate and stability of the Vuforia and EasyAR methods are also compared. Only the main functions have been tested so far; more functions will be developed in future work.

Authors Introduction

Dr. Jiwu Wang

He is an Associate Professor at School of Mechanical, Electronic and Control Engineering, Beijing Jiaotong University, China. He obtained his PhD degree at Tsinghua University, China in 1999. His main interests are computer vision, robotic control and fault diagnosis.

Mr. Weixin Zeng

He is a master student at School of Mechanical, Electronic and Control Engineering, Beijing Jiaotong University, Beijing China. His main interests are VR and AR.

REFERENCES

Cite this article

TY - JOUR
AU - Jiwu Wang
AU - Weixin Zeng
PY - 2019
DA - 2019/12/23
TI - Research on the Realization Method of Augmented Reality based on Unity3D
JO - Journal of Robotics, Networking and Artificial Life
SP - 195
EP - 198
VL - 6
IS - 3
SN - 2352-6386
UR - https://doi.org/10.2991/jrnal.k.191203.004
DO - 10.2991/jrnal.k.191203.004
ID - Wang2019
ER -