EBC-Estimator of Multidimensional Bayesian Threshold in Case of Two Classes

- DOI

- 10.2991/jsta.d.200824.001

- Keywords

- Estimator; Multidimensional Bayesian threshold; Mixture with varying concentrations

- Abstract

Some threshold-based classification rules for the case of two classes are defined. Under the assumption that the learning sample is obtained from a mixture with varying concentrations, the empirical-Bayesian classification (EBC) estimator of the multidimensional Bayesian threshold is constructed. Conditions for the convergence in probability of the estimator are found.

- Copyright

- © 2020 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

The model of a mixture of several probability distributions was first mentioned by Newcomb [1] and Pearson [2]. Such mixtures arise naturally in many areas. In particular, mixtures of gamma distributions [3] are used in reliability theory and lifetime analysis. Examples of the use of mixtures of normal distributions in the processing of biological and physiological data are given in [4]. In Slud [5], a mixture of two exponential distributions is used to describe the software debugging process. Some applications of mixture models in medical diagnostics are given in [6,7].

The technique of nonparametric analysis of mixtures in which the concentrations change from observation to observation is developing actively. The problem of estimating the distributions in the case of known concentrations is considered in the works of Maiboroda [8,9]. Estimates of concentrations in two-component mixtures are considered in [10]. Works by Sugakova [11] and Ivanko [12] are devoted to the estimation of component distribution densities. Correction algorithms for weighted empirical distribution functions are proposed in [13].

For the theoretical study of problems of nonparametric regression, nonhomogeneous weighted empirical distribution functions were used by Stoune [14]. These were applied by Maiboroda to the analysis of mixtures. In particular, Maiboroda [8] found conditions under which the weighted empirical distribution functions are unbiased and minimal estimators of the unknown distribution functions of the mixture components.

Classifying an object by its numerical characteristic is an important theoretical problem with practical significance; for example, a person is defined as “not healthy” if their body temperature exceeds 37°C. To solve this problem we consider the threshold-based rule of type

According to this rule, an object is classified to the first class if its characteristic does not exceed a threshold

However, it is often necessary to classify an object using more than one threshold; for example, a person is defined as “not healthy” if their body temperature exceeds 37°C or is lower than 36°C. Another example: a person is sick if their hemoglobin level exceeds 84 units or is lower than 72 units. Accordingly, one can apply the classifiers of type

In particular, this problem is discussed in [20,21].
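The threshold-based rules described above can be sketched in a few lines. This is only an illustration: the function name `classify`, the `first_class` parameter, and the convention that a boundary point belongs to the region on its left (matching "does not exceed") are assumptions made for this sketch, not notation from the paper. The numerical thresholds are the body-temperature values from the text.

```python
from bisect import bisect_left

def classify(x, thresholds, first_class=1):
    """Assign x to class 1 or 2 using a sorted list of thresholds.

    The real line is split into regions by the thresholds, and the class
    label alternates from region to region, starting with `first_class`
    on the leftmost region. A point equal to a threshold is assigned to
    the region on its left (assumed convention).
    """
    region = bisect_left(thresholds, x)  # number of thresholds strictly below x
    other_class = 2 if first_class == 1 else 1
    return first_class if region % 2 == 0 else other_class

# One threshold: class 1 if the characteristic does not exceed 37.0.
print(classify(36.8, [37.0]))  # class 1
print(classify(37.5, [37.0]))  # class 2

# Two thresholds: class 2 ("not healthy") below 36 or above 37, class 1 in between.
print(classify(36.6, [36.0, 37.0], first_class=2))
print(classify(38.2, [36.0, 37.0], first_class=2))
```

For continuous characteristics the boundary convention has probability zero of mattering, so either choice gives the same classification risk.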

The case of two thresholds and three prescribed classes deserves special attention. An example is the classification of disease stages. For instance, the tumor marker CA 15-3 is used in the diagnosis of breast cancer: if its value is less than 22 IU/mL, the person is considered healthy; if it lies between 22 and 30 IU/mL, a precancerous condition can be diagnosed; if it is above 30 IU/mL, the patient has cancer. In some technical problems a substance must be considered in its different aggregate states: gaseous, liquid, and solid. The transition from one state to another occurs at a specific temperature, so a boiling point and a melting point serve as thresholds. Accordingly, 6 classifiers of forms

2. SETTING OF THE PROBLEM

The problem of classification of an object

The a priori probabilities

The family of classifiers is denoted by

Let,

Analogously, for

A classification rule

Let,

Denote Bayesian threshold for classifier

Analogously, let

For

Let,

For

Let,

For

Denote

When determining the best threshold, one faces the problem of estimating it from a learning sample whose members are classified correctly. We consider the empirical-Bayesian classification method under the assumption that the learning sample is obtained from a mixture with varying concentrations.

The distribution functions

Here

To estimate the distribution functions

One can apply kernel estimators to estimate the densities of distributions:

The empirical-Bayesian estimator is constructed as follows. At first, one determines the set

Second, one chooses
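The ingredients of this construction can be illustrated with a minimal sketch. It rests on assumptions that are not taken from this text: observation j is drawn from the first component with known concentration w_j and from the second with concentration 1 - w_j; the weights in `mvc_weights` are the linear-in-w_j weights commonly used for two-component mixtures with varying concentrations; and the densities are estimated with a weighted Gaussian kernel estimator. The function names, the bandwidth, and the simulated components N(0,1) and N(3,1) are hypothetical choices for illustration only.

```python
import math
import random

def mvc_weights(w):
    """Weights a1, a2 for a two-component mixture with concentrations w_j.

    Assumed form: a1_j = ((1 - s1) w_j + (s2 - s1)) / delta and
    a2_j = (s2 - s1 w_j) / delta, where s1 and s2 are the means of w_j and
    w_j^2 and delta = s2 - s1^2. They satisfy mean(a1 * w) = 1,
    mean(a1 * (1 - w)) = 0, and symmetrically for a2.
    """
    n = len(w)
    s1 = sum(w) / n
    s2 = sum(v * v for v in w) / n
    delta = s2 - s1 * s1
    if delta <= 0:
        raise ValueError("concentrations must vary across observations")
    a1 = [((1.0 - s1) * v + (s2 - s1)) / delta for v in w]
    a2 = [(s2 - s1 * v) / delta for v in w]
    return a1, a2

def weighted_kde(sample, weights, bandwidth):
    """Weighted Gaussian kernel density estimate (may be negative in places,
    because the weights themselves can be negative)."""
    n = len(sample)
    norm = math.sqrt(2.0 * math.pi)

    def density(x):
        total = 0.0
        for xi, a in zip(sample, weights):
            z = (x - xi) / bandwidth
            total += a * math.exp(-0.5 * z * z) / norm
        return total / (n * bandwidth)

    return density

# Simulated learning sample (hypothetical components N(0,1) and N(3,1)).
random.seed(0)
n = 2000
w = [(j + 1) / n for j in range(n)]
sample = [random.gauss(0.0, 1.0) if random.random() < wj else random.gauss(3.0, 1.0)
          for wj in w]

a1, a2 = mvc_weights(w)
h1_hat = weighted_kde(sample, a1, bandwidth=0.4)
h2_hat = weighted_kde(sample, a2, bandwidth=0.4)
print(h1_hat(0.0), h2_hat(3.0))
```

Under these assumptions, the estimated densities could be plugged into a search for candidate threshold points, after which the candidate with the smallest estimated risk would be selected.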

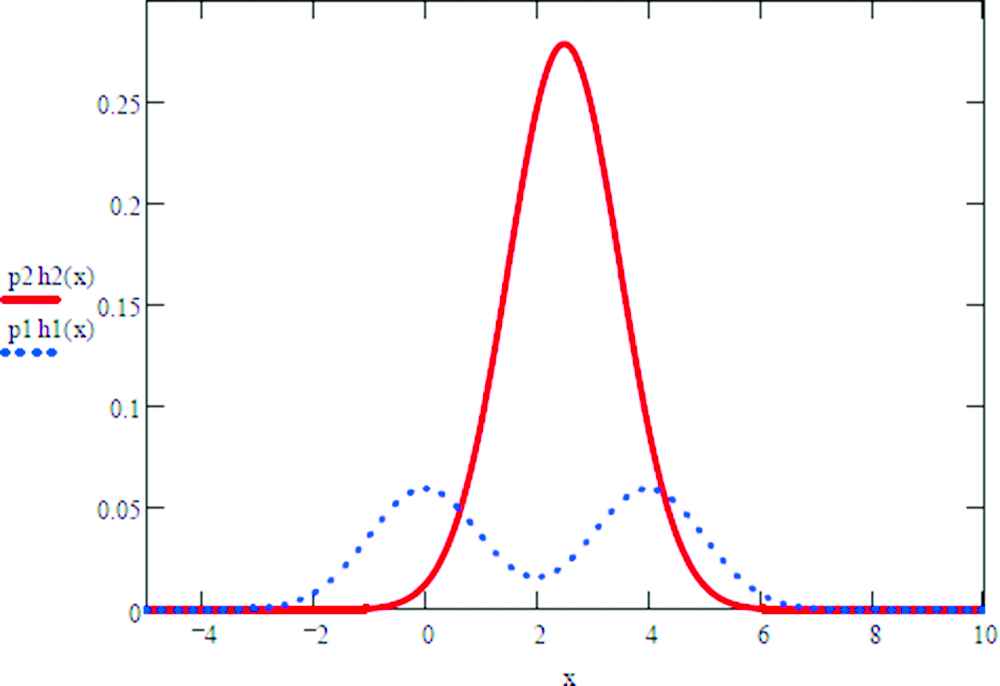

Examples of a multidimensional threshold in the case of two classes are shown in Figures 1 and 2 (Mathcad v.13 was used).

Three-dimensional threshold: h1 = 0.5N(0,1) + 0.5N(4,1), h2 = 0.4N(2,1) + 0.6N(6,1), p1 = 0.3, p2 = 0.7.

Two-dimensional threshold: h1 = 0.5N(0,1) + 0.5N(4,1), h2 = N(2.5,1), p1 = 0.3, p2 = 0.7.
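The two examples above can be checked numerically with a short sketch. It assumes that the components of the threshold are the points where p1 h1(t) = p2 h2(t), which is the usual Bayes decision boundary, and it locates them by scanning a grid for sign changes and refining each one by bisection. The helper names, the search interval [-5, 11], and the grid step are assumptions of this sketch; the densities and prior probabilities are those stated in the captions.

```python
import math

def phi(x, mu=0.0):
    """Density of N(mu, 1) at x."""
    z = x - mu
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def mixture(components):
    """Density of a mixture given as (weight, mean) pairs, unit variances."""
    return lambda x: sum(wt * phi(x, mu) for wt, mu in components)

def bayes_threshold(h1, h2, p1, p2, lo=-5.0, hi=11.0, step=0.01):
    """Points t in [lo, hi] where p1*h1(t) - p2*h2(t) changes sign."""
    g = lambda t: p1 * h1(t) - p2 * h2(t)
    roots = []
    n = int(round((hi - lo) / step))
    for i in range(n):
        a, b = lo + i * step, lo + (i + 1) * step
        if g(a) * g(b) < 0:
            for _ in range(60):  # bisection refinement
                m = 0.5 * (a + b)
                if g(a) * g(m) <= 0:
                    b = m
                else:
                    a = m
            roots.append(0.5 * (a + b))
    return roots

h1 = mixture([(0.5, 0.0), (0.5, 4.0)])

# First example: h2 = 0.4 N(2,1) + 0.6 N(6,1)
three_points = bayes_threshold(h1, mixture([(0.4, 2.0), (0.6, 6.0)]), 0.3, 0.7)
# Second example: h2 = N(2.5,1)
two_points = bayes_threshold(h1, mixture([(1.0, 2.5)]), 0.3, 0.7)

print(len(three_points), three_points)
print(len(two_points), two_points)
```

With these inputs the search finds three sign changes in the first example and two in the second, which agrees with the dimensions stated in the captions.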

3. MAIN RESULTS

3.1. Choice of Classifier

The choice of the classifier

Theorem 3.1.1.

If root of (4) minimizes

Proof.

The statement follows from the properties

Remark 3.1.1.

The next theorem can be proved analogously to Theorem 3.1.1.

Theorem 3.1.2.

If root of (3) minimizes

Proof.

The statement follows from the properties

Remark 3.1.2.

3.2. The Convergence in Probability of EBC-Estimator

In what follows we assume that

(A). The threshold

(Bk). The limits

Lemma 3.2.1.

Let conditions (A) and (Bk) hold. Assume that densities

Then

Proof.

According to Theorem 1 of [11], the assumptions of the theorem imply that

Now we shall show that

Since,

Thus,

Lemma 3.2.2.

Let the assumptions of Lemma 3.2.1 for

Proof.

Fix

Choose

Fix an arbitrary

Theorem 3.2.1.

Assume that the conditions of Lemma 3.2.1 hold. Then

Proof.

Since (5)

This completes the proof of the theorem, since

4. CONCLUSION

In this paper, we found conditions for the convergence in probability of the estimator of the Bayesian threshold constructed by the empirical-Bayesian classification method for a sample from a mixture with varying concentrations.

CONFLICT OF INTEREST

The author has no conflicts of interest to declare.

ACKNOWLEDGMENTS

I thank the reviewers whose insightful comments helped me to improve this paper.

REFERENCES

Cite this article

TY  - JOUR
AU  - Oksana Kubaychuk
PY  - 2020
DA  - 2020/09/08
TI  - EBC-Estimator of Multidimensional Bayesian Threshold in Case of Two Classes
JO  - Journal of Statistical Theory and Applications
SP  - 342
EP  - 351
VL  - 19
IS  - 3
SN  - 2214-1766
UR  - https://doi.org/10.2991/jsta.d.200824.001
DO  - 10.2991/jsta.d.200824.001
ID  - Kubaychuk2020
ER  -