Preliminary Comparative Experiments of Support Vector Machine and Neural Network for EEG-based BCI Mobile Robot Control

- DOI

- 10.2991/jrnal.k.190220.014

- Keywords

- Brain computer interface; electroencephalography; support vector machine; neural network; mobile robot control

- Abstract

Here we present experimental results of Electroencephalogram (EEG)-based Brain Computer Interface (BCI) for mobile robot control by means of Support Vector Machine (SVM) and Neural Network (NN). The authors had trained NNs using EEGs collected from subjects and verified the performance as BCI; however, the results were unsatisfactory for practical use. In this study, we have used SVM with Radial Basis Function (RBF) kernel function for further improvement and compared the performance with the NNs. Consequently, the SVMs outperformed the NNs in almost all cases.

- Copyright

- © 2019 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

A Brain–Computer Interface (BCI) provides a means to convey one's intention and volition to a computer on the basis of brain activity. Many BCI studies have used the Electroencephalogram (EEG) because it is noninvasive. BCI technology not only compensates for impairments but also expands and enhances human abilities. Electric wheelchair control and robotic arm control are typical application examples of EEG-based BCI. Singla et al. [1,2] developed a Steady State Visual Evoked Potential (SSVEP)-based BCI for controlling a wheelchair using a multi-class SVM. Meng et al. [3] experimentally investigated a noninvasive BCI for reach-and-grasp tasks with a robotic arm. Mobile robot control by EEG-based BCI is also a challenging and promising technology [4].

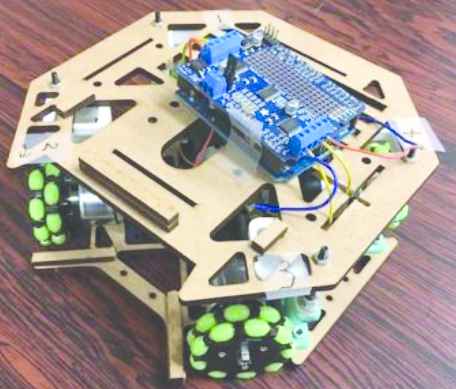

The authors have been developing an EEG-based BCI for mobile robot control by means of machine learning techniques, especially multi-layered neural networks (NNs) [5,6]. We fabricated an omnidirectional mobile robot and collected brain waves from subjects who pictured a movement of the mobile robot in their minds. Using the collected brain waves, we trained multi-layered NNs to obtain brain wave pattern classifiers for BCI and evaluated them. However, the trained NNs could not achieve practical classification performance.

Support Vector Machine (SVM) is also a popular machine learning method that has been applied to various fields and accomplished tremendous results. In this study, we use SVM with RBF kernel function as classifiers for EEG patterns and compare their performance with the multi-layered NN we used in the previous studies.

2. EXPERIMENTS FOR EEG DATA COLLECTION

The authors carried out EEG measurement experiments to collect EEG signals for training NNs and SVMs. The same experiments had been conducted with three subjects in the previous study [5]. For this study, we newly obtained EEG signals from three other subjects, a 21-year-old and two 22-year-old male college students; EEG signals recorded from a total of six subjects (named A–F) were used in this study. As in the previous EEG measurement experiments, the EEG headset shown in Figure 1 was used for recording EEG signals, together with the omni-wheel mobile robot shown in Figure 2, which moves according to predetermined commands. Commands to move forward, backward, to the right, and to the left were given to the mobile robot in these experiments.

Figure 1. EMOTIV EPOC (wireless portable EEG headset).

Figure 2. Omni-wheel mobile robot.

Each subject performed 30 imagining trials per direction, i.e. 120 trials in total, on each of five different days; therefore, we collected EEG data of 600 trials for each subject. One trial consists of about 5 s of rest, 5 s of task (imagining), and 5 s of rest again. Brain waves of the subject were recorded throughout the 15 s trial. The subject silently counted 5 s during the first rest, and then pressed a key on a keypad just before starting the task so that the PC used for EEG recording could recognize the timing. When a beep sounded after 5 s, the subject stopped the task and rested for about 5 s until the next trial.
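The trial structure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sampling rate `FS = 128` Hz is not given in the text, and `task_window` is a hypothetical helper that cuts the 5 s task out of a 15 s recording, starting at the sample where the key press was registered.

```python
FS = 128        # assumed sampling rate in Hz (not stated in the text)
REST_S = 5      # approximate rest length before and after the task, in seconds
TASK_S = 5      # task (imagining) length in seconds

TRIALS_PER_DIRECTION = 30
DIRECTIONS = ("forward", "backward", "right", "left")
DAYS = 5

# 30 trials x 4 directions x 5 days
trials_per_day = TRIALS_PER_DIRECTION * len(DIRECTIONS)
trials_per_subject = trials_per_day * DAYS


def task_window(recording, key_press_index, fs=FS, task_s=TASK_S):
    """Return the samples of the task period that starts at the key press."""
    return recording[key_press_index:key_press_index + task_s * fs]


# A dummy single-channel 15 s recording: rest, task, rest.
recording = list(range((REST_S + TASK_S + REST_S) * FS))
window = task_window(recording, key_press_index=REST_S * FS)
print(trials_per_day, trials_per_subject, len(window))
```

Anchoring the window on the key press rather than on a fixed offset matters because the first rest is only counted silently by the subject and is therefore approximate.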

The subjects were given two different imagining tasks, called CLOSED-EYES and OPEN-EYES.

- CLOSED-EYES: close your eyes and imagine a specified arrow.

- OPEN-EYES: while watching the mobile robot move in a certain direction, imagine the robot’s motion.

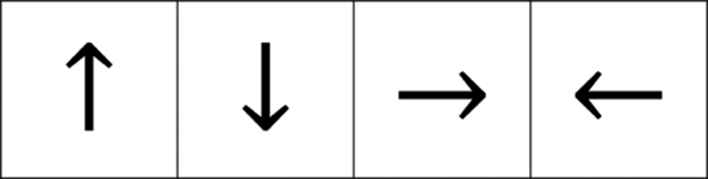

The CLOSED-EYES experiment was carried out first, then the OPEN-EYES one. Figure 3 shows the arrows, one of which the subjects imagined in each trial of the CLOSED-EYES task in the EEG measurement experiment. The up, down, right, and left arrows correspond to the mobile robot moving forward, backward, to the right, and to the left, respectively.

Figure 3. Arrows indicating directions (up, down, right, and left).

Figure 4 shows the procedure of the trials in the experiments for the OPEN-EYES task. At the beginning of the procedure, the subject silently counts 5 s while gazing at the mobile robot at rest. During the task period, the subject keeps gazing at the mobile robot as it moves in a certain direction and imagines the robot’s motion for 5 s. When the task finishes, the subject closes their eyes to rest and the mobile robot automatically moves back to the initial position. The subject can tell when to end the rest even with their eyes closed because the mobile robot emits a sound during the return movement.

Figure 4. Procedure of trials in experiments for OPEN-EYES.

The EEG signals recorded with the 14 electrodes of the EPOC during the 5 s task were preprocessed as follows. First, the EEG signal components in the frequency range from 8 to 30 Hz were extracted by a band-pass filter. Second, the power spectrum of the filtered EEG signals was calculated and smoothed with a moving average. Finally, the power spectrum was normalized between 0 and 1 and resampled at 1 Hz intervals. This preprocessing produces a 322-dimensional feature vector from the 5 s EEG signals (consistent with 14 electrodes × 23 frequency bins from 8 to 30 Hz), which was used as the input to the EEG pattern classifiers.
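The preprocessing steps can be illustrated with a minimal, standard-library-only Python sketch. It is not the authors' implementation, and several details are assumptions because the text does not specify them: the 128 Hz sampling rate, the 5-bin moving-average width, per-channel normalization, and nearest-bin resampling. In addition, the band-pass filter is replaced here by simply keeping the DFT bins between 8 and 30 Hz. Under these assumptions, 14 channels × 23 integer frequencies give the 322 features mentioned in the text.

```python
import cmath
import math
import random

FS = 128             # assumed sampling rate in Hz (not stated in the text)
N_CHANNELS = 14      # electrodes of the EPOC, from the text
TASK_SECONDS = 5     # task window length, from the text
F_LO, F_HI = 8, 30   # pass band in Hz, from the text


def band_power(signal, fs=FS, f_lo=F_LO, f_hi=F_HI):
    """Power spectrum of one channel restricted to [f_lo, f_hi] Hz.

    Keeping only the in-band DFT bins stands in for the band-pass filter.
    """
    n = len(signal)
    k_lo, k_hi = round(f_lo * n / fs), round(f_hi * n / fs)
    freqs, powers = [], []
    for k in range(k_lo, k_hi + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        freqs.append(k * fs / n)
        powers.append(abs(coeff) ** 2 / n)
    return freqs, powers


def moving_average(values, width=5):
    """Centered moving average; the window shrinks at the edges (assumed width)."""
    half = width // 2
    smoothed = []
    for i in range(len(values)):
        window = values[max(0, i - half):i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def normalize01(values):
    """Min-max normalization to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span > 0 else 0.0 for v in values]


def resample_1hz(freqs, values, f_lo=F_LO, f_hi=F_HI):
    """Take the value at the bin closest to each integer frequency."""
    picked = []
    for f in range(f_lo, f_hi + 1):
        idx = min(range(len(freqs)), key=lambda i: abs(freqs[i] - f))
        picked.append(values[idx])
    return picked


def extract_features(trial):
    """trial: one list of task-period samples per channel."""
    features = []
    for channel in trial:
        freqs, powers = band_power(channel)
        features.extend(resample_1hz(freqs, normalize01(moving_average(powers))))
    return features


# Synthetic noise stands in for a real 5 s, 14-channel recording.
rng = random.Random(0)
trial = [[rng.gauss(0.0, 1.0) for _ in range(FS * TASK_SECONDS)]
         for _ in range(N_CHANNELS)]
features = extract_features(trial)
print(len(features))
```

The direct DFT is slow but keeps the example dependency-free; an FFT routine from a numerical library would be the usual choice for real recordings.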

3. EEG SIGNAL CLASSIFICATION WITH MULTI-LAYERED NEURAL NETWORK AND SUPPORT VECTOR MACHINE

A Stacked Autoencoder (SAE) with a softmax output layer was adopted as the multi-layered NN for EEG pattern classification. In the training process of the NNs, the hyperparameters were optimized by Bayesian optimization [7]. Table 1 describes the optimized hyperparameters and their options. The SAEs and Bayesian optimization were implemented using Chainer (ver. 1.18.0, Preferred Networks, Inc.) [8] and GPyOpt (ver. 1.2.0, the Sheffield machine learning group and collaborators) [9].

| Hyperparameters | Options |
|---|---|
| Number of hidden layers | 1–3 |
| Number of nodes in 1st hidden layer | 250 or 300 |
| Number of nodes in 2nd hidden layer | 150 or 200 |
| Number of nodes in 3rd hidden layer | 50 or 100 |
| Iteration of pretraining each AE (epoch) | 1000 or 2000 |
| Iteration of finetuning (epoch) | 5000 or 10,000 |
| Dropout 50% in pretraining AEs | OFF or ON |
| Dropout 50% in finetuning | OFF or ON |

Table 1. Hyperparameters and options
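To give a sense of the size of this search space, the following Python sketch enumerates every combination of the listed options. It is an illustration only, not the authors' GPyOpt setup; the dictionary keys are hypothetical names, and the sketch assumes that the node count of a hidden layer only matters when that layer exists.

```python
from itertools import product

# Options per hidden layer (1st, 2nd, 3rd), as listed in the table.
NODE_OPTIONS = [(250, 300), (150, 200), (50, 100)]
PRETRAIN_EPOCHS = (1000, 2000)
FINETUNE_EPOCHS = (5000, 10000)
DROPOUT = (False, True)  # OFF or ON


def search_space():
    """Yield every hyperparameter combination as a dict (hypothetical keys)."""
    for n_layers in (1, 2, 3):
        for nodes in product(*NODE_OPTIONS[:n_layers]):
            for pre, fine, drop_pre, drop_fine in product(
                    PRETRAIN_EPOCHS, FINETUNE_EPOCHS, DROPOUT, DROPOUT):
                yield {
                    "hidden_nodes": nodes,
                    "pretrain_epochs": pre,
                    "finetune_epochs": fine,
                    "dropout_pretrain": drop_pre,
                    "dropout_finetune": drop_fine,
                }


configs = list(search_space())
print(len(configs))  # (2 + 4 + 8) architectures x 16 training settings = 224
```

Bayesian optimization evaluates only a subset of these configurations, which is relevant here because each evaluation involves thousands of training epochs.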

As another EEG pattern classifier, a nonlinear SVM with RBF kernel function was used. In the training process of the SVMs, the input feature vectors were scaled, and the RBF kernel parameter and the regularization parameter were optimized using grid search. LIBSVM (ver. 3.22, Chih-Chung Chang and Chih-Jen Lin) [10] was used for implementing the SVMs.
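For reference, the standard form of the RBF kernel and of the resulting binary SVM decision function is given below. The symbols are the conventional ones and are not taken from the text: γ is the kernel width parameter, C is the regularization parameter that bounds the dual coefficients α_i, and (x_i, y_i) are the training feature vectors and their labels. The grid search mentioned above would then run over pairs (C, γ). Since LIBSVM handles multi-class problems with a one-against-one scheme, the four motion classes would presumably be covered by six such binary classifiers.

```latex
K(\mathbf{x}, \mathbf{x}') = \exp\!\left(-\gamma \,\lVert \mathbf{x} - \mathbf{x}' \rVert^{2}\right), \qquad \gamma > 0

f(\mathbf{x}) = \operatorname{sign}\!\left( \sum_{i} \alpha_i \, y_i \, K(\mathbf{x}_i, \mathbf{x}) + b \right), \qquad 0 \le \alpha_i \le C
```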

The classification performance of the NNs and SVMs trained in this study was evaluated using fivefold cross validation. Table 2 shows the computation environment for development of the SAEs and SVMs.
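A fivefold split of the 120 samples recorded on one day can be sketched as follows. The text does not describe how the folds were formed, so the shuffling, the seed, and the `fit_and_score` callback (a hypothetical function that trains on the training indices and returns the accuracy on the test indices) are all assumptions of this illustration.

```python
import random


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k roughly equal, disjoint folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


def cross_validated_rate(n_samples, fit_and_score, k=5):
    """Average the per-fold accuracies and return a percentage."""
    scores = [fit_and_score(train, test)
              for train, test in kfold_indices(n_samples, k)]
    return 100.0 * sum(scores) / len(scores)


splits = list(kfold_indices(120))
print([(len(train), len(test)) for train, test in splits])

# Dummy scorer standing in for a real classifier: always 50% accuracy.
print(cross_validated_rate(120, lambda train, test: 0.5))
```

With 120 samples, each fold trains on 96 samples and tests on 24, so every sample is used for testing exactly once.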

| Component | Specification |
|---|---|
| CPU | Intel® Core™ i7-6800K CPU @ 3.40 GHz |
| Memory | 16 GB (DDR4-2133 4 GB × 4) |
| Storage | SSD 240 GB + HDD 1 TB |
| GPU | NVIDIA GeForce GTX1060 6 GB GDDR5 |
| OS | Ubuntu 16.04 LTS |

Table 2. Specifications of computation environment for development of SAEs and SVMs

4. RESULTS AND DISCUSSION

Tables 3 and 4 show the results of the comparative experiments for the NNs and SVMs trained with the EEG signals recorded in the CLOSED-EYES task. The values in the rightmost columns are the classification rates (%) averaged over the five experiment days. The better averaged classification rate between the NNs and SVMs is shown in red. Comparative results for the OPEN-EYES task are shown in Tables 5 and 6.

| Subject | 1st day | 2nd day | 3rd day | 4th day | 5th day | Average |
|---|---|---|---|---|---|---|
| A | 55.00 | 57.50 | 66.67 | 79.17 | 92.50 | 70.17 |
| B | 50.00 | 70.00 | 53.33 | 66.67 | 54.17 | 58.83 |
| C | 44.17 | 49.17 | 51.77 | 76.67 | 33.33 | 51.02 |
| D | 58.34 | 70.00 | 54.17 | 50.83 | 40.83 | 54.83 |
| E | 45.83 | 45.00 | 56.67 | 44.17 | 29.17 | 44.17 |
| F | 76.67 | 42.50 | 40.00 | 39.17 | 57.50 | 51.17 |

Table 3. Classification rate percentages of NNs trained with Bayesian optimization using 120 samples recorded in CLOSED-EYES

| Subject | 1st day | 2nd day | 3rd day | 4th day | 5th day | Average |
|---|---|---|---|---|---|---|
| A | 57.97 | 49.04 | 64.40 | 58.80 | 62.74 | 58.59 |
| B | 64.70 | 63.04 | 71.79 | 72.50 | 56.61 | 65.73 |
| C | 93.63 | 86.73 | 55.36 | 59.70 | 62.02 | 71.49 |
| D | 52.44 | 49.94 | 55.95 | 61.25 | 55.71 | 55.06 |
| E | 64.64 | 71.43 | 65.00 | 72.32 | 58.33 | 66.34 |
| F | 65.18 | 68.16 | 65.18 | 63.40 | 56.79 | 63.74 |

Table 4. Classification rate percentages of SVMs trained using 120 samples recorded in CLOSED-EYES

| Subject | 1st day | 2nd day | 3rd day | 4th day | 5th day | Average |
|---|---|---|---|---|---|---|
| A | 95.83 | 48.33 | 45.83 | 41.67 | 75.00 | 61.33 |
| B | 66.67 | 57.50 | 60.83 | 33.33 | 43.33 | 52.33 |
| C | 60.00 | 40.00 | 49.17 | 76.67 | 38.33 | 52.83 |
| D | 52.50 | 79.17 | 37.50 | 38.34 | 35.00 | 48.50 |
| E | 49.17 | 33.34 | 45.84 | 19.17 | 31.67 | 35.84 |
| F | 51.67 | 62.50 | 55.84 | 48.34 | 45.83 | 52.84 |

Table 5. Classification rate percentages of NNs trained with Bayesian optimization using 120 samples recorded in OPEN-EYES

| Subject | 1st day | 2nd day | 3rd day | 4th day | 5th day | Average |
|---|---|---|---|---|---|---|
| A | 63.04 | 56.61 | 62.68 | 66.31 | 65.83 | 62.89 |
| B | 68.75 | 73.57 | 66.90 | 63.75 | 77.02 | 70.00 |
| C | 65.00 | 58.57 | 67.20 | 60.24 | 69.40 | 64.08 |
| D | 69.52 | 49.88 | 55.06 | 52.86 | 60.00 | 57.46 |
| E | 62.62 | 67.50 | 63.39 | 54.94 | 72.20 | 64.13 |
| F | 54.64 | 56.67 | 45.24 | 43.21 | 42.62 | 48.48 |

Table 6. Classification rate percentages of SVMs trained using 120 samples recorded in OPEN-EYES

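These results show that, on average, the SVMs outperformed the NNs in both task types, CLOSED-EYES and OPEN-EYES. The classification rates averaged over all subjects were 55.03% for the NNs and 63.49% for the SVMs in CLOSED-EYES, and 50.61% for the NNs and 61.17% for the SVMs in OPEN-EYES. In terms of the per-subject five-day averages, the SVMs were better in 10 of the 12 subject-task combinations; the exceptions were subject A in CLOSED-EYES and subject F in OPEN-EYES.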
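The overall averages can be reproduced from the rightmost columns of Tables 3 to 6. The short Python check below copies those per-subject averages, recomputes the mean over the six subjects for each task and classifier, and lists the cases in which the NN average exceeded the SVM average. The variable names are chosen for this illustration only.

```python
# Per-subject five-day averages (subjects A to F) copied from Tables 3 to 6.
AVERAGES = {
    ("CLOSED-EYES", "NN"):  [70.17, 58.83, 51.02, 54.83, 44.17, 51.17],
    ("CLOSED-EYES", "SVM"): [58.59, 65.73, 71.49, 55.06, 66.34, 63.74],
    ("OPEN-EYES", "NN"):    [61.33, 52.33, 52.83, 48.50, 35.84, 52.84],
    ("OPEN-EYES", "SVM"):   [62.89, 70.00, 64.08, 57.46, 64.13, 48.48],
}
SUBJECTS = "ABCDEF"
TASKS = ("CLOSED-EYES", "OPEN-EYES")


def mean(values):
    return sum(values) / len(values)


# Mean over the six subjects for each (task, classifier) pair.
overall = {key: round(mean(values), 2) for key, values in AVERAGES.items()}

# Subject-task combinations in which the NN average beat the SVM average.
nn_better = [
    (task, subject)
    for task in TASKS
    for subject, nn, svm in zip(SUBJECTS,
                                AVERAGES[(task, "NN")],
                                AVERAGES[(task, "SVM")])
    if nn > svm
]

for key, value in overall.items():
    print(key, value)
print(nn_better)
```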

The input feature vectors to the classifiers were extracted from the EEG signals obtained in the experiments described in Section 2. The EEG signals were recorded while the subjects were imagining one of the four motions of the mobile robot. Each extracted feature vector therefore belongs to one of the four classes representing the mobile robot motions: move forward, backward, to the left, and to the right. In the experiments, the stacked autoencoder NNs and the nonlinear SVMs with RBF kernel function were trained to recognize the class of the input feature vectors.

The NNs reduced the dimensionality of the input feature vectors through the hidden layers and finally classified them at the softmax output layer. In contrast, the nonlinear SVMs transform the input feature vectors into a higher dimensional feature space with the RBF kernel function so that a decision boundary can linearly separate the feature vectors into classes more easily. This transformation to a higher dimensional space might explain why the SVMs achieved better classification performance.

5. CONCLUSION

This paper presented the comparative experimental results of two machine learning methods, SVM with RBF kernel function and stacked autoencoder NN with a softmax output layer, which were trained as classifiers for EEG-based BCI mobile robot control. The experimental results showed that the SVMs with RBF kernel function outperformed the NNs trained with hyperparameter optimization in almost all cases. The authors presume that the transformation of the feature vectors into a higher dimensional feature space was a key factor in the improvement achieved by the SVMs. From that point of view, the authors will design an EEG pattern classification method, including feature extraction, to achieve practical EEG-based BCI mobile robot control.

Authors Introduction

Mr. Yasushi Bandou

Mr. Yasushi Bandou is a master student at Graduate School of Computer Science and Systems Engineering, Kyushu Institute of Technology. His main interest is brain computer interface using support vector machine.


Mr. Takuya Hayakawa

Mr. Takuya Hayakawa is a master student at Graduate School of Computer Science and Systems Engineering, Kyushu Institute of Technology. His main interest is brain computer interface using neural network.


Dr. Jun Kobayashi

Dr. Jun Kobayashi is an Associate Professor at Department of Systems Design and Informatics, Kyushu Institute of Technology. He obtained his PhD degree at Kyushu Institute of Technology in 1999. Currently, his main interests are Robotics, Physiological Computing, and Cybernetic Training.


REFERENCES

Cite this article

Yasushi Bandou, Takuya Hayakawa, Jun Kobayashi (2019). Preliminary Comparative Experiments of Support Vector Machine and Neural Network for EEG-based BCI Mobile Robot Control. Journal of Robotics, Networking and Artificial Life, 5(4), 269–272. https://doi.org/10.2991/jrnal.k.190220.014