Development of the Image-based Flight and Tree Measurement System in a Forest using a Drone

- DOI

- 10.2991/jrnal.k.200528.003

- Keywords

- Deep learning; region convolutional neural networks; structure from motion; simultaneous localization and mapping; recognition of trees; drone in a forest

- Abstract

Drones have long been used for many purposes. In particular, automatic observation systems, such as drone-based measurement for primary industries, have been researched frequently in recent years. Measuring tree growth in a forest is one such drone application. In this study, our aim is to develop an automatic system for measuring the size of trees in a forest. The difficulties are that a drone has to recognize trees, construct a map of the forest and measure the size of trees using only a front camera. To overcome these difficulties, we propose that a drone recognizes trees with a single shot multibox detector (SSD), constructs a map with simultaneous localization and mapping (SLAM) and measures trees with structure from motion (SfM). Experimental results from a drone competition show that the drone was able to recognize a tree and fly safely.

- Copyright

- © 2020 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

Forests occupy about 70% of Japan's total land area, and artificial forests make up 30% of Japanese forests. Maintaining and managing forests is important because run-down forests can cause disasters; however, forest upkeep is expected to become difficult in the near future as the population of Japan ages rapidly. Hence, forestry companies and their workers strongly demand an autonomous maintenance and management system [1].

Japanese forests typically grow on mountains, so the forest floor has many rocks and slopes. In addition, the ground is usually covered by underbrush. For these reasons, robots with wheels or crawlers are difficult to use in a maintenance and management system. To solve this difficulty, we propose to use a drone to develop an autonomous maintenance and management system for forests. Drone capabilities have made impressive progress in recent years, and drones can now fly stably in a wide range of situations. On the other hand, drones have several weak points when flying in a forest; therefore, they are generally radio-controlled by a human operator. Most drones use the Global Positioning System (GPS) for position estimation and measure their height above the ground with altimeters or ultrasonic sensors. GPS signals may be blocked by trees or distorted by reflected signals (the multipath problem); therefore, receiving GPS signals accurate enough for map construction and position estimation is difficult in a forest. In addition to the problem of flight control, developing an autonomous maintenance and management system requires that a drone autonomously recognize trees, construct a map without GPS signals and measure the size of a tree. We previously proposed a method that recognizes a color marker on a target tree from a drone camera based on a particle filter algorithm [2]. That method succeeded in recognizing the marked tree; however, it was not able to construct a map or to recognize general trees without color markers.

Anzai et al. [3] proposed combining a Laser Range Finder (LRF) with a camera on a drone. Their method selects candidate trees and measures them using information from the LRF, then recognizes the color markers of target trees with the camera. In addition to recognizing trees, their method simultaneously constructs a map of the forest. The problem with their approach is that the recognition method needs color markers; namely, the drone alone cannot recognize the category of a tree. This means that their method cannot be applied to environments with a mix of several types of trees. Moreover, it needs a large and powerful drone because it carries several sensors and a microcomputer.

In contrast, our aim is to develop a system that uses a small, low-powered drone. To realize this aim, we propose to apply “Object Category Recognition” based on a Convolutional Neural Network (CNN) to the recognition of trees, “Structure Recognition” based on Structure from Motion (SfM) to the measurement of tree size and “Map Construction” based on Simultaneous Localization and Mapping (SLAM) to the construction of a forest map. In addition, our proposal uses only a camera because our drone cannot fly while carrying other sensors. To the best of our knowledge, no existing proposal applies these algorithms to an autonomous maintenance and management system for forests; hence, our proposal has academic novelty. Experimental results from a drone competition show that the drone was able to recognize a tree and fly safely.

This paper is organized as follows. Section 2 describes the CNN, SfM and SLAM methods used in our system. Section 3 presents the experiments and their results. Finally, Section 4 concludes this paper.

2. METHODS FOR OUR SYSTEM

In this section, we summarize the algorithms used in our proposal without equations, owing to the complexity of each algorithm and space limitations. Please see the references for the details of each algorithm.

2.1. Convolutional Neural Network

The Convolutional Neural Network is one of the Deep Learning algorithms [4]. The success of CNNs can be said to have been a springboard for attracting attention to multi-layered (deep) neural networks. A notable strength of CNNs is that they can classify the category of an object in an image with high accuracy.

A basic CNN has two types of layers, convolutional layers and pooling layers, which are used in pairs. Convolutional layers learn the features of small areas in images, and pooling layers integrate the outputs of the convolutional layers. With this structure, a basic CNN learns the category of the object in an image. For example, given images of dogs and cats, a CNN classifies those images into two classes. To realize our aim, however, the classifier has to recognize where the target objects are in an image; hence, a basic CNN cannot be applied to our aim.
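The pairing of a convolutional layer with a pooling layer can be illustrated with a minimal sketch in plain Python. The image, the kernel weights and the function names `conv2d_valid` and `max_pool` are illustrative assumptions and are not taken from our system; in a real CNN the kernel weights are learned from data.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Keep the largest response in each non-overlapping size x size block."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
# In a CNN these weights are learned, not chosen by hand.
kernel = [[-1, 1], [-1, 1]]

features = conv2d_valid(image, kernel)  # 4x4 map of local edge responses
pooled = max_pool(features)             # 2x2 summary of those responses
```

The convolution responds only where the small local pattern (the edge) occurs, and the pooling step keeps the strongest response in each block, which is the sense in which pooling "integrates" the convolutional outputs.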

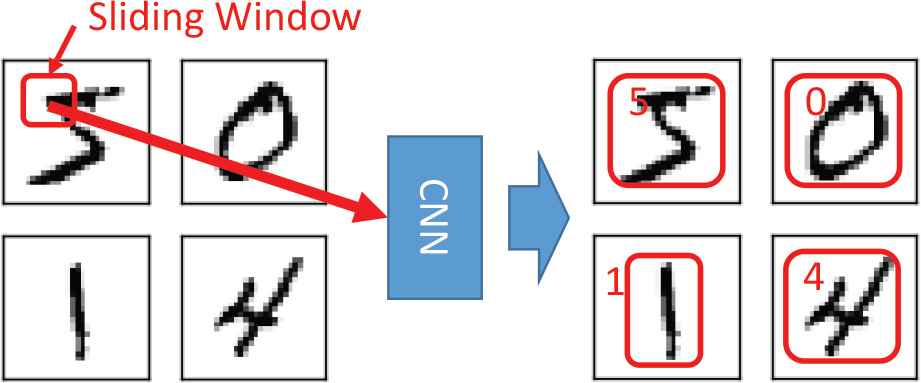

In contrast to the basic CNN, a CNN-based approach that recognizes each object in an image is called Region CNN (R-CNN) [5]. Figure 1 illustrates the structure of a typical R-CNN.

Figure 1. The structure of a typical R-CNN.

The R-CNN algorithm splits an image into small regions by using a sliding window, as shown in Figure 1. The size and position of the sliding window vary, so many small regions are generated. The CNN in R-CNN learns the features of these small regions in order to classify objects, so R-CNN can recognize several types of objects and the positions of target objects in an image. A typical R-CNN is known to perform very well; on the other hand, it needs very high computational capability because of the huge number of inputs (small regions). Several approaches for reducing the cost of R-CNN have been proposed, e.g., Fast R-CNN. In this research, we apply the Single Shot MultiBox Detector (SSD) [5] to the recognition of trees because SSD has a low computational cost. The characteristic of SSD is that an image is split into a grid of fixed-size cells instead of using a sliding window, and SSD learns the features of objects in particular cells. As a result, the computational cost decreases drastically. However, the recognition rate of SSD is about 10% lower than that of other R-CNN methods according to [5], so in the experiments we validate whether SSD can distinguish trees with a good recognition rate.
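The difference in the number of inputs can be made concrete with a small counting sketch. It only mirrors the simplified description above: the image size, window sizes, stride and grid resolution are illustrative assumptions, and the published SSD additionally uses default boxes on several feature-map scales, which this sketch does not model.

```python
def sliding_windows(img_w, img_h, sizes, stride):
    """Enumerate (x, y, w, h) windows of several sizes at every stride step."""
    windows = []
    for w, h in sizes:
        for y in range(0, img_h - h + 1, stride):
            for x in range(0, img_w - w + 1, stride):
                windows.append((x, y, w, h))
    return windows


def grid_cells(img_w, img_h, cells_per_side):
    """Split the image into a fixed cells_per_side x cells_per_side grid."""
    cw, ch = img_w / cells_per_side, img_h / cells_per_side
    return [(col * cw, row * ch, cw, ch)
            for row in range(cells_per_side)
            for col in range(cells_per_side)]


# Illustrative numbers only: a 300x300 input, three window sizes, stride 10.
windows = sliding_windows(300, 300, [(50, 50), (100, 100), (150, 150)], 10)
cells = grid_cells(300, 300, 10)
print(len(windows), "candidate windows vs", len(cells), "grid cells")
```

With a sliding window, every candidate region becomes a separate input to the CNN, whereas a fixed grid is evaluated in a single pass over the image, which is where the saving in computation comes from.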

2.2. Structure from Motion

Measuring the size of trees is important for an autonomous maintenance and management system in a forest. We propose to apply SfM [6] to generate 3D tree models for measuring the size of a tree. The measurement algorithm compares a reference (criterial) tree with the target tree based on the generated 3D tree models. A diagram of SfM is shown in Figure 2. In summary, SfM generates 3D models by integrating a series of 2D images.

Figure 2. Diagram of structure from motion.

The details of the SfM algorithm are as follows. First, it finds corresponding points across a series of images based on an Optical Flow algorithm. Second, the sets of corresponding points are merged into a single set, and point group data (a point cloud) are generated from those points. Finally, a 3D image model is generated by pasting the series of images onto the point group data. Note that the point group data already correspond to a 3D model, so measuring the size of trees does not necessarily require a 3D image model; therefore, generating the point group data and establishing correspondences among images are the most important steps for measurement.
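Because a reconstruction from a single moving camera is determined only up to an unknown scale, comparing the target tree with a tree of known size is what turns point group data into a physical measurement. The following is a minimal sketch of that comparison, not our implementation. It assumes that both point groups come from the same reconstruction, that the third coordinate is vertical, and that the real height of the reference tree is known; the function names and the numbers are hypothetical.

```python
def height_of(points):
    """Vertical extent of a point group, in the reconstruction's own units."""
    zs = [p[2] for p in points]
    return max(zs) - min(zs)


def estimate_height_m(target_points, reference_points, reference_height_m):
    """Scale the target's extent by the metres-per-unit of the reference tree."""
    metres_per_unit = reference_height_m / height_of(reference_points)
    return metres_per_unit * height_of(target_points)


# Hypothetical point groups (x, y, z) in arbitrary reconstruction units.
reference_tree = [(0.0, 0.0, 0.0), (0.1, 0.0, 1.0), (0.0, 0.1, 2.0)]
target_tree = [(3.0, 1.0, 0.5), (3.1, 1.0, 2.0), (3.0, 1.1, 3.5)]

# Assume the reference tree is known to be 10 m tall.
estimated = estimate_height_m(target_tree, reference_tree, 10.0)
```

The same ratio could be applied to other extents, such as trunk width, provided the corresponding points can be isolated in both point groups.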

2.3. Simultaneous Localization and Mapping

A drone needs a map of the forest in order to measure the size of trees. However, it is difficult to prepare an exact map of a forest in advance because the state of a forest varies with the seasons. For this reason, constructing an exact map of the forest is also important for an autonomous maintenance and management system. We propose to apply SLAM [7] to construct this map. SLAM is a method that estimates position (localization) and constructs a map (mapping) simultaneously, using only sensory information and robot control signals. Several types of SLAM have been proposed; we apply Visual SLAM to map construction. Visual SLAM constructs a map and estimates position from a series of images, so Visual SLAM is the same as SfM with respect to generating point group data; namely, SfM uses point group data to construct 3D image models, and Visual SLAM uses them for localization and mapping. There are several Visual SLAM methods; we use Oriented FAST and Rotated BRIEF (ORB)-SLAM [8] because ORB-SLAM shows good performance in mapping and localization.
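ORB features are described by binary strings, so corresponding points between two images can be found by comparing descriptors with the Hamming distance. The sketch below illustrates only this idea with toy 8-bit descriptors and a mutual nearest-neighbour check; it is not the matching procedure of ORB-SLAM itself, and the function names, descriptors and threshold are assumptions made for illustration.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(desc_a, desc_b, max_distance):
    """Cross-checked nearest-neighbour matching between two descriptor lists."""
    def nearest(query, candidates):
        return min(range(len(candidates)),
                   key=lambda k: hamming(query, candidates[k]))

    matches = []
    for i, d in enumerate(desc_a):
        j = nearest(d, desc_b)
        # Keep the pair only if it is mutual and close enough.
        if nearest(desc_b[j], desc_a) == i and hamming(d, desc_b[j]) <= max_distance:
            matches.append((i, j))
    return matches


# Toy 8-bit descriptors; real ORB descriptors are 256 bits long.
frame1 = [0b10110010, 0b01001101, 0b11110000]
frame2 = [0b01001111, 0b10110011, 0b00001111]
matches = match_descriptors(frame1, frame2, max_distance=2)
```

Each pair `(i, j)` in `matches` says that feature `i` in the first frame and feature `j` in the second frame are treated as the same physical point, which is the input that both localization and point group generation rely on.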

3. EXPERIMENTS

We conducted two kinds of experiments: one in the laboratory and one in “The 4th Forestry Drones & Robots Competition” [1]. This section describes the drone and then the experimental results. Because of the competition, our target tree is cedar, so our final goal is to construct an autonomous cedar maintenance and management system.

3.1. Drone: Tello

In this research, we use a “Tello” drone [9]. Tello has a 5-megapixel front camera with an 82.6° field of view (FOV). Tello weighs about 80 g, so it is a very light drone. Our system controls Tello from a laptop PC via a WiFi connection.
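This paper does not list the control code, so the following is only a sketch of how a laptop could command Tello over WiFi, assuming the plain-text UDP commands of the publicly documented Tello SDK (`command`, `takeoff`, `land` and moves of 20 to 500 cm, sent to 192.168.10.1 on port 8889). The helper names `move_command`, `send` and `short_hop` are hypothetical.

```python
import socket

TELLO_ADDRESS = ("192.168.10.1", 8889)  # default address in the Tello SDK
MOVE_DIRECTIONS = {"up", "down", "left", "right", "forward", "back"}


def move_command(direction, distance_cm):
    """Build a Tello SDK move command such as 'forward 50'."""
    if direction not in MOVE_DIRECTIONS:
        raise ValueError(f"unknown direction: {direction}")
    if not 20 <= distance_cm <= 500:
        raise ValueError("the SDK accepts distances from 20 to 500 cm")
    return f"{direction} {int(distance_cm)}"


def send(sock, command, address=TELLO_ADDRESS, timeout_s=7.0):
    """Send one text command over UDP and return the drone's reply."""
    sock.settimeout(timeout_s)
    sock.sendto(command.encode("ascii"), address)
    reply, _ = sock.recvfrom(1024)
    return reply.decode("ascii", errors="replace").strip()


def short_hop(sock):
    """Enter SDK mode, take off, move forward 50 cm, and land."""
    return [send(sock, cmd)
            for cmd in ("command", "takeoff", move_command("forward", 50), "land")]
```

With the laptop joined to the drone's WiFi network, calling `short_hop` with a socket created by `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` would run the sequence and return the replies; if the drone does not answer within `timeout_s`, `send` raises a timeout error instead of blocking.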

3.2. Experiment 1: In Laboratory

First, we show the results of R-CNN. In this study, we use the Google Object Detection API [10] for R-CNN to accelerate development. For the R-CNN experiment, we took a video of cedars in advance in a forest near our university. We used that video for training R-CNN and for its evaluation. Figure 3 shows the recognition result.

Figure 3. Recognition result of a cedar.

In this result, R-CNN found a cedar in the region enclosed by the green rectangle; hence, R-CNN succeeded in recognizing a cedar even though part of the cedar is hidden by other trees. For this reason, it can be said that the SSD algorithm has sufficient ability to find and recognize cedars.
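A rectangle like the one described above can be obtained from the detector output by keeping only confident cedar detections and converting their boxes to pixels. The sketch below assumes the output layout of the TensorFlow Object Detection API, in which each box is given as normalized `[ymin, xmin, ymax, xmax]` with a score and a class id per box. The detections, the class id for cedar, the frame size and the 0.5 threshold are made-up values for illustration.

```python
def cedar_boxes(boxes, scores, classes, cedar_class_id, img_w, img_h, min_score=0.5):
    """Return pixel rectangles (left, top, right, bottom) for confident cedar detections."""
    rects = []
    for (ymin, xmin, ymax, xmax), score, cls in zip(boxes, scores, classes):
        if cls == cedar_class_id and score >= min_score:
            rects.append((round(xmin * img_w), round(ymin * img_h),
                          round(xmax * img_w), round(ymax * img_h)))
    return rects


# Made-up detections for a 960x720 frame; class id 1 stands for "cedar" here.
boxes = [(0.10, 0.40, 0.90, 0.60), (0.20, 0.05, 0.80, 0.15), (0.00, 0.70, 1.00, 0.95)]
scores = [0.92, 0.35, 0.81]
classes = [1, 1, 2]
rects = cedar_boxes(boxes, scores, classes, cedar_class_id=1, img_w=960, img_h=720)
```

In this example the second detection is dropped because its score is below the threshold and the third because it belongs to another class, so only one rectangle remains to be drawn.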

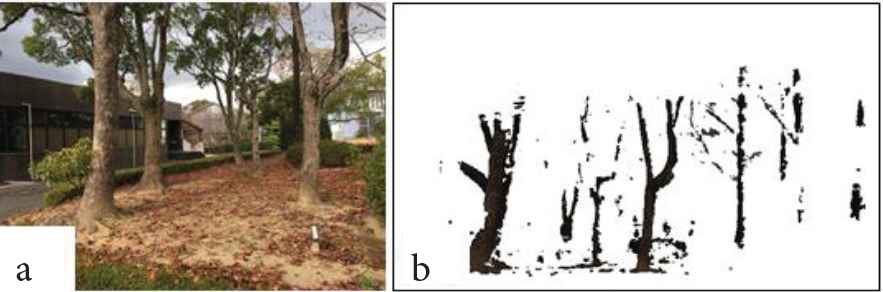

Second, we show the result of SfM. The 3D image model was constructed by SfM in real time while Tello was flying. In this study, we use OpenMVG, OpenMVS and MeshLab [11]. Figure 4 shows the point group data generated for trees at our university.

Figure 4. Generation of point group data. (a) Target trees. (b) Point group data.

Comparing Figure 4b with Figure 4a, the point group data are generated only from the trees and not from the objects behind them.

Figure 5 shows the 3D image model constructed for a pillar at our university.

Figure 5. Result of SfM. (a) Target pillar. (b) and (c) 3D image model.

Figures 5b and 5c show that we were able to generate 3D images rather than planar images, so we succeeded in constructing a 3D image model by using SfM.

Finally, we show the result of SLAM. As with R-CNN and SfM, we use an open library, ORB-SLAM [8], to construct a map of the hall. Figures 6a and 6b show the detection of corresponding points in an image and the constructed map of the hall, respectively.

Figure 6. Result of SLAM. (a) Detection of corresponding points. (b) Generated hall map.

Figure 6a shows that corresponding points were accurately detected across a series of images, and Figure 6b shows that a map of the area surrounding a pillar in the hall was correctly generated; therefore, we succeeded in constructing a map based on SLAM.

3.3. Experiment 2: Competition

We integrated R-CNN, SfM and SLAM in order to develop the autonomous maintenance and management system; then, we participated in the drone competition [1] to evaluate our system. Figure 7 illustrates part of a session of that competition. There are several target trees in the competition field. One of the target trees is the reference (criterial) tree, which has color markers. To score points, a drone has to approach to within about 1 m of a target tree and then measure the size of that tree.

Figure 7. Illustration of part of a session of the competition [1].

Figure 8 shows a scene in which the drone flies to measure the criterial tree with our system.

Figure 8. The scene of flight of the drone (in competition).

As shown in Figure 8, the drone succeeded in approaching the criterial tree because the R-CNN and SfM modules operated correctly; however, the drone was not able to construct a forest map because of a problem in the SLAM module. From the result of the drone competition, it can be said that we succeeded in developing part of the autonomous maintenance and management system.

4. CONCLUSION

In this study, our aim was to develop an automatic system for measuring the size of trees in a forest. The difficulties are that a drone has to recognize trees, construct a map of the forest and measure the size of trees using only a front camera. To overcome these difficulties, we proposed that a drone recognizes trees based on R-CNN, constructs a map with SLAM and measures trees with SfM. As a result, it can be said that we succeeded in developing part of the autonomous maintenance and management system. Precise integration of R-CNN, SfM and SLAM is left for further study.

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

AUTHORS INTRODUCTION

Dr. Keiji Kamei

In 2007, he obtained his PhD (Engineering) at the Department of Brain Science and Engineering, Kyushu Institute of Technology. From April 2007 to March 2014, he was a lecturer. Since April 2014, he has been an associate professor at Nishinippon Institute of Technology. His research interests are Artificial Neural Networks, Artificial Intelligence and Computer Science. He is a member of JNNS, APNNS, IPSJ, IEICE and SOFT.


Mr. Masahiro Kaneoka

He is an undergraduate research member of the Kamei laboratory at Nishinippon Institute of Technology. His research interests are Web Marketing and Web Design.


Mr. Ken Yanai

He is an undergraduate research member of the Kamei laboratory at Nishinippon Institute of Technology. His research interest is Computer Aided Education.


Mr. Masaya Umemoto

He is an undergraduate research member of the Kamei laboratory at Nishinippon Institute of Technology. His research interest is Artificial Life Systems.


Mr. Hiroki Yamaguchi

He is an undergraduate research member of the Kamei laboratory at Nishinippon Institute of Technology. His research interest is Artificial Neural Networks.


Mr. Kazuki Osawa

He is an undergraduate research member of the Kamei laboratory at Nishinippon Institute of Technology. His research interest is Artificial Neural Networks.


REFERENCES

Cite this article

TY - JOUR
AU - Keiji Kamei
AU - Masahiro Kaneoka
AU - Ken Yanai
AU - Masaya Umemoto
AU - Hiroki Yamaguchi
AU - Kazuki Osawa
PY - 2020
DA - 2020/06/02
TI - Development of the Image-based Flight and Tree Measurement System in a Forest using a Drone
JO - Journal of Robotics, Networking and Artificial Life
SP - 86
EP - 90
VL - 7
IS - 2
SN - 2352-6386
UR - https://doi.org/10.2991/jrnal.k.200528.003
DO - 10.2991/jrnal.k.200528.003
ID - Kamei2020
ER -